Month: June 2025

Blog

Building a Resilient Multi‑Layer Backup Strategy

Almost all modern businesses rely on data, so losing it puts their finances and reputations on the line. Catastrophic data loss is a real threat for organizations of all sizes.

Ransomware often targets this data, and the methods being used today by cybercriminals are becoming increasingly sophisticated.

Whether the cause is hardware failure, human error, or a breach by an insider or a third party, modern server backup solutions can help provide multi‑layered resilience.

These solutions have also come a long way to counter cybercriminals’ refinements and now go well beyond nightly tape rotations.

Modern methods embrace automation and restore faster than ever before, helping IT managers maintain business continuity, compliance, and ransomware resilience while minimizing downtime.

Backup Strategy Evolution: From Single‑Copy to Multi‑Tier

Single‑copy or tape‑only backups were once the standard, but both carry risks. Recovery is slow, and if the only backup set is destroyed or infected, the data is permanently lost.

While tapes remain useful for long‑term archiving, modern threats require more advanced solutions. A combination of multiple copies and periodic snapshots can help combat these threats, especially when coupled with off‑site replication.

Redundancy, immutability, recovery speed, automation, and security are all key aspects. The table below compares legacy methods with modern solutions:

| Aspect | Legacy Methods (Single‑Copy/Tape) | Modern Solutions |
|---|---|---|
| Redundancy | Single backup, stored on‑site or off‑site | Multiple copies across diverse locations (cloud, multiple data centers) |
| Immutability | No safeguard against malicious encryption | Immutable snapshots prevent alteration |
| Recovery Speed | Slow, manual tape retrieval and system rebuild | Ultra‑fast, granular restore of files, volumes, or entire servers in minutes |
| Automation | Mostly manual, with the risk of human error | Policy‑based orchestration to automate schedules and replication |
| Security | Vulnerable to mishandling, theft, or environmental damage | Air‑gapped object storage plus encryption, access controls, and multi‑cloud redundancy |

Organizations are obligated to meet regulatory requirements, and when ransomware strikes or something else goes wrong, critical data ultimately needs to be restored without delay.

Ransomware Resilience: Data Immutability

Modern ransomware attacks often target backup repositories, encrypting or deleting the data to force payments.

An effective countermeasure is immutable snapshots, which lock the backup data for a set period of time so that it can’t be changed or erased by anyone, regardless of their privileges.

These immutable snapshots are “write once, read many,” meaning that if a malware infection strikes at midnight, a snapshot taken at 11:59 PM remains uncompromised.
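
The write‑once, read‑many behavior described above can be illustrated with a minimal sketch. It assumes a hypothetical in‑memory `SnapshotStore` (not any real product's API) in which each snapshot carries a lock expiry, and deletion or overwriting is refused until that expiry passes, no matter who asks.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


class RetentionLockError(Exception):
    """Raised when a locked snapshot would be overwritten or deleted."""


@dataclass
class SnapshotStore:
    # Hypothetical in-memory stand-in for a WORM-capable backup repository.
    # Maps snapshot name -> (data, locked_until).
    _snapshots: dict = field(default_factory=dict)

    def write(self, name: str, data: bytes, taken_at: datetime, lock: timedelta) -> None:
        # Write once: an existing snapshot can never be overwritten.
        if name in self._snapshots:
            raise RetentionLockError(f"{name} already exists; snapshots are write-once")
        self._snapshots[name] = (data, taken_at + lock)

    def read(self, name: str) -> bytes:
        # Read many: reads are always allowed.
        return self._snapshots[name][0]

    def delete(self, name: str, now: datetime) -> None:
        # Deletion is refused for every caller until the lock expires.
        _, locked_until = self._snapshots[name]
        if now < locked_until:
            raise RetentionLockError(f"{name} is locked until {locked_until}")
        del self._snapshots[name]
```

In this sketch, a snapshot written at 11:59 PM with a 14‑day lock cannot be deleted at midnight; `delete` raises `RetentionLockError` while `read` still returns the original bytes.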

Additionally, these backups are bolstered via multi‑layer replication across separate clouds or sites to prevent single‑point failure. If one data center backup is compromised, there is another that remains unaffected.

The replication tasks are swift thanks to high‑speed infrastructure and are monitored to identify any unusual activity. Any backup deletions or file encryption spikes are reported through automated alerts, helping to further protect against ransomware.
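
As a rough illustration of the automated alerting mentioned above, the following sketch flags an unusual number of backup deletions by comparing the current count against a recent baseline. The function name, the three‑sigma threshold, and the minimum floor are all assumptions chosen for the example; real platforms use their own detection logic.

```python
from statistics import mean, pstdev


def is_deletion_spike(history: list[int], current: int,
                      sigmas: float = 3.0, floor: int = 5) -> bool:
    """Return True if `current` deletions exceed the recent baseline.

    `history` holds deletion counts from previous intervals (must be non-empty).
    The threshold is the baseline mean plus `sigmas` standard deviations,
    but never lower than `floor`, so quiet systems do not alert on noise.
    """
    threshold = max(mean(history) + sigmas * pstdev(history), floor)
    return current > threshold
```

With a quiet history such as `[0, 1, 0, 2, 1]`, two deletions in an interval would pass unnoticed, while forty would trigger an alert.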

How Multi‑Location Replication Ensures Business Continuity

If a single site is compromised through failures or attacks, the data must remain recoverable. Major outages and regional disasters can be covered with multi‑cloud and geo‑redundancy to keep backups safe.

With a local backup, you have the ability to restore rapidly, but you shouldn’t put all of your eggs into one basket. By distributing backups with geographic separation, such as a second copy in a data center in another region or an alternate cloud platform, you avoid this pitfall.

With the right implementation, you can restore vital services properly, such as key business systems and line‑of‑business applications, keeping you protected against hardware failures and cyberattacks while adhering to Recovery Time Objectives (RTOs).

The more diverse and concurrent your backups are, the better your IT department can meet these RTOs.

Another strong method organizations often employ to ensure a fallback copy is always available is the “3‑2‑1‑1‑0” rule. That is to say, they have three copies, in two distinct forms of media, with one kept off‑site, one immutable, and zero errors.
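
The rule lends itself to a simple automated check. The sketch below assumes a hypothetical `BackupCopy` record describing each copy and reports which parts of the rule a given set of copies satisfies; the field names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    media: str           # e.g. "disk", "tape", "object-storage"
    offsite: bool        # stored away from the primary site
    immutable: bool      # protected by an immutability lock
    verify_errors: int   # errors found in the last restore verification


def check_3_2_1_1_0(copies: list[BackupCopy]) -> dict[str, bool]:
    """Evaluate each clause of the 3-2-1-1-0 rule for the given copies."""
    return {
        "three_copies": len(copies) >= 3,
        "two_media": len({c.media for c in copies}) >= 2,
        "one_offsite": any(c.offsite for c in copies),
        "one_immutable": any(c.immutable for c in copies),
        "zero_errors": all(c.verify_errors == 0 for c in copies),
    }
```

A plan passes only when every value in the returned dictionary is `True`, which makes the check easy to wire into a scheduled report.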

Minimizing Downtime with Ultra‑Fast Granular Restores

While multiple secure backups are key, they are only half of the solution. Regardless of the lengths you go to back up and store data, flexibility and speedy recovery are required for these efforts to succeed.

Organizations must be capable of recovering data without rolling back the entire server, which is where granular recovery comes into play. Downtime can be reduced by restoring only what’s needed, be it a single database table or mailbox. That way, systems remain operational throughout restoration.

If the platform allows, you can also boot the server directly from the backup repository for instant recovery. This keeps downtime to a minimum: a server hosting critical services can keep operating from the backup after a failure while its data is copied back to production hardware.

With granular recovery and instant recovery, organizations can easily address regulatory demands and maintain business continuity.

Using Air‑Gapped Object Storage as a Final Defense

Live environments can still be devastated by a sophisticated attack despite a strong multi‑layered approach to securing data servers. The same can be said for connected backups, especially when it comes to insider threats. The solution is keeping isolated copies off‑network, otherwise known as air‑gapped backups, much like traditional tape stored offsite once did.

The modern approach differs slightly, raising the level of protection by keeping dormant offline copies on disconnected arrays or object storage. They connect only for a short window to perform scheduled replication; outside that window, the data is physically or logically unreachable and cannot be altered. If the live data is compromised, the dormant copy acts as a final defense, becoming a vital lifeline.

Policy‑Based Automation as a Strong Operational Foundation

Manual backup management is error‑prone. With policy‑based orchestration, IT can streamline backup management by defining schedules and setting retention and replication rules once, which removes many of the mistakes that manual processes invite.

Once IT has defined backup policies, the system automatically applies them across servers, clouds, and storage tiers, keeping everything consistent no matter the scale of operations.

For example, admins can tag servers as “production” so that they are backed up hourly and replicated off‑site with two‑week immutability locks, without the need for continual human intervention. Scheduled test restores can also be automated on many platforms to simulate recovery under real conditions, saving time, reducing risks, and demonstrating proof of recoverability for regulatory requirements.
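
A tag‑driven policy of this kind can be sketched as a small lookup table. The `BackupPolicy` type, the `POLICIES` mapping, and the daily "default" policy are assumptions made for illustration; only the hourly, off‑site, two‑week‑lock "production" policy comes from the example above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class BackupPolicy:
    interval: timedelta        # how often to back up
    replicate_offsite: bool    # whether to copy each backup off-site
    immutability: timedelta    # how long each backup stays locked


POLICIES = {
    # From the text: hourly backups, off-site replication, 2-week lock.
    "production": BackupPolicy(timedelta(hours=1), True, timedelta(weeks=2)),
    # Assumed fallback for untagged servers (not from the source).
    "default": BackupPolicy(timedelta(days=1), False, timedelta(0)),
}


def policy_for(tags: set[str]) -> BackupPolicy:
    """Pick the policy for a server based on its tags."""
    return POLICIES["production"] if "production" in tags else POLICIES["default"]


def backup_due(last_backup: datetime, now: datetime, policy: BackupPolicy) -> bool:
    """Return True once the policy's interval has elapsed since the last backup."""
    return now - last_backup >= policy.interval
```

A scheduler would call `policy_for` for each server and trigger a backup whenever `backup_due` returns `True`, so adding a tag is all it takes to change how a server is protected.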

How Modern Server Backup Systems Support Compliance

HIPAA and GDPR both demand reliable data protection and recovery. Article 32 of GDPR, for example, calls for the ability to restore the availability of and access to personal data in a timely manner after a physical or technical incident. Similar expectations apply in finance (SOX) and payments (PCI‑DSS).

Modern server backup solutions help organizations fulfil these mandates by enabling encrypted backups and off‑site redundancy. Immutability and air‑gapping protect the integrity of the data, and having documented restore tests to hand is beneficial for audits.

Through automation, scheduled backups can help meet retention rules, such as retaining monthly copies for seven years, as you have easy access to historical snapshots. These automated backups satisfy legal obligations and are a reassuring trust factor for customers.

Technical Infrastructure: Laying the Foundations of a Robust Backup Plan

A robust backup plan rests upon solid technical infrastructure. Efficient replication requires high‑bandwidth links (10, 40, 100 Gbps or more) along with ample power and cooling. Without redundant power, continuous uptime is not achievable. To meet these requirements, consider Tier III/IV data centers from providers with global locations for true geographical redundancy.
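
To see why link speed matters for replication, a back‑of‑the‑envelope estimate helps. The sketch below computes the ideal transfer time for a backup set over a given link; the function name and the `efficiency` parameter (for protocol overhead and contention) are assumptions, and real transfers will be slower than this best case.

```python
def transfer_hours(data_terabytes: float, link_gbps: float,
                   efficiency: float = 1.0) -> float:
    """Best-case hours needed to move `data_terabytes` over a `link_gbps` link.

    Uses decimal units (1 TB = 1e12 bytes, 1 Gbps = 1e9 bits/s).
    `efficiency` scales the usable share of the link (1.0 = ideal).
    """
    bits = data_terabytes * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600
```

Under these assumptions, replicating 10 TB takes about 2.2 hours over a 10 Gbps link and about 13 minutes over 100 Gbps.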

Melbicom has a worldwide data center presence in over 20 locations, operating with up to 200 Gbps server connections for rapid backup transfers. Melbicom enables data replication in different regions, with reduced latency, helping organizations comply with data sovereignty laws. We have more than 1,000 ready‑to‑go dedicated server configurations, meaning you can scale to match demands, and should an issue arise, Melbicom provides 24/7 support.

How to Implement a Server Backup Solution that is Future‑Proof

Backup architecture needs to be constructed strategically. With the following technologies applied, you have a solid server backup solution to withstand the future:

- Lock data through the use of Immutable Snapshots to prevent tampering.

- Spread data across multiple clouds and sites with Multi‑Cloud Replication.

- Minimize downtime by performing a Granular Restore and recovering only what is necessary.

- Keep an offline failsafe using Air‑Gapped Storage for worst‑case scenarios.

- Automate schedules, retention, and verification ops with Policy‑Based Orchestration.

Remember that the backup environment needs regular testing to verify that it is running smoothly and that you will be protected should the worst occur. Test with real and simulated restoration to make sure backups remain valid over time, and be sure to encrypt in transit and at rest with strict access controls.

Conclusion: Always‑On Data Protection on the Horizon

Single‑copy tape backups are a thing of the past: modern hardware and evolving cybercrime methods require modern, multi‑level, automated solutions.

To thwart today’s ransomware, prevent insider threats, and cope with rigorous regulatory audits, immutable snapshots, multi‑cloud replication, granular restores, and air‑gapped storage are needed.

Leveraging these technologies collectively with solid technological infrastructure is the only way to combat modern‑day threats to business‑critical data.

Get Reliable Backup‑Ready Servers

Deploy a dedicated server configured for rapid, secure backups and 24/7 expert support—available in 20+ global data centers.

Get expert support with your services


Reducing Latency and Enhancing Security with Dedicated Server Hosting

Modern digital services are pushing boundaries on speed and safety. Performance-critical platforms—whether running real-time transactions or delivering live content—demand ultra-low latency and airtight security. Even a few extra milliseconds or a minor breach can lead to major losses. In this environment, single-tenant dedicated server hosting stands out for providing both high performance and robust protection. Below, we examine how hosting on a dedicated server addresses these challenges better than multi-tenant setups.

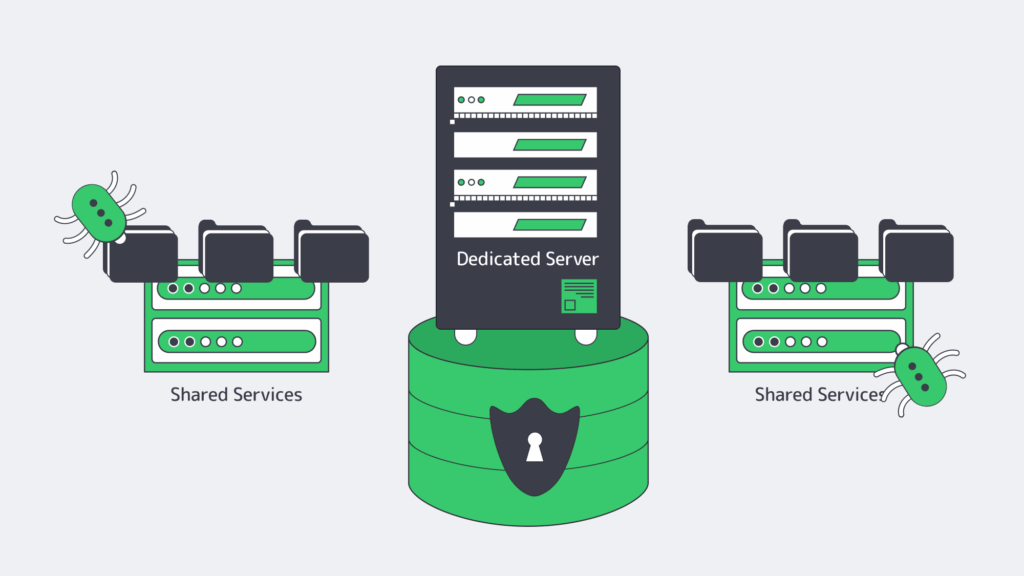

Dedicated Servers vs. Shared Environments: Beyond the “Noisy Neighbor”

In shared or virtualized environments, multiple tenants operate on the same physical hardware, often leading to the “noisy neighbor” issue: resource contention causes performance dips or latency spikes. Virtual machines (VMs) also add a hypervisor layer between the application and hardware, introducing extra overhead. Meanwhile, dedicated servers deliver complete hardware ownership—no risk of interference from other tenants and zero virtualization overhead. This single-tenant approach results in more predictable performance and simpler security management.

| Key Aspect | Dedicated Server (Single-Tenant) | Shared/Virtualized (Multi-Tenant) |
|---|---|---|
| Resource Isolation | 100% yours, no “noisy neighbors” | Resource contention from neighbors |
| Latency & Performance | Bare-metal speed, no hypervisor overhead | Added overhead from shared hypervisor |
| Security | Physical isolation, custom firewalls | Potential cross-VM exploits |

In some workloads, virtualization does have benefits like easy scaling. Yet for consistent performance and stronger security, dedicated hosting remains unmatched. As multi-tenant clouds grow, businesses that need predictable speed and safety at scale have rediscovered the value of dedicated servers.

Sub-10 ms Response Times: How Dedicated Servers Excel in Low-Latency

In latency-sensitive operations, every millisecond counts. Network performance depends on distance, hop count, and how your infrastructure connects to end-users. Dedicated hosting makes it possible to carefully select data center locations—keeping servers near major population centers or key exchange points to achieve sub-10 ms latencies for local users. At scale, those milliseconds translate into smoother user experiences and a measurable competitive advantage.
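
The link between distance and latency can be made concrete with a simple propagation estimate. The sketch below assumes light travels through optical fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum) and ignores routing, queuing, and processing delays, so it gives a lower bound rather than a measured value. The function names are illustrative.

```python
# Approximate signal speed in optical fiber: ~200,000 km/s = 200 km per ms.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(path_km: float) -> float:
    """Lower bound on round-trip time for a one-way fiber path of `path_km`."""
    return 2 * path_km / FIBER_KM_PER_MS


def max_path_km(rtt_budget_ms: float) -> float:
    """Longest one-way fiber path that can still fit within an RTT budget."""
    return rtt_budget_ms * FIBER_KM_PER_MS / 2
```

Under these assumptions, a 10 ms round-trip budget allows at most about 1,000 km of fiber between user and server, before any equipment delay is counted, which is why server placement near users matters so much.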

For example, in electronic trading, a 1 ms improvement can be worth millions to a brokerage firm. While not every platform deals with trades, the same principle applies to anything that needs real-time interaction. A single-tenant server can integrate faster network interface cards and custom kernel drivers, enabling latencies measured in microseconds rather than the tens of milliseconds common in shared environments.

Most importantly, a dedicated environment ensures consistent latency since you’re not competing for network capacity with other clients. Melbicom has 20 PoPs across Europe, Asia, Africa, and the Americas, including a Tier IV facility in Amsterdam with excellent European connectivity. By placing servers strategically across these locations, you can cut response times to single-digit milliseconds for local traffic.

Custom Firewall, Private Networking, and Hardware Root-of-Trust

The Power of Custom Firewalls

In multi-tenant setups, firewall configurations are often limited or standardized to accommodate all users. With a single-tenant dedicated server, you can deploy custom firewall appliances or software, implementing fine-grained rules. This flexibility becomes crucial in mission-critical applications, where each port and protocol demands careful oversight. Dedicated hardware also lets you segment traffic on private VLANs, isolating back-end services from the public internet.

Private Networking for Latency and Security

Another hallmark of dedicated infrastructure is private internal networking—ideal for workloads that involve communication among multiple servers. Instead of routing traffic through external networks, you can use private links that reduce latency and diminish exposure to outside threats. Melbicom offers private networking capabilities so our customers can keep sensitive data flows entirely off the public internet. And with dedicated server hosting, bandwidth can reach up to 200 Gbps per machine, ensuring that large-scale data transfers or intense workloads operate smoothly.

Hardware Root-of-Trust: Effectively Fighting Firmware-Level Attacks

Firmware-level attacks are on the rise, with attackers targeting motherboards, baseboard management controllers, and BIOS code. One powerful defense is a hardware root-of-trust: a secure chip (such as a Trusted Platform Module, or TPM) that verifies server firmware at boot-time. Research from the Trusted Computing Group underscores TPM’s effectiveness at preventing low-level compromises. In a shared virtual environment, you might not have direct access to these safeguards. In a dedicated server, you control secure boot settings and can verify firmware integrity before your OS even starts.

Defending Against Modern Threats

Cyber threats have spiked in frequency and intensity. Cloud infrastructures blocked 21 million DDoS attacks last year, an increase of more than 50% over the previous period. While multi-tenant providers do offer layered defenses, sharing the underlying environment can mean broader attack surfaces. Dedicated servers by nature limit those surfaces. You have exclusive use of the CPU, storage, and network interfaces, making it harder for attackers to exploit side-channel vulnerabilities. You can also implement advanced intrusion detection tools without worrying about impacting other tenants’ performance.

Scalability and Support

Some assume dedicated hosting lacks scalability. But providers like us at Melbicom have simplified server provisioning—our inventory includes more than 1,000 ready-to-go server configurations that can be deployed quickly, often in hours. Monitoring and support are likewise robust. We at Melbicom monitor for unusual traffic patterns, offering DDoS protection where needed. Free 24/7 technical support ensures you can resolve issues anytime. That kind of tailored assistance can be indispensable for mission-critical workloads.

Key Industry Trends Driving Dedicated Server Hosting

Analysts link the rise of dedicated hosting to two major drivers: low-latency requirements and relentless cyber threats. As next-gen networks and real-time applications proliferate, more businesses need near-instant responses. A recent market survey indicated an annual growth rate of over 20% for low-latency communications, suggesting continued demand for infrastructure that can meet sub-10 ms round-trip times.

On the security front, multi-tenant attacks—especially cross-tenant exploits—have grown more sophisticated. This trend pushes organizations toward single-tenant architectures. In some cases, a hybrid model is adopted: critical workloads run on dedicated machines, while less sensitive tasks reside in elastic cloud environments. The net effect is a growing share of infrastructure budgets allocated to hosting on a dedicated server.

| Trend | Impact on Dedicated Hosting |
|---|---|
| Rise of Ultra-Low Latency Apps | Boosts demand for physically close servers offering sub-10 ms or microsecond-level performance |
| Escalating Cyber Threats | Single-tenant designs isolate vulnerabilities; advanced security controls become essential |
| Cost Predictability | Fixed-rate dedicated servers can be more economical at scale than variable cloud pricing |

Conclusion: Single-Tenant Infrastructure for Speed and Safety

For mission-critical services seeking sub-10 ms response times and robust security, single-tenant dedicated infrastructure remains the gold standard. Multi-tenant environments have improved, but noise issues and cross-tenant risks persist. By taking full advantage of custom firewalls, private networking, and hardware root-of-trust, you create a fortified environment with consistent low latency.

Get Your Dedicated Server

Deploy a high-performance dedicated server in hours and gain ultra-low latency with robust security.


Why Choose a Netherlands Dedicated Server for EU Reach

If you run a digital business that requires seamless access throughout Europe, you need a top‑class hosting location. You will find that in the Netherlands. With high‑bandwidth connectivity and a commitment to greener energy solutions, the cost‑effective infrastructure in the Netherlands beats that of most other countries. On top of that, the Netherlands has solid data protection laws, which add another layer of trust and security to this pivotal hosting location. Until recently, many businesses stuck with data centers located in their home countries. Those days are over. Dutch data centers are often faster, more reliable, and better aligned with the legal requirements businesses are now compelled to meet.

The low‑latency pan‑European connectivity provided by a Netherlands dedicated server is a huge advantage to any digital enterprise looking to operate across the EU. To understand why, let’s take a look at the benefits and at how the country’s privacy law alignment and sustainability focus can help with growth and success.

AMS‑IX: Providing Pan‑European Low Latency Exchanges

Low latency is vital to response times, and latency depends on the routes traffic takes. Amsterdam is situated at the core of Europe’s network map and hosts one of the world’s largest peering hubs, the Amsterdam Internet Exchange (AMS‑IX). AMS‑IX interconnects over 1,200 networks and regularly handles traffic peaks of over 14 Tbps. Among its peers are Tier‑1 carriers and global content providers, which shortens cross‑continental data routes and lowers latency dramatically.

The round‑trip times out of Amsterdam are impressive. Latency tests to Frankfurt and Paris and back take roughly 10–15 ms, staying under 20 ms. Southern European destinations, such as Madrid, show a response of 30–40 ms. The table below shows some typical round‑trip delays:

| Test Destination | Round‑Trip Latency Approximation |
|---|---|
| London, UK | ~15 ms |
| Frankfurt, DE | ~10 ms |
| Paris, FR | ~16 ms |

Table. Latency information gathered from Epsilon Global Network.

Businesses with a server presence nearby stand to reap the benefits. Even with a single point of presence in Amsterdam, packet delivery through an AMS‑IX connection can work to an enterprise’s advantage. Amsterdam is already a major junction for web traffic, with a peering layer that hands traffic off directly to local destination networks. For an enterprise using an AMS‑IX connection in Amsterdam, this can be faster than a point‑to‑point web service in the same city. Web services, SaaS, e‑commerce, and streaming businesses are among those that use the connection to good effect.

At Melbicom, our outbound and inbound traffic passes through high‑capacity, multi‑redundant fiber networks that take advantage of direct peering at AMS‑IX and other low‑latency exchanges. This stability prevents bottlenecks, keeps our connections up and accessible, and delivers reliable speeds for heavy workloads that demand dependability.

Regulatory Compliance and Legal Assurance

The Netherlands has a strong commitment to data privacy, and hosting there must conform to stringent data protection laws. As an EU member state, the country applies the General Data Protection Regulation (GDPR), supplemented by local rules and oversight. Providers operating within Dutch jurisdiction are held to high standards for data handling, breach notification, and user privacy, which builds trust among end users.

With such strong regulation in Dutch laws, enterprises hosted within have better legal clarity and consistency across European markets. Typically, when operating in various locations, enterprises are faced with differing national regulations to comply with. The Netherlands’ robust implementation of EU directives gives a strong framework that ensures compliance. This is especially advantageous for those collecting or processing sensitive information, and fosters reassurance for customers who can relax knowing their personal or corporate data is stored and processed securely.

Local hosting was once favored for meeting individual data mandates, but with the Netherlands’ GDPR integration and data security priorities, there has been a real shift in perspective. Today, the merits of the advanced data‑centered ecosystems hosted in Amsterdam are being recognized by businesses. They understand increasingly that the impressive performance, coupled with its comprehensive legal protection, makes it unbeatable. At Melbicom, this protection is further reinforced through strict security controls, ISO/IEC 27001 certifications, and around‑the‑clock monitoring. Our Amsterdam data centers ensure secure, compliant workloads regardless of scale.

Sustainably Powered Data Centers

Sustainability is a subject of heated current‑day discourse, and many businesses are attempting to embrace it in order to minimize their environmental footprints. A good number of the data centers situated in the Netherlands use renewable energy sources, as the country has made a commitment to much greener energy solutions. The Dutch Data Center Association states that around 86% of the data centers in the Netherlands use wind, solar, or hydro energy, and this figure is set to increase in the coming years.

Operators are also taking on more and more 100% renewable energy contracts, innovating their practices with advanced cooling systems and recycling waste heat to increase efficiency and reduce consumption. The country’s Power Usage Effectiveness (PUE) ratios hover around the 1.2 mark, whereas competitors show PUE levels above 1.5.
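
PUE is the ratio of a facility’s total energy use to the energy consumed by its IT equipment, so the gap between 1.2 and 1.5 can be quantified directly. The sketch below is a simple worked calculation; the function names and the 1,000 kWh IT load are illustrative assumptions.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by an IT load and a PUE ratio."""
    return it_energy_kwh * pue


def energy_saving_fraction(pue_efficient: float, pue_baseline: float) -> float:
    """Share of total energy saved by the more efficient facility at equal IT load."""
    return 1 - pue_efficient / pue_baseline
```

For the same 1,000 kWh of IT load, a facility at PUE 1.2 draws about 1,200 kWh in total versus about 1,500 kWh at PUE 1.5, a saving of roughly 20% for identical compute.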

Enterprises can significantly lower their carbon footprint per compute cycle by tapping into the infrastructure of a dedicated server hosting arrangement. This helps businesses meet sustainability benchmarks and benefits the environment, which in the modern world is important to users with eco‑conscious mindsets. Melbicom’s facility, based in Amsterdam, benefits from the nation’s green initiatives, and our Tier III and Tier IV data center servers prioritize energy efficiency, lowering your environmental impact and promoting sustainability without sacrificing performance.

1 Gbps Unmetered Dedicated Servers for Cost‑Efficient Bandwidth

Cost efficiency is another major factor that makes dedicated Netherlands servers so attractive. Because Amsterdam is such a prime interconnection hub with high‑capacity bandwidth, carriers there can price transit very competitively. This higher transit capability translates into generous unmetered or high‑limit data plans.

Often, a dedicated server Netherlands option offers a 1 Gbps unmetered plan, which would be far more expensive in some other locations in Europe. With unmetered hosting, large volumes of traffic can be handled without being charged per gigabyte, which is a big advantage. For clients with demanding throughput, Melbicom can provide multi‑gigabit uplinks cost‑efficiently by leveraging the rich peering offered by the Netherlands connections, regardless of how bandwidth‑hungry the applications may be. Whether it is streaming media, content delivery, or IoT platforms, operations run smoothly without the fees snowballing.
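
To put an unmetered port in perspective, it helps to estimate how much data it could carry in a month. The sketch below computes the theoretical ceiling for a link running at a given utilization; the function name and the 30‑day month are assumptions, and sustained real‑world throughput will be lower.

```python
def max_monthly_transfer_tb(link_gbps: float, days: int = 30,
                            utilization: float = 1.0) -> float:
    """Theoretical data volume (decimal TB) a link can move in `days` days."""
    seconds = days * 86_400
    bits = link_gbps * 1e9 * seconds * utilization
    return bits / 8 / 1e12
```

Under these assumptions, a fully saturated 1 Gbps port moves about 324 TB in a 30‑day month, which shows how quickly per‑gigabyte billing would add up for the same traffic.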

Smaller markets served from local data centers are unable to match the low‑latency routes and economical high‑bandwidth packages of the mature Dutch market. These packages are also accessible; you don’t need to be a tech giant to benefit. 5 Gbps or 10 Gbps server configurations are readily available for mid‑sized enterprises and can be scaled. Companies expecting growth that need to maintain continuity and deliver high‑quality experiences will find a dedicated server Netherlands arrangement ideal for coping with fast‑growing traffic demands across Europe.

Shifting the Historical Local‑Only Mindset

In the last decade, the shift from local‑only hosting to centralized Dutch infrastructure has been accelerating. Historically, servers were fragmented and kept within their respective national borders due to barriers surrounding performance and legalities. These days, those barriers are largely non‑existent, and cross‑border links are faster than ever before.

With the introduction of high‑speed fiber routes that span entire continents, the connections between Amsterdam and all major European cities are low‑latency and consistent. Add to that the unified data protections that come under GDPR mandates, and you have a secure solution for storage and handling in every EU state. These factors combined are the force that has driven markets toward dedicated servers in the Netherlands in recent years, making it a hub for pan‑European activities.

Even many smaller markets now route their internet traffic via Amsterdam, creating a redundant “trombone routing” effect that wastes time and resources. Opting to physically host from the Netherlands simplifies infrastructure and boosts performance and speed. We have seen a rise in clients consolidating their needs at Melbicom, making the leap to a single or clustered environment in our Amsterdam data center. This shift comes with cost benefits and provides predictable uptime.

Conclusion: Achieve Pan‑European Reach Through the Netherlands

Amsterdam sits at the heart of operations as Europe’s internet capital. AMS‑IX offers superior peering, and the country’s dedication to sustainability means power‑efficient data centers. The nation’s digital operations are governed by strong regulation, which gives businesses and enterprises further advantages. For low‑latency responses that cover the whole of Europe, a dedicated server Netherlands strategy gives you the speed and power required without complicating your hosting with multiple local deployments. Hosting in Amsterdam combines the secure, high‑speed bandwidth needed for demanding workloads with compliance, while allowing you to tap into the nation’s green power efforts.

Order a Netherlands Dedicated Server

Deploy high‑performance, low‑latency servers in Amsterdam today and reach users across Europe with ease.


Singapore’s Sustainable Data Center Expansion

Singapore serves as a major data center hub, with more than 70 facilities and 1.4 GW of operational capacity. A temporary government ban on new data center construction briefly halted growth because of concerns over power supply and sustainability. This “capacity moratorium” did not affect Singapore’s standing: the country remained one of the world’s leading data center locations even while new development was paused.

After the pause, Singapore resumed data center development through programs that enforce strict environmental standards. The goals are to maximize power efficiency, promote renewable energy usage, and lower carbon emissions. Authorities approved an 80 MW pilot allocation, followed by plans for sustainable data center capacity reaching 300 MW. Singapore’s Data Centre Green Policy calls for sophisticated cooling systems, waste heat recovery, and state‑of‑the‑art infrastructure to achieve PUE levels below 1.2. As a result, the best dedicated server in Singapore sits in a world‑class Tier III or Tier IV data center built for high environmental efficiency.

The Expansion of Subsea Connectivity

Singapore is a strategic global location because it sits at the junction of major submarine cable routes linking Asia‑Pacific, Europe, and North America. This position has long made it a telecommunications gateway for the region. In 2023, 26 submarine cable systems were operating in Singapore. Upcoming installations by Google, Meta, and other companies will bring the total to more than 40 systems.

The Bifrost and Echo systems, along with other major cable projects, give Singapore direct connectivity to the US West Coast. Projects such as Asia Direct Cable and SJC2 enhance connectivity between East and Southeast Asia. These cables minimize latency across both trans‑Pacific and intra‑Asian routes, maintaining Singapore’s status as a location with minimal round‑trip times.

Singapore’s international bandwidth lets hosting providers deliver extremely fast uplink speeds to their customers. For instance, Melbicom supports up to 200 Gbps per server, which enables us to serve large streaming or transaction‑intensive platforms. The physical diversity of cables creates robust route redundancy for the region: when one cable experiences downtime, traffic can be rerouted through alternative systems. Enterprise customers benefit from consistent network performance and high availability for their Singapore dedicated servers.

These substantial infrastructure investments demonstrate Singapore’s determination to establish itself as the leading connectivity center for Asia‑Pacific. The government expects digital economy investments from submarine cable projects and data center expansions to exceed US$15 billion. The upgrades provide essential connectivity for businesses that choose to deploy their operations in the region.

Cybersecurity and Regulatory Strength

Alongside its physical infrastructure, Singapore has one of the strongest cybersecurity frameworks in the world. The Global Cybersecurity Index ranks Singapore among the world leaders for its cyber incident response, threat monitoring, and legal standards. The Cyber Security Agency of Singapore (CSA) sets baseline security requirements and maintains rapid‑response capabilities to protect data centers and other critical infrastructure.

A data center‑friendly regulatory framework is the second factor supporting the industry. The Personal Data Protection Act (PDPA) sets specific data handling rules, complemented by guidelines for electronic transactions and trust services. This combination of strong privacy protections and an adaptable regulatory environment has attracted numerous fintech, crypto, and Web3 startups. Unlike jurisdictions with ambiguous laws, Singapore takes a clear position, which creates stability.

Enterprises can host dedicated servers in Singapore with confidence because the government maintains stable legal frameworks and invests heavily in protecting digital assets. Power grids are stable, disaster recovery planning is thorough, and network protection is advanced. Together, these factors lower the risk of downtime and compliance breaches, which is why financial institutions and other compliance‑heavy organizations choose Singapore for their dedicated servers.

Latency Comparison: Singapore vs. Other Asian Hubs

Latency is a key determinant of user experience for real‑time services such as gaming, video streaming, and financial operations. Thanks to its location and its many submarine cables, Singapore offers significantly lower round‑trip times to Southeast Asian and Indian destinations than neighboring markets.

The simplified table below shows estimated round‑trip latency (in milliseconds) from Singapore and from Mumbai to major regional destinations.

| Destination | From Singapore | From Mumbai |

|---|---|---|

| Jakarta | ~12 ms | ~69 ms |

| Hong Kong | ~31 ms | ~91 ms |

Table: Approx. round‑trip latency from data centers in Singapore vs. India.

These differences matter for online gaming (which requires sub‑50 ms pings), high‑frequency trading, and HD video conferencing. Singapore’s direct connections to Europe and North America also reduce the number of intermediate network hops.

For organizations targeting regional users, a dedicated server in Singapore can significantly improve application response times. The strong local presence of top‑tier ISPs, content providers, and Internet Exchange Points (IXPs) speeds up traffic handoffs, which results in a better user experience.

Driving Demand: iGaming, Web3, and Crypto

Singapore data centers serve many industries, but several fast‑growing fields stand out. Online betting companies and online casinos serving Southeast Asian audiences need dependable, robust infrastructure for their platforms. Hosting in Singapore gives them strong redundancy, strong data protection rules, and fast network performance to regional players.

Singapore has also become a destination for Web3 and crypto firms looking to expand. The Monetary Authority of Singapore (MAS) has established regulations for digital payment and blockchain companies, which positions the nation as an attractive location for crypto exchanges, NFT marketplaces, and DeFi projects. These platforms execute complex transactions at high volumes and need powerful servers to process them. Singapore data centers offer specialized hardware configurations, including GPU‑based systems, that support compute‑intensive tasks while keeping latency low for both local and global transactions.

Enterprise SaaS, streaming services, and e‑commerce also run their central hosting infrastructure from Singapore. The fundamental reasons organizations choose Singapore remain the same: low‑latency connectivity, regulatory certainty, robust security, and proven resilience.

Conclusion: Singapore’s Edge in Dedicated Server Hosting

Singapore remains the top data center location in the Asia‑Pacific region because it balances growth with sustainability. After tying data center approvals to sustainability requirements, the country regained its standing as a leading dedicated server hosting location through new green data centers, extensive subsea cable networks, and high cybersecurity ratings. Organizations across Asia increasingly depend on powerful, low‑latency infrastructure, and Singapore delivers it.

Order a Server in Singapore

Deploy a high‑performance dedicated server in our Tier III Singapore facility and reach Asia‑Pacific users with ultra‑low latency.


Choosing the Right Web Application Hosting Strategy

Modern web deployments demand strategic infrastructure choices. Growing web traffic and rising user expectations have produced many web application hosting options: dedicated servers in global data centers, multi‑cloud structures, edge computing, and hybrid clusters. The right approach depends on workload characteristics, elasticity requirements, compliance rules, and geographic latency constraints. This article provides a selection framework for evaluating which hosting model fits.

Decision Matrix Criteria

Workload Profile

Stateful vs. stateless: Stateless services (e.g., microservices) scale horizontally with ease because they do not store state between requests. Stateful applications, such as those using Java sessions or real‑time data services, need careful handling of memory and session data.

CPU, memory, or I/O bound: A media streaming platform with huge I/O needs might prioritize high‑bandwidth servers, while an analytics tool that depends on raw computational power calls for different server specifications. For Java web applications under heavy concurrency, a dedicated server with predictable resources tends to outperform multi‑tenant virtualization.

Elasticity

Predictable vs. spiky demand: Dedicated servers excel when loads are consistent, as you can plan capacity. Cloud or container environments enable auto‑scaling to prevent bottlenecks during sudden traffic surges (e.g., product launches). A common strategy involves keeping dedicated servers operational for base capacity while activating cloud resources during times of peak demand.

Compliance and Security

Organizations processing regulated information typically need single‑tenant or verified data center hosting. Dedicated servers give you complete control, which makes security configuration and physical access easier to manage. Public clouds offer compliance certifications (HIPAA, PCI‑DSS, etc.), yet multi‑tenant environments require you to trust provider‑level isolation. Enterprises usually keep regulated data in single‑tenant or private hosting environments.

Geographic Latency

Physical distance influences response times: each 1,000 km adds roughly 10 ms of round‑trip latency. Amazon found that a 100 ms increase in response time results in a 1 % decline in sales revenue. Placing servers near user clusters is therefore essential for high performance. Some organizations also use edge computing and content delivery networks (CDNs) to bring content closer to users. Melbicom operates 20 global data centers, plus a CDN spanning 50+ cities for low‑latency access.
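The distance rule of thumb can be turned into a quick back‑of‑the‑envelope estimate. The Python sketch below is only that: it assumes the ~10 ms per 1,000 km figure is a round‑trip value, and the function name and sample distances are illustrative. Real routes add routing, queuing, and processing delay on top.

```python
MS_PER_1000_KM = 10.0  # rule of thumb from the text; assumed to be round-trip


def estimated_rtt_ms(distance_km: float) -> float:
    """Rough round-trip latency estimate based on distance alone."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return distance_km / 1000.0 * MS_PER_1000_KM


if __name__ == "__main__":
    # Illustrative distances, not measurements of any real route.
    for label, km in [("same metro", 50), ("regional", 1500), ("intercontinental", 9000)]:
        print(f"{label:>16}: ~{estimated_rtt_ms(km):.1f} ms")
```

An estimate like this is mainly useful for ruling out locations early; measured latency from real probes should drive the final placement decision.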

Comparing Hosting Approaches

| Paradigm | Strengths | Trade‑offs |

|---|---|---|

| Dedicated Servers | Single‑tenant control, consistent performance, potentially cost‑efficient at scale. Melbicom rents servers in Tier III or IV data centers with up to 200 Gbps of bandwidth. | Manual capacity planning, slower to scale than auto‑provisioned cloud. Requires sysadmin expertise. |

| Multi‑Cloud | Redundancy across providers, avoids lock‑in, can cherry‑pick specialized services. | Complexity in management, varying APIs, potential high data egress fees, multiple skill sets needed. |

| Edge Computing | Reduces latency by localizing compute and caching. Real‑time applications as well as IoT devices and content benefit most from this approach. | Complex orchestration across multiple mini‑nodes, data consistency challenges, limited resources at each edge. |

Dedicated Servers

Each dedicated server runs on its own hardware, which eliminates the “noisy neighbor” performance problems caused by other tenants. The raw computing power appeals to CPU‑ and memory‑intensive applications. With Melbicom, you can have dedicated servers deployed within hours, with bandwidth options of up to 200 Gbps per server. Costs are predictable: a fixed monthly or annual payment covers all usage. Tier III/IV facilities target uptime of 99.982–99.995 %.

The advantages of dedicated infrastructure come with more hands‑on administration. Scaling up means adding or upgrading physical machines rather than creating cloud instances. In return, workloads such as large databases and specialized Java web application stacks benefit from this direct control because they require precise OS‑level tuning.
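Uptime percentages are easier to compare once converted into allowed downtime. The short Python sketch below does that conversion for the two figures quoted above; the function name is illustrative, and the calculation assumes a 365‑day year.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def max_downtime_hours(uptime_percent: float) -> float:
    """Maximum yearly downtime (in hours) implied by an uptime target."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return HOURS_PER_YEAR * (1 - uptime_percent / 100)


if __name__ == "__main__":
    for target in (99.982, 99.995):
        hours = max_downtime_hours(target)
        print(f"{target}% uptime allows ~{hours:.2f} h (~{hours * 60:.0f} min) of downtime per year")
```

At 99.982 % the budget works out to roughly 1.6 hours per year, and at 99.995 % to roughly 26 minutes.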

Multi‑Cloud

Using multiple cloud providers reduces your dependence on any single platform. The major advantage is redundancy: simultaneous outages across all providers are extremely unlikely. Surveys report that 89 % of enterprises have adopted multi‑cloud, but the complexity is high. Juggling different APIs makes monitoring, networking, and cost optimization harder, and moving data out of clouds can become expensive. A unifying tool such as Kubernetes or Terraform helps keep deployments consistent.

Edge Computing

Edge nodes located near users process requests locally, which minimizes latency. A typical example is a CDN that caches static assets. More advanced edge platforms run serverless functions and container instances on distributed endpoints to support real‑time services. Real‑time systems, IoT analytics, and location‑based apps need local processing because they require responses within 50 milliseconds. The trade‑off is that micro‑nodes spread across many locations are hard to keep synchronized, and when a city‑level outage occurs, the network must redirect traffic to other edge nodes.

Hybrid Models

Hybrid cloud integrates on‑premises infrastructure (or dedicated servers) with public cloud. A common pattern keeps sensitive data on dedicated servers while web front‑ends run on cloud instances. Kubernetes, as a container orchestration platform, provides infrastructure independence, so identical container images can be deployed in any environment. This approach combines the cost efficiency of dedicated infrastructure with the scalability of the cloud. The main difficulty is complexity: keeping networking, security policies, and monitoring in sync across multiple infrastructures is challenging.

Why Hybrid? Many teams find that a single environment does not meet all their requirements. A cost‑conscious organization wants steady monthly fees for core capacity but also cloud flexibility for peaks. Containerization helps unify it all: each microservice can be deployed on dedicated servers or public cloud infrastructure without significant difficulty. Robust DevOps practices and observability are essential for success.

Mapping Requirements to Infrastructure

Combining environments strategically usually leads to the best results. When deciding, evaluate statefulness, compliance, latency, and growth patterns.

- Stateful and performance‑sensitive apps thrive on dedicated servers with direct hardware access.

- Unpredictable workloads are best served by auto‑scaling and container platforms in the cloud.

- Compliance‑driven data belongs on single‑tenant or private systems within specific regions.

- Latency‑critical applications get the best results from data centers near users combined with a CDN or edge computing.

Each piece of your architecture needs to match your application’s requirements to achieve resilience and high performance. Combining dedicated servers in one region with cloud instances in another and edge nodes for caching, for example, lets you achieve low latency, strong compliance, and cost optimization.

Melbicom’s Advantage

Melbicom’s Tier III and IV data centers offer dedicated servers with up to 200 Gbps of bandwidth per server for applications that need constant high throughput. You can make your solution more effective by integrating dedicated servers with cloud container orchestration and a global CDN for latency reduction. Melbicom’s adaptable infrastructure lets you build a solution around the specific requirements of your application.

Launch Dedicated Servers Fast

Deploy high‑bandwidth dedicated servers in 20 modern data centers worldwide and scale confidently.


Dedicated Server Cheap: Streamlining Costs and Reliability

For tech startups and newly opened businesses, a tight budget is often a factor when choosing hosting infrastructure. Cloud platforms do offer outstanding flexibility, yet traditional hosting remains the go‑to option for more than 75% of workloads, with dedicated servers as the golden mean that offers the benefits of both worlds.

In this article, we explore how to rent reliable yet affordable dedicated servers by choosing the right CPU generations, storage options, and bandwidth options. We also briefly recall the 2010s “low‑cost colocation” times and explain why hunting for low‑end prices without considering an SLA could be a risky practice, creating more headaches than savings.

Choose Melbicom: 1,300+ ready-to-go servers, 21 global Tier IV & III data centers, 55+ PoP CDN across 6 continents

A Look Back: Transition from Low‑Cost Colocation to Affordable Dedicated Servers

In the 2010s, an organization seeking cheap hosting would typically just pick the cheapest shared hosting, VPS, or no‑frills dedicated server. Providers built their strategies around this mindset, using older hardware in basic data center facilities and cutting costs on support. Performance guarantees were treated as a luxury, while frequent downtime was considered normal.

Today, the low‑cost dedicated server market still treats affordability as a cornerstone, yet many providers combine it with modern hardware, robust networks, and data center certifications. Operating at scale, they can offer previous‑gen CPUs, fast SSDs, or even NVMe drives with smart bandwidth plans. The result is happy customers who get reliable hosting solutions and providers that make a profit by offering great services on transparent terms.

How to Select Balanced CPU Generations

Here is the reality: when looking for cheap dedicated servers, forget about cutting‑edge CPU generations. Some still remember the days when every new generation offered double the performance. Those days are gone, and it now takes manufacturers more than five years to deliver 2x the performance. This means that choosing a slightly older CPU from the same line can cut your hosting costs while still delivering 80–90% of the performance of the latest flagship.

Per‑core / multi‑core trade‑offs. Some usage scenarios (e.g., single‑threaded applications) require solid per‑core CPU performance. If that’s your case, look for older Xeon E3/E5 models with high clock speeds.

High‑density virtualization. If your organization requires isolated virtual environments for different business services (e.g., CRM, ERP, database), a slightly older dual‑socket Xeon E5 v4 can pack a substantial number of cores at a lower cost.

Avoid outdated features. We recommend picking CPUs that support virtualization instructions (Intel VT‑x/EPT or AMD‑V/RVI). Ensure your CPU choice aligns with your RAM, storage, and network needs.

Melbicom addresses these considerations by offering more than 1,300 dedicated server configurations—including a range of Xeon chips—so you can pinpoint the best fit for your specific workloads and use cases.

How to Achieve High IOPS with Fast Storage

Picking the right storage technology is critical, as slow drives often become the bottleneck of the entire service architecture. Since the first NVMe (Non‑Volatile Memory Express) drives reached the market in 2013, they have become the go‑to option for companies that need the fastest possible data access (e.g., streaming, gaming).

NVMe drives reach over 1M IOPS (Input/Output Operations Per Second), far above the roughly 100K IOPS of SATA SSDs. They also offer significantly higher throughput: 2,000–7,000+ MB/s, compared with only 550–600 MB/s for SATA drives.

| Interface | Latency | IOPS / Throughput |

|---|---|---|

| SATA SSD (AHCI) | ~100 µs overhead | ~100K IOPS / 550–600 MB/s |

| NVMe SSD (PCIe 3/4) | <100 µs | 1M+ IOPS / 2K–7K MB/s |

SATA and NVMe performance comparison: NVMe typically delivers 10x or more IOPS.

Let’s say you are looking for a dedicated server for hosting a database or creating virtual environments for multiple business services. Even an affordable option with NVMe will deliver drastically improved performance. And if your use case also requires larger storage, you can always add on SATA SSDs to your setup to achieve larger capacity at low cost.

Controlling Hosting Expenses with Smart Bandwidth Tiers

Things are quite simple here: choosing an uncapped bandwidth plan with the maximum data‑transfer speed saves time on planning, but not money (to put it mildly). To reduce hosting bills without throttling workloads, take the time up front to decide which plan fits (unmetered, committed, or burstable) and which location(s) deliver content best.

Burstable bandwidth. Providers offering this option commonly bill on a 95th percentile model, which discards the top 5% of traffic samples in the billing period. This allows short spikes in bandwidth consumption without extra charges.

Unmetered bandwidth. What looks like a provider’s generosity at first can in reality turn out to be good old capacity overselling. In some cases, other clients will cause your bandwidth to drop; in others, you will be the one causing trouble for them.

Location matters. Placing your server closer to your clients shortens the network path, which speeds up delivery to your audience and reduces network overhead.
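To make the 95th percentile model concrete, here is a minimal Python sketch. It assumes the provider records your traffic rate at fixed intervals (five minutes is a common convention, but check your contract) and uses the nearest‑rank method to pick the billable sample. The function name and sample values are illustrative, not any provider’s actual billing code.

```python
import math
from typing import Sequence


def billable_mbps(samples_mbps: Sequence[float], percentile: float = 95.0) -> float:
    """Return the sample at the given percentile using the nearest-rank method.

    Everything above that rank (the top 5% by default) is ignored for billing.
    """
    if not samples_mbps:
        raise ValueError("need at least one traffic sample")
    if not 0 < percentile <= 100:
        raise ValueError("percentile must be in (0, 100]")
    ordered = sorted(samples_mbps)
    rank = math.ceil(len(ordered) * percentile / 100)  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical month: steady 100 Mbps with a few short 900 Mbps bursts.
    samples = [100.0] * 95 + [900.0] * 5
    print(f"Billable rate: {billable_mbps(samples)} Mbps")
```

In this hypothetical data set the bursts fall entirely within the discarded top 5%, so the billable rate stays at 100 Mbps. With five‑minute sampling over a 30‑day month (8,640 samples), the discarded portion is 432 samples, or about 36 hours of peak traffic.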

With Melbicom, you can choose the most convenient data center for your server from among 21 options located worldwide. We offer flexible bandwidth plans from affordable 1 Gbps per server connectivity options up to 200 Gbps for demanding clients.

Why Choose Providers That Operate in Modern Data Centers

Tier I/II data centers aren’t necessarily bad, and in some rare scenarios (read: unique geographical locations) they could be the only options available. In most cases, though, you can choose the location, and we recommend avoiding lower‑tier facilities. Electricity often represents up to 60% of a data center’s operating expenses, so efficient hardware and advanced cooling meaningfully lower overhead for data center operators. They in turn pass the savings to hosting providers, who can then offer more competitive prices to customers.

Melbicom operates only in Tier III/IV data centers that incorporate these optimizations. Our dedicated server options only include machines with modern Xeon CPUs that use fewer watts per unit of performance than servers with older chips and support power‑saving modes. This allows us to offer competitive prices to our customers.

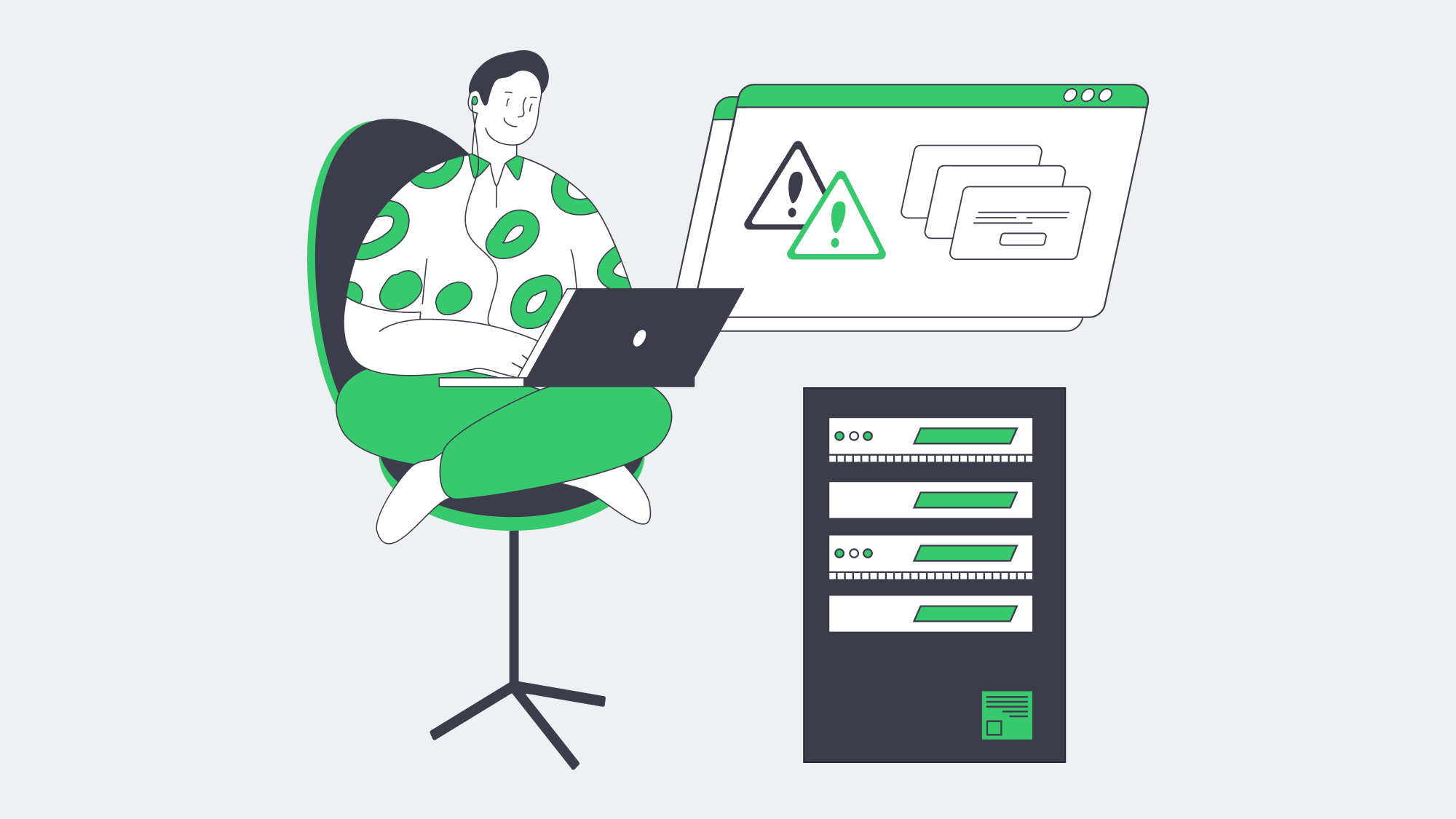

How to Streamline Monitoring to Lower TCO

We are sure you are familiar with the concept of total cost of ownership. It’s not only important to find dedicated servers with a well‑balanced cost/performance ratio; it’s also crucial to establish internal practices that will help you keep operational costs to a minimum. It doesn’t make a lot of sense to save a few hundred on a dedicated server bill and then burn 10x‑30x of those savings in system administrators’ time spent on fixing issues. This is where a comprehensive monitoring system comes into play.

Monitor metrics (CPU, RAM, storage, and bandwidth). Use popular tools like Prometheus or Grafana to reveal real‑time performance patterns.

Control logs. Employ ELK or similar stacks for root‑cause analysis.

Set up alert notifications. Ensure you receive email or chat messages when anomalies are detected. For example, a sudden CPU usage spike can trigger an email notification or even an automated failover workflow.
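The alerting idea can be illustrated with a small, tool‑agnostic Python sketch. It is not Prometheus or Grafana configuration; in practice you would express the same logic as an alert rule in your monitoring stack. The class name, the 90% threshold, and the three‑reading window are all assumptions chosen for the example.

```python
from collections import deque


class SustainedThresholdAlert:
    """Fires only when every one of the last `window` readings exceeds `threshold`.

    Requiring several consecutive readings avoids paging on a single brief spike.
    """

    def __init__(self, threshold: float, window: int) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self.threshold = threshold
        self.recent = deque(maxlen=window)

    def observe(self, value: float) -> bool:
        """Record a reading and return True if the alert should fire."""
        self.recent.append(value)
        window_full = len(self.recent) == self.recent.maxlen
        return window_full and all(v > self.threshold for v in self.recent)


if __name__ == "__main__":
    cpu_alert = SustainedThresholdAlert(threshold=90.0, window=3)
    for reading in [95, 97, 50, 92, 93, 99]:  # hypothetical CPU usage in percent
        if cpu_alert.observe(reading):
            print(f"ALERT: CPU above 90% for 3 consecutive readings (latest: {reading}%)")
```

With the sample readings, the alert fires only on the final value, because the dip to 50% resets the run of high readings.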

With out‑of‑band IPMI access offered by Melbicom, you can manage servers remotely and integrate easily with orchestration tools for streamlined maintenance. This helps businesses stay agile, keep infrastructure on budget, and avoid extended downtime, which is especially damaging when system administrator resources are limited.

Why Avoid Ultra-Cheap Providers When Selecting a Dedicated Server?

Many low‑end box hosting providers use aggressive pricing to win cost‑conscious customers but deliver subpar infrastructure or minimal support. The lack of an SLA is a red flag: without one, you are exposed to ongoing service interruptions and slow responses, with no legal protection when the host fails to meet performance expectations. Security is another major concern, because outdated servers that no longer receive firmware updates and physically insecure data center facilities put your critical operations and data in danger.

Support can be equally critical. A non‑responsive team or pay‑by‑the‑hour troubleshooting might cause days of downtime. Paying slightly more for a provider that offers round‑the‑clock support is essential for any business. For instance, we at Melbicom provide free 24/7 support with every dedicated server.

Conclusion: Optimizing Cost and Performance

Dedicated servers are essential infrastructure for organizations that require high performance, stable costs, and complete control. Combining balanced CPU generations with suitable storage and bandwidth strategies, plus automated monitoring, lets you achieve top performance at an affordable cost.

The era of unreliable basement‑level colocation has ended because modern, affordable dedicated server solutions exist. They combine robustness with low cost, especially when you select a hosting provider that invests in quality infrastructure as well as security and support.

Why Choose Melbicom

At Melbicom, we offer hundreds of ready-to-go dedicated servers located in Tier III/IV data centers across the globe with enterprise-grade security features at budget-friendly prices. Our network provides up to 200 Gbps per-server speed, and we offer NVMe-accelerated hardware, remote management capabilities, and free 24/7 support.


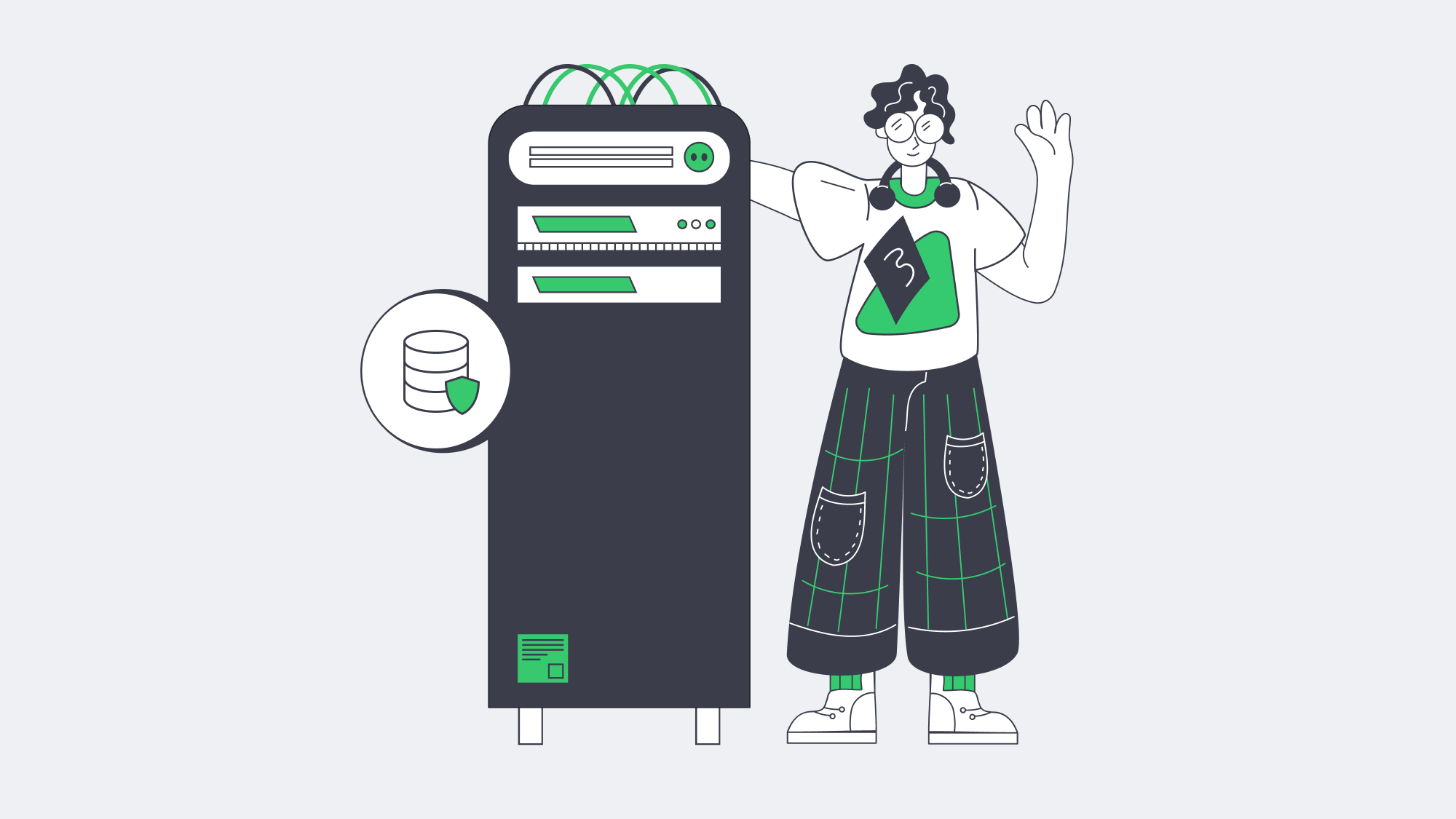

Effective Tips to Host a Database Securely with Melbicom

One does not simply forget about data breaches. Each year, their number increases, and every newly reported data breach highlights the need to secure database infrastructure, which can be challenging for architects.

Modern strategies that center around dedicated, hardened infrastructure are quickly being favored over older models.

Previously, to keep costs low and simplify things, teams may have co-located the database with application servers, but this carries risk: if the app layer is compromised, the database becomes vulnerable.

Shifting to dedicated infrastructure and isolating networks is a much better practice. This, along with rigorous IAM and strong encryption, is the best route to protect databases.


Of course, there is also compliance to consider along with monitoring and maintenance, so let’s discuss how to host a database securely in modern times.

This step-by-step guide will hopefully serve as a blueprint for the right approach to reduce the known risks and ever-evolving threats.

Step 1: Cut Your Attack Surface with a Dedicated, Hardened Server

While a shared or co-located environment might be more cost-effective, you potentially run the risk of paying a far higher price. Hosting your database on a dedicated server dramatically lowers the exposure to vulnerabilities in other services.

By running your Database Management System (DBMS) solely on its own hardware, you can prevent lateral movement by hardening things at an OS level. With a dedicated server, administrators can disable any unnecessary services and tailor default packages to lower vulnerabilities further.

The settings can be locked down by applying a reputable security benchmark, such as the CIS Benchmarks, reducing the likelihood that one compromised application provides access to the entire database.

A hardened dedicated server provides a solid foundation for a trustworthy, secure database environment. At Melbicom, we understand that physical and infrastructure security are equally important, and so our dedicated servers are situated in Tier III and Tier IV data centers. These facilities are built with redundant resources and have robust access control to ensure next-level protection and reduce downtime.

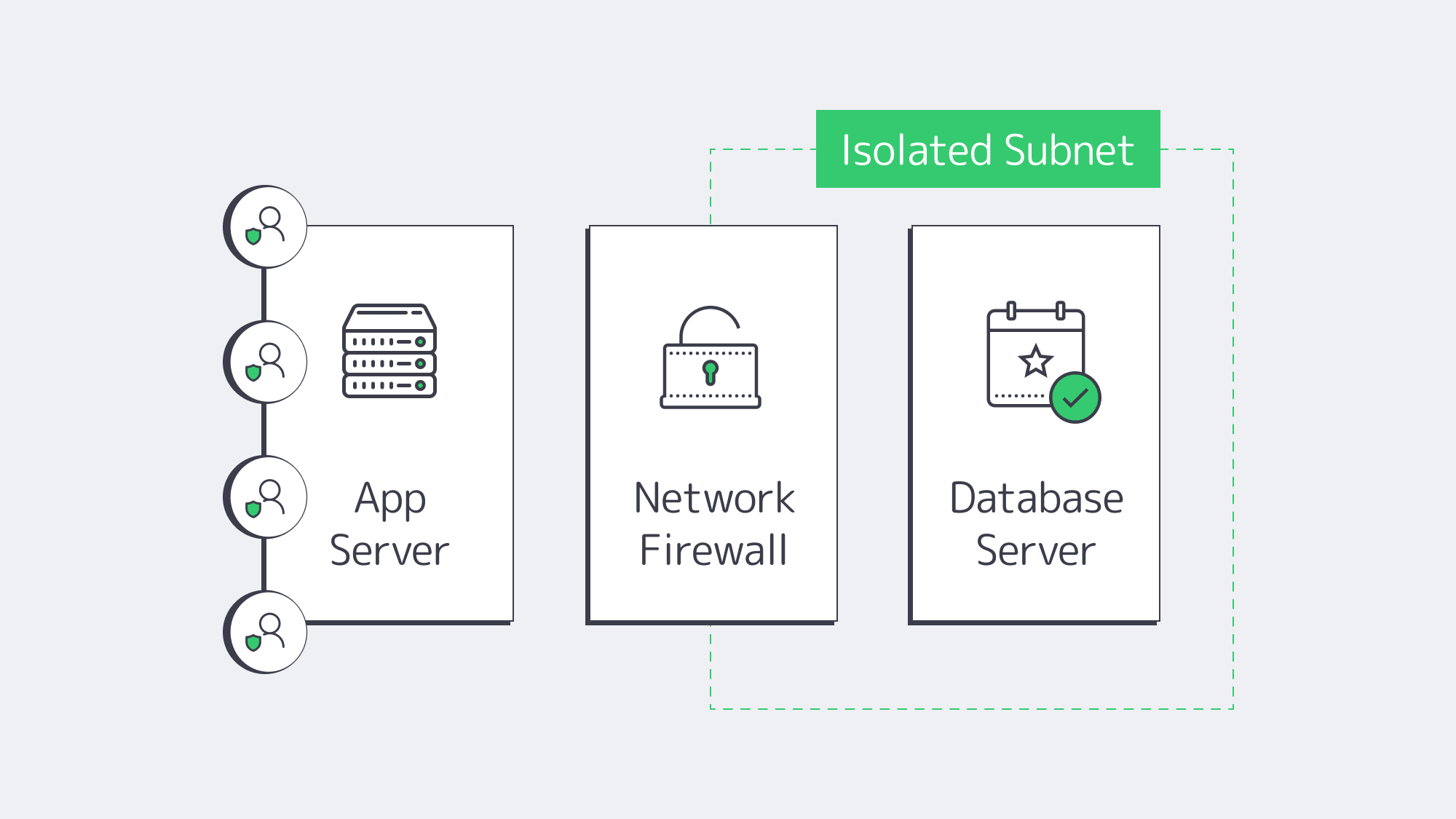

Step 2: Secure Your Database by Isolating the Network Properly

A public-facing service can expose the database unnecessarily. In addition to locking down your server, preventing public access is crucial to reducing the risk of exploitation.

By isolating the network, you essentially place the database within a secure subnet, eliminating direct public exposure.

Network isolation ensures that access to the database management system is given to authorized hosts only. Unrecognized IP addresses are automatically denied entry.

The network can be isolated by tucking the database behind a firewall in an internal subnet. A firewall will typically block anything that isn’t from a fixed range or specified host.

Another option is using a private virtual local area network (VLAN) or security groups to manage access privileges.

Administrators can also hide the database behind a VPN or jump host to complicate gaining unauthorized access.

Taking a layered approach adds extra hurdles for a would-be attacker to have to bypass before they even reach the stage of credential guessing or cracking.
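The allowlist behavior described in this step can be sketched in a few lines of Python using the standard `ipaddress` module. This only illustrates the decision a firewall rule makes; it is not a replacement for a real firewall. The subnet and jump-host addresses are hypothetical placeholders.

```python
import ipaddress

# Hypothetical allowlist: an internal application subnet and a single jump host.
ALLOWED_SOURCES = [
    ipaddress.ip_network("10.0.20.0/24"),
    ipaddress.ip_network("10.0.99.5/32"),
]


def is_allowed(source_ip: str) -> bool:
    """Return True only if the source address falls inside an allowed range."""
    address = ipaddress.ip_address(source_ip)
    return any(address in network for network in ALLOWED_SOURCES)


if __name__ == "__main__":
    for candidate in ["10.0.20.17", "10.0.99.5", "203.0.113.8"]:
        verdict = "allow" if is_allowed(candidate) else "deny"
        print(f"{candidate}: {verdict}")
```

The default-deny shape is the important part: anything not explicitly listed is rejected, which mirrors how the firewall in front of the database should be configured.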

Step 3: At Rest & In-Transit Encryption

Safeguarding databases relies heavily on strong encryption. With encryption in place, any intercepted traffic or stolen stored data remains unreadable.

Encryption needs to be implemented both at rest and in transit for full protection. Combining the two helps thwart packet interception and protects data on stolen disks, both of which are major threat vectors.

At rest, encryption can be handled with OS-level full-disk encryption such as LUKS, with database-level transparent encryption, or with both combined.

A useful option is Transparent Data Encryption (TDE), which encrypts data files automatically and is supported by commercial versions of MySQL and SQL Server.

In transit, enabling TLS (SSL) on the database secures client connections. Disable plain-text ports and require strong keys and certificates.

Certificates issued by a trusted authority let clients verify the server, keep credentials protected, and prevent sniffing of payloads.
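On the client side, the in-transit requirements translate into a TLS context that verifies the server certificate and refuses old protocol versions. The following is a minimal sketch using Python's standard `ssl` module; the function name and the optional CA file path are assumptions, and how the context is handed to a database driver varies, so check your driver's documentation.

```python
import ssl
from typing import Optional


def build_client_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Create a client-side TLS context with certificate verification enabled.

    ca_file: optional path to a CA bundle (for example, a private CA that signed
    the database server certificate). If omitted, the system trust store is used.
    """
    context = ssl.create_default_context(cafile=ca_file)
    # create_default_context() already enables hostname checking and requires
    # a valid certificate; we additionally refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


if __name__ == "__main__":
    ctx = build_client_tls_context()
    print("verify_mode:", ctx.verify_mode)
    print("check_hostname:", ctx.check_hostname)
    print("minimum_version:", ctx.minimum_version)
```

Keeping verification on is what prevents a man-in-the-middle from presenting its own certificate; disabling it to "make the connection work" removes most of the protection TLS provides.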

Depending on the environment, compliance rules may demand cryptographic controls. In instances where that is the case, separating keys and data is the best solution.

The keys can be regularly rotated by administrators to further bolster protection. That way, should raw data be obtained, the encryption, working hand in hand with key rotation, renders it unreadable.

Step 4: Strengthen Access with Strict IAM & MFA Controls

While you might have hardened your infrastructure and isolated your network, your database can be further secured by limiting who has access and restricting what each user can do.

Only database admins should have server login, with user privileges managed at the OS level through strict Identity and Access Management (IAM).

Use key-based SSH authentication wherever possible; password-based SSH is a weaker practice.

Multi-factor authentication (MFA) is important, especially for accounts with higher-level privileges. Periodic rotation of keys and credentials further strengthens access and reduces potential abuse.

The best rule of thumb is to keep things as restrictive as possible by using tight scoping within the DBMS.

Each application and user role should be separately created to make sure that it grants only what is necessary for specific operations. Be sure to:

- Remove default users

- Rename system accounts

- Lock down roles

An example of a tight-scope user role in MySQL might look something like: SELECT, INSERT, and UPDATE on a subset of tables.
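As an illustration, the Python helper below builds MySQL `GRANT` statements for exactly that kind of tightly scoped role. It is a sketch under stated assumptions: the user, host pattern, database, and table names are hypothetical, the helper only generates SQL text, and you would still review and run the statements yourself with an administrative account.

```python
import re

_IDENTIFIER = re.compile(r"^[A-Za-z0-9_]+$")
_HOST = re.compile(r"^[A-Za-z0-9_.%-]+$")
_ALLOWED_PRIVILEGES = {"SELECT", "INSERT", "UPDATE", "DELETE"}


def grant_statements(user, host, database, tables,
                     privileges=("SELECT", "INSERT", "UPDATE")):
    """Build one table-level GRANT statement per table for a narrowly scoped user."""
    for name in (user, database, *tables):
        if not _IDENTIFIER.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    if not _HOST.match(host):
        raise ValueError(f"unsafe host pattern: {host!r}")
    for privilege in privileges:
        if privilege not in _ALLOWED_PRIVILEGES:
            raise ValueError(f"privilege not permitted by this helper: {privilege!r}")
    privilege_list = ", ".join(privileges)
    return [
        f"GRANT {privilege_list} ON `{database}`.`{table}` TO '{user}'@'{host}';"
        for table in tables
    ]


if __name__ == "__main__":
    # Hypothetical application account limited to two tables and one subnet.
    for statement in grant_statements("app_orders", "10.0.20.%", "shop",
                                      ["orders", "order_items"]):
        print(statement)
```

Granting per table rather than per database keeps the role limited to the subset of tables the application actually needs.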

When you limit the majority of user privileges down to the bare minimum, you significantly reduce threat levels. Should the credentials of one user be compromised, this ensures that an attacker can’t escalate or move laterally.

Ultimately, only by combining local or Active Directory permissions with MFA can you reduce and tackle password-based exploitation.

Step 5: Continually Patch for Known Vulnerabilities

More often than not, data breaches are the result of a cybercriminal gaining access through a known software vulnerability.

Developers constantly work to patch these known vulnerabilities, but if you neglect to update frequently, then you make yourself a prime target.

No software is safe from targeting, not even the most widely used trusted DBMSs.

From time to time, projects such as PostgreSQL and MySQL publish urgent security updates to address a recent exploit.

Without these vital patches, you risk leaving your server open to remote exploitation.

Likewise, older kernels or library versions can harbor flaws that allow an attacker to gain root access.

The strategy for countering this easily avoidable and costly mistake is to put in place a systematic patching regime. This should apply to both the operating system and the database software.

Scheduling frequent hotfix checks or enabling automatic updates helps to make sure you stay one step ahead and have the current protection needed.

Admins can use a staging environment to test patches without jeopardizing production systems or their stability.
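A hotfix check can be as simple as comparing each server's installed version against the lowest version known to contain the fix. The sketch below shows the idea; the host names and version numbers are placeholders rather than real advisory data, and it assumes purely numeric dotted versions.

```python
def parse_version(text):
    """Turn '8.0.36' into (8, 0, 36) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def needs_patch(installed, minimum_patched):
    """True when the installed version is older than the patched one."""
    return parse_version(installed) < parse_version(minimum_patched)


# Hypothetical inventory of database hosts and their installed versions.
inventory = {"db-01": "8.0.34", "db-02": "8.0.36", "db-03": "8.0.9"}
minimum_patched = "8.0.36"  # placeholder, not taken from a real advisory

outdated = sorted(
    host for host, version in inventory.items()
    if needs_patch(version, minimum_patched)
)
print(outdated)
```

Comparing tuples of integers avoids the string-comparison trap where "8.0.9" would sort after "8.0.36".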

Step 6: Automate Regular Backups

Your next step to a secure database is to implement frequent and automated backups. Scheduling nightly full dumps is a great way to cover yourself should the worst happen.

If your database changes are heavy, then this can be supplemented throughout the day with incremental backups as an extra precaution.
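For a MySQL server, the nightly full dump could be driven by a small script like the sketch below, which only builds the `mysqldump` command with a timestamped output file. The backup directory is a hypothetical path, and the sketch assumes credentials come from an option file rather than the command line.

```python
from datetime import datetime, timezone
from pathlib import PurePosixPath


def dump_command(backup_dir, now=None):
    """Build a mysqldump command that writes a timestamped full dump."""
    now = now or datetime.now(timezone.utc)
    target = PurePosixPath(backup_dir) / f"full-{now:%Y%m%dT%H%M%SZ}.sql"
    return [
        "mysqldump",
        "--single-transaction",  # consistent snapshot for InnoDB tables
        "--all-databases",
        f"--result-file={target}",
    ]


# Hypothetical backup directory and a fixed time for a repeatable example.
command = dump_command(
    "/var/backups/mysql",
    datetime(2025, 6, 1, 2, 0, 0, tzinfo=timezone.utc),
)
print(" ".join(command))
```

A scheduler such as cron could then execute the command, for example through `subprocess.run(command, check=True)`, so that a failed dump raises an error instead of passing silently.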

By automating regular backups, you are protecting your organization from catastrophic data loss, whether the cause is an in-house accident, a hardware failure, or an attack by a malicious entity.

To make sure your available backups are unaffected by a local incident, they should be stored off-site and encrypted.

The “3-2-1 rule” is a good security strategy when it comes to backups. It promotes the storage of three copies, using two different media types, with one stored offsite in a geographically remote location.

Most security-conscious administrators will have a locally stored backup for quick restoration, one stored on an internal NAS, and a third off-site.
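The rule is easy to check mechanically. The following sketch models each backup copy and tests a backup plan against the three conditions; the copy names and media labels are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    name: str
    media: str      # e.g. "disk", "nas", "object-storage"
    offsite: bool


def satisfies_3_2_1(copies):
    """Three copies, two media types, at least one copy off-site."""
    return (
        len(copies) >= 3
        and len({copy.media for copy in copies}) >= 2
        and any(copy.offsite for copy in copies)
    )


# Hypothetical plan: local disk, internal NAS, and a remote copy.
plan = [
    BackupCopy("local-disk", "disk", offsite=False),
    BackupCopy("internal-nas", "nas", offsite=False),
    BackupCopy("remote-site", "object-storage", offsite=True),
]
print(satisfies_3_2_1(plan))
```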

Regularly testing your backups and the restoration process shouldn’t be overlooked.

Testing with dry runs when the situation isn’t dire presents the opportunity to minimize downtime should the worst occur. You don’t want to learn your backup is corrupted when everything is at stake.
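One inexpensive check that can run alongside those dry runs is comparing a backup file against a checksum recorded when it was created. The sketch below uses SHA-256 from Python's standard library on a throwaway file; it catches silent corruption but is not a substitute for an actual restore test.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large dumps do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_is_intact(path, expected_digest):
    """True when the file still matches the digest recorded at backup time."""
    return sha256_of(path) == expected_digest


# Demonstration with a temporary file standing in for a real dump.
with tempfile.TemporaryDirectory() as tmp:
    backup = Path(tmp) / "full.sql"
    backup.write_bytes(b"CREATE TABLE demo (id INT);\n")
    recorded = sha256_of(backup)
    intact_before = backup_is_intact(backup, recorded)
    backup.write_bytes(b"corrupted")  # simulate damage to the stored copy
    intact_after = backup_is_intact(backup, recorded)

print(intact_before, intact_after)
```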

| Strategy | Frequency | Recommended Storage Method |
|---|---|---|
| Full Dump | Nightly | Local + Offsite |
| Incremental Backup | Hourly or more often | Encrypted repository, such as an internal NAS |
| Restore Test (Dry Run) | Monthly | Staging Environment |

Remember, backups contain sensitive data and should be treated as high-value targets even though they aren’t in current production. To safeguard them and protect against theft, you should always keep backups encrypted and limit access.

Step 7: Monitor in Real-Time

The steps thus far have been preventative in nature and go a long way to protect, but even with the best measures in place, cybercriminals may outwit systems. If that is the case, then rapid detection and a swift response are needed to minimize the fallout.

Vigilance is key to spotting suspicious activity and tell-tale anomalies that signal something might be wrong.

Real-time monitoring is the best way to stay on the ball. It uses logs and analytics and can quickly identify any suspicious login attempts or abnormal spikes in queries, requests, and SQL commands.

You can use a Security Information and Event Management (SIEM) tool or platform to help with logging all connections in your database and tracking any changes to privileges. These tools are invaluable for flagging anomalies, allowing you to respond quickly and prevent things from escalating.

Alert thresholds can be configured so that the security team is notified when one of the following indicators of possible abuse or exploitation is detected:

- Repeated login failures from unknown IPs

- Excessive data exports during off-hours

- Newly created privileged accounts
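The first of these indicators can be sketched as a simple threshold rule over login events. The event format, the IP addresses, and the threshold of five failures are assumptions for illustration; a SIEM platform would apply similar logic to its own log schema.

```python
from collections import Counter


def flag_repeated_failures(events, known_ips, threshold=5):
    """Return unknown IPs with at least `threshold` failed logins."""
    failures = Counter(
        event["ip"]
        for event in events
        if event["outcome"] == "failure" and event["ip"] not in known_ips
    )
    return sorted(ip for ip, count in failures.items() if count >= threshold)


# Hypothetical login events (documentation-range IP addresses).
events = (
    [{"ip": "203.0.113.7", "outcome": "failure"}] * 6
    + [{"ip": "10.0.0.5", "outcome": "failure"}] * 8      # known internal host
    + [{"ip": "198.51.100.2", "outcome": "failure"}] * 2  # below the threshold
    + [{"ip": "203.0.113.7", "outcome": "success"}]
)

alerts = flag_repeated_failures(events, known_ips={"10.0.0.5"})
print(alerts)
```

The other two indicators would follow the same pattern, with export volume or account-creation events replacing failed logins.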

The logs can also be analyzed to review response plans, and they are invaluable if you suffer a breach. Regularly reviewing the data helps prevent complacency, which is often why real incidents get ignored until it’s too late.

Step 8: Compliance Mapping and Staying Prepared for Audits

External mandates are often what truly dictate security controls. With compliance mapping, you can make sure each measure you have in place aligns with obligations such as those set out by PCI DSS, GDPR, or HIPAA.

Isolation requirements are met by using a dedicated server, and confidentiality rules are handled with the introduction of strong encryption.

Access control mandates are addressed with IAM and MFA, and you manage your vulnerabilities by automating patch updates. By monitoring in real time, you take care of logging and incident response expectations.
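The mapping described above can be kept as a small lookup table and used to spot gaps before an audit. This is only a sketch: the requirement and control labels paraphrase this guide and are not clause references from PCI DSS, GDPR, or HIPAA.

```python
# Requirement category -> control that addresses it, as described in this guide.
CONTROL_MAP = {
    "isolation": "dedicated server",
    "confidentiality": "strong encryption",
    "access control": "IAM and MFA",
    "vulnerability management": "automated patching",
    "logging and incident response": "real-time monitoring",
}


def uncovered_requirements(implemented_controls):
    """List requirement categories whose control is not yet in place."""
    return sorted(
        requirement
        for requirement, control in CONTROL_MAP.items()
        if control not in implemented_controls
    )


# Hypothetical environment with three of the five controls implemented.
gaps = uncovered_requirements(
    {"dedicated server", "strong encryption", "IAM and MFA"}
)
print(gaps)
```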

The logs and records produced by each of these measures serve as evidence of your operational security during an audit. They help you identify where personal or cardholder data is stored, confirm that it is encrypted, and demonstrate the privileged access controls in place.

It also helps to prepare for audits if you can leverage infrastructure certifications from your hosting provider. Melbicom operates with Tier III and Tier IV data centers, so we can easily supply evidence to demonstrate the security of our facilities and network reliability.

Secure Database Hosting: Key Takeaways and Next Steps

Co-locating is a risky practice. If you take security seriously, then it’s time to shift to a modern, security-forward approach that prioritizes a layered defense.

The best DBMS practices start with a hardened, dedicated server, operating on an isolated secure network with strict IAM in place.

Strategies such as patch updates, backup automation, and monitoring help you stay compliant and give you the evidence you need to handle an audit confidently.

With the steps outlined in this guide, you now know how to host a database securely enough to withstand the threats a modern organization is most likely to face. Building security from the ground up transforms the database from a possible entry point for hackers to a well-guarded vault.

Why Choose Melbicom?

Our worldwide Tier III and Tier IV facilities house advanced, dedicated, secure servers that operate on high-capacity bandwidth. We can provide a fortified database infrastructure with 24/7 support, empowering administrators to maintain security confidently. Establish the secure foundations you deserve.