
Singapore Servers as Your APAC Low-Latency Anchor

For many global teams, Asia-Pacific is where latency budgets go to die. The region already has the world’s largest online population, yet penetration is still catching up — which means user growth is shifting east while expectations for “instant” experiences keep rising.

At the same time, Asia-Pacific’s e‑commerce market alone is projected to exceed USD 7 trillion by 2030, making it the world’s largest and fastest‑growing digital commerce region. Real‑time payments, streaming, multiplayer games, and SaaS collaboration tools are all piling onto the network. For any product with global ambitions, slow paths into Southeast Asia and North Asia are now an existential risk, not just a UX blemish.

Underneath that demand, data center and interconnection investment is exploding. The broader Asia-Pacific data center market is expected to grow from about USD 102.45 billion in 2024 to USD 174.81 billion by 2030, at a 9.3% CAGR. Within that, Singapore is a clear outlier: its data center market was valued at roughly USD 948.9 million in 2024 and is forecast to reach USD 2.78 billion by 2033, growing at 12.1% annually.


Singapore’s role is not just about market size. It already hosts more than 1.4 GW of installed data center capacity and is recognized by regulators and industry analysts as a regional hub, backed by a dense regulatory and sustainability framework designed specifically for data centers. At the physical layer, Singapore sits on top of roughly 30 international submarine cable systems with a combined design capacity of about 44.8 Tbit/s, turning it into one of the most interconnected nodes on the planet.

We looked at submarine cable maps, regulatory briefings, data center market forecasts, and interconnection growth figures across Asia-Pacific. Our conclusion: if you need consistent sub‑100 ms round‑trip times across the region without building a custom multi‑country footprint from scratch, Singapore‑based dedicated servers remain the most straightforward backbone for low‑latency reach. This post breaks down how to use it effectively.

Server Hosting in Singapore: a Low-Latency Gateway to Asia-Pacific

At the network level, Singapore behaves like an edge location for most of Asia-Pacific. According to industry and policy analysis, the city‑state handles over 99% of its international data traffic via subsea cables and is currently served by around 28 active cable systems, with at least 13 more in various stages of planning or construction.

That physical density is one reason Singapore’s digital economy already contributes an estimated 17.7% of national GDP — about SGD 113 billion (roughly USD 88 billion). It’s also why latency from a well‑peered Singapore data center to major Asia-Pacific metros can feel “local” even when you’re crossing national borders.

From a practical standpoint, here’s what we at Melbicom typically see when we map latency from our Singapore facility across the region over high‑quality transit and peering:

| Destination Metro | Typical RTT From Singapore (ms) | Practical Use Case |
|---|---|---|
| Jakarta | 30–40 | Mobile games, real‑time payments |
| Bangkok | 35–45 | SaaS collaboration, streaming |
| Manila | 40–50 | Web apps, e‑commerce storefronts |
| Tokyo | 65–80 | Multiplayer games, trading frontends |
| Sydney | 90–110 | Collaboration, content, back‑office SaaS |

These are not lab‑perfect numbers — they’re realistic ranges across premium carriers under normal conditions. For most interactive workloads, staying below ~100 ms RTT is enough to feel “snappy”; below 50 ms tends to feel instant for typical users.

To put that in context: Singapore consistently ranks at or near #1 globally for fixed broadband speeds, with median download speeds above 500 Mbps and single‑digit millisecond last‑mile latency. That local access quality, combined with cable density, is what makes Singapore such a strong control point for dedicated server hosting across Asia.

Melbicom’s Singapore data center sits directly on this fabric: a Tier III facility with 16 MW of power, 1–200 Gbps of dedicated bandwidth available per server, and more than 100 ready‑to‑go dedicated configurations at any given time. When you pair that with a global network spanning more than 20 transit providers and dozens of internet exchange points, you get a platform where the physical distance is as short as the map allows — and the logical distance (hops, congestion, packet loss) is carefully minimized.

Visualizing Latency From Singapore

For engineering teams, the takeaway is simple: if you anchor your Asia-Pacific workloads on a well‑connected dedicated server in Singapore, your “worst” latencies across most of the region will often be comparable to intra‑regional latencies in Europe or North America.

How Do I Choose a Reliable Dedicated Server in Singapore?

Choosing a reliable dedicated server in Singapore comes down to three layers: the physical facility (tier, power, connectivity), the network (transit quality, IXPs, routing policy), and the server profile (CPU, RAM, storage, bandwidth). Focus on latency budgets and traffic patterns first, then pick hardware and bandwidth that can safely absorb peak load with room for growth.

1. Start With Latency and Peering, Not Just Specs

For low‑latency work, a server in SG needs more than a fast CPU. You want:

- Dense, diverse connectivity. Singapore’s subsea hub status only helps you if your provider actually buys quality transit and peers broadly. In our case, Melbicom connects to 20+ upstream carriers and nearly thirty internet exchange points globally, so traffic to users in Jakarta, Seoul, or Sydney can often take direct or near‑direct paths.

- Guaranteed bandwidth, without oversubscription ratios. Oversubscribed outbound links create surprise latency spikes. Melbicom exposes up to 200 Gbps per server in the Singapore facility, with committed capacity rather than “best effort” share‑and‑pray models.

If you’re shortlisting dedicated server offers in Singapore, always ask for looking‑glass URLs, test IPs, and traceroutes before you sign. Static marketing claims won’t tell you how your real routes behave.
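Once a provider hands you a test IP, one quick way to sanity-check the path from your own vantage points is to time TCP handshakes, since a completed connect() takes roughly one round trip. The sketch below uses only the Python standard library and is a rough probe, not a replacement for ping, mtr, or a full traceroute; the address 203.0.113.10 is a documentation placeholder for whatever test IP you are given, and port 443 is an assumption.

```python
import math
import socket
import statistics
import time


def tcp_rtt_samples(host: str, port: int, count: int = 10,
                    timeout: float = 2.0) -> list[float]:
    """Time `count` TCP handshakes to host:port and return each duration in ms.

    connect() returns once SYN and SYN/ACK have been exchanged, so its duration
    approximates one network round trip plus a little local overhead. Pass an
    IP address so DNS lookup time is not included.
    """
    samples = []
    for _ in range(count):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout):
            pass  # close immediately; only the handshake time matters
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples: list[float]) -> dict[str, float]:
    """Median, nearest-rank p95, and standard deviation (a rough jitter proxy), in ms."""
    ordered = sorted(samples)
    p95 = ordered[math.ceil(0.95 * len(ordered)) - 1]
    return {
        "median_ms": statistics.median(ordered),
        "p95_ms": p95,
        "stdev_ms": statistics.pstdev(ordered),
    }


# Usage against a provider test IP (placeholder documentation address):
# print(summarize(tcp_rtt_samples("203.0.113.10", 443)))
```

Run it from the networks your users actually sit on (or from cloud instances in those metros) and compare the median and p95 against the ranges a provider quotes; a wide gap between the two usually points at congestion or a poor route.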

2. Match Server Profiles to Traffic Shape

From Melbicom’s Singapore catalog, you can usually choose among:

- CPU families: modern Intel Xeon and AMD EPYC generations suitable for CPU‑heavy game servers, real‑time analytics, or microservices backends.

- Storage tiers: pure NVMe for latency‑critical databases and queues; mixed SSD/HDD for large asset libraries or logs.

- Network profiles: from 1 Gbps ports for moderate traffic up to 100–200 Gbps for content‑heavy or multiplayer workloads.

We keep hundreds of servers ready for 2‑hour activation globally, with more than 100 stock configurations in Singapore alone. Custom server configurations — for example, high‑frequency CPUs plus large NVMe arrays — can usually be deployed in three to five business days.

For global teams, the sweet spot is often: multi‑core Xeon, 128–256 GB RAM, NVMe primary storage, and at least 10 Gbps dedicated bandwidth per node. That’s enough to consolidate multiple Kubernetes worker roles or large game shards without creating new bottlenecks.
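A back-of-envelope way to check whether 10 Gbps per node fits your own traffic is to multiply peak concurrent sessions by per-session bitrate, then size the port so that peak stays well below line rate. The sketch below is an illustration only: the port-size menu, the per-session bitrate, and the 60% target utilization are assumptions to replace with your own measurements and your provider's actual options.

```python
# Hypothetical menu of port speeds in Gbps; check the options your provider actually offers.
PORT_SIZES_GBPS = (1, 10, 40, 100, 200)


def required_gbps(peak_sessions: int, kbps_per_session: float) -> float:
    """Aggregate peak egress in Gbps (1 Gbps = 1,000,000 kbps)."""
    return peak_sessions * kbps_per_session / 1_000_000


def pick_port(peak_sessions: int, kbps_per_session: float,
              target_utilization: float = 0.6) -> int:
    """Smallest port that keeps peak egress at or below target_utilization of line rate."""
    needed = required_gbps(peak_sessions, kbps_per_session) / target_utilization
    for size in PORT_SIZES_GBPS:
        if size >= needed:
            return size
    # Larger than any single port: spread the load across more servers.
    raise ValueError(f"needs ~{needed:.0f} Gbps; scale out across servers")
```

For example, 20,000 concurrent sessions at an assumed 100 kbps each come to 2 Gbps at peak, so `pick_port(20_000, 100)` returns 10: a 10 Gbps port runs at about 20% utilization at peak, leaving room for bursts and growth.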

3. Demand Operational Maturity

Specs are easy to copy; operations aren’t. When you look at Singapore dedicated server hosting providers, verify:

- 24/7 support and on‑site engineers. Singapore’s tight regulatory environment means most facilities are robust; the real differentiator is how fast someone can swap a failing DIMM or debug a networking issue at 03:00 local time. Melbicom offers free, round‑the‑clock technical support and on‑site component replacement.

- BGP session support. If you bring your own IP space, you should be able to establish a BGP session to announce prefixes and optimize routing without resorting to GRE tunnels or hacks. Melbicom provides BGP session support across all data centers, including Singapore.

- API and automation. For DevOps‑driven teams, an API‑driven control plane, KVM over IP, and predictable, scriptable naming for your Singapore nodes are as important as raw clock speed.
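Before requesting a BGP session for your own IP space, it helps to confirm that the prefixes you plan to announce are likely to propagate. The sketch below uses Python's standard `ipaddress` module to flag two common problems: prefixes more specific than /24 (IPv4) or /48 (IPv6), which many networks filter by convention rather than by any formal standard, and address space that is not globally routable. It does not check RPKI or IRR records, which you should also have in place.

```python
import ipaddress

# Widely used filtering convention: many networks drop IPv4 prefixes longer
# than /24 and IPv6 prefixes longer than /48.
MAX_PREFIXLEN = {4: 24, 6: 48}


def announcement_problems(prefix: str) -> list[str]:
    """Return reasons a prefix is likely to be filtered; an empty list means none found."""
    try:
        # strict=True rejects prefixes with host bits set, e.g. 10.0.0.1/8
        net = ipaddress.ip_network(prefix, strict=True)
    except ValueError as exc:
        return [f"invalid prefix: {exc}"]
    problems = []
    limit = MAX_PREFIXLEN[net.version]
    if net.prefixlen > limit:
        problems.append(f"/{net.prefixlen} is more specific than /{limit}")
    if not net.is_global:
        problems.append("not globally routable address space")
    return problems
```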

What Are the Benefits of Hosting a Website on Singapore Dedicated Servers?

Hosting a website or application on Singapore dedicated servers places your origin close to the fastest‑growing digital economies, on top of some of the world’s densest subsea connectivity and low‑latency interconnection. The result: better page load times, higher conversions, and more consistent performance across APAC without a sprawling footprint.

Closer to the Fastest-Growing Digital Economies

Asia-Pacific will continue to dominate e‑commerce growth, with the region’s online commerce volume expected to surpass USD 6 trillion by the end of the decade. Southeast Asia alone is projected to see its internet economy approach USD 1 trillion in GMV by 2030.

Running your origin in Singapore means your packets spend less time bouncing through distant gateways to reach those economies. For global products, that translates directly into fewer rage‑quits, higher session lengths, and better in‑app monetization.

Latency, UX, and Revenue Are Directly Linked

The correlation between speed and business metrics is no longer theoretical. Deloitte and Google’s “Milliseconds Make Millions” research found that a 0.1‑sec improvement in mobile site speed increased retail conversion rates by 8.4% and travel conversions by 10.1%.

If your Seoul or Jakarta users are running 200–250 ms away from an origin in Western Europe, shaving even 80–100 ms by moving that origin to a Singapore dedicated server is often the cleanest way to unlock similar uplifts — especially once you’ve already exhausted front‑end optimization.
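RTT savings matter more than they first appear because a cold HTTPS request pays for several round trips before the first byte arrives: one for the TCP handshake, one for a full TLS 1.3 handshake (two for TLS 1.2), and one for the request itself. The sketch below multiplies that out. It assumes no TCP Fast Open and no TLS session resumption or 0-RTT, and it ignores DNS, server processing time, and bandwidth, so treat the result as a simplified estimate of the network portion of time to first byte.

```python
# Round trips before the first response byte on a brand-new HTTPS connection,
# assuming no TCP Fast Open and no session resumption / 0-RTT.
HANDSHAKE_RTTS = {
    "tls1.3": 1 + 1 + 1,  # TCP handshake + TLS 1.3 handshake + HTTP request
    "tls1.2": 1 + 2 + 1,  # TCP handshake + TLS 1.2 handshake + HTTP request
}


def cold_ttfb_ms(rtt_ms: float, tls: str = "tls1.3") -> float:
    """Network-only time to first byte for a cold connection (ignores DNS and server time)."""
    return HANDSHAKE_RTTS[tls] * rtt_ms


def cold_ttfb_saving_ms(rtt_before_ms: float, rtt_after_ms: float,
                        tls: str = "tls1.3") -> float:
    """How much a lower RTT shortens cold-connection time to first byte."""
    return cold_ttfb_ms(rtt_before_ms, tls) - cold_ttfb_ms(rtt_after_ms, tls)
```

With illustrative numbers, cutting RTT from 240 ms to 150 ms saves 270 ms of cold-connection time to first byte over TLS 1.3 (`cold_ttfb_saving_ms(240, 150)`), and 360 ms over TLS 1.2, which is already more than the 0.1‑second improvement in the study cited above.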

Low-Latency Networks Are the New Baseline

Equinix’s Global Interconnection Index, cited by Telecom Review Asia, projects that interconnection bandwidth in Asia-Pacific will grow at a 46% CAGR, reaching about 6,002 Tbps. That growth is being driven by e‑commerce, digital payments, and real‑time applications — exactly the workloads that suffer most from high latency.

In parallel, surveys show that over 70% of data centers in Asia are now connected to networks offering more than 100 Mbps to enterprises, underlining how quickly “good enough” bandwidth is becoming table stakes.

For your stack, that means user expectations are calibrated by local best‑in‑class experiences. If a competitor’s API or game server is physically closer — or better peered into those networks — your product will feel sluggish by comparison.

Designing a Low-Latency Architecture on Singapore Servers

Once you’ve chosen a provider and a hardware profile, the next step is architectural. A single high‑end box in Singapore can still underperform if you treat it like “just another origin.” The goal is to use your Singapore node as a latency anchor for the region.

Map Latency Budgets Across Asia-Pacific

Start by defining clear latency budgets:

- Real‑time PvP or esports: target sub‑50 ms; acceptable up to ~80 ms.

- Competitive SaaS UX (dashboards, live collaboration): target sub‑150 ms; acceptable up to ~200 ms.

- Turn‑based or non‑interactive content: more tolerant, but still benefits from lower jitter.

Overlay those budgets on your traffic map. Most of Southeast Asia and parts of North Asia fall comfortably inside the “green zone” when served from Singapore; farther‑edge users (e.g., rural Australia, Pacific islands) may still exceed some thresholds. That’s where you decide whether one Singapore origin plus CDN is enough, or whether you add secondary footprints (e.g., Seoul or Sydney) as you scale.
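To make the overlay concrete, you can encode each workload's budget and check it against expected RTTs per metro. The sketch below uses the budgets above and the upper end of each typical range from the RTT table earlier in this post as illustrative inputs; the workload keys and the green/amber/red labels are assumptions, and in practice you would feed in your own measured percentiles.

```python
# Latency budgets from the list above: (target_ms, acceptable_ms).
BUDGETS_MS = {
    "realtime_pvp": (50, 80),
    "saas_ux": (150, 200),
}

# Upper end of the typical RTT ranges from Singapore quoted in this post.
RTT_FROM_SG_MS = {
    "Jakarta": 40,
    "Bangkok": 45,
    "Manila": 50,
    "Tokyo": 80,
    "Sydney": 110,
}


def classify(rtt_ms: float, workload: str) -> str:
    """'green' at or below target, 'amber' at or below acceptable, otherwise 'red'."""
    target, acceptable = BUDGETS_MS[workload]
    if rtt_ms <= target:
        return "green"
    if rtt_ms <= acceptable:
        return "amber"
    return "red"


def overlay(workload: str) -> dict[str, str]:
    """Classify every metro for one workload, e.g. to spot where a second region is needed."""
    return {metro: classify(rtt, workload) for metro, rtt in RTT_FROM_SG_MS.items()}
```

With these inputs, `overlay("realtime_pvp")` marks Jakarta, Bangkok, and Manila green, Tokyo amber, and Sydney red, while every metro is green for `saas_ux`. That is the kind of result that would justify a secondary footprint in Sydney for competitive games but not for dashboards.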

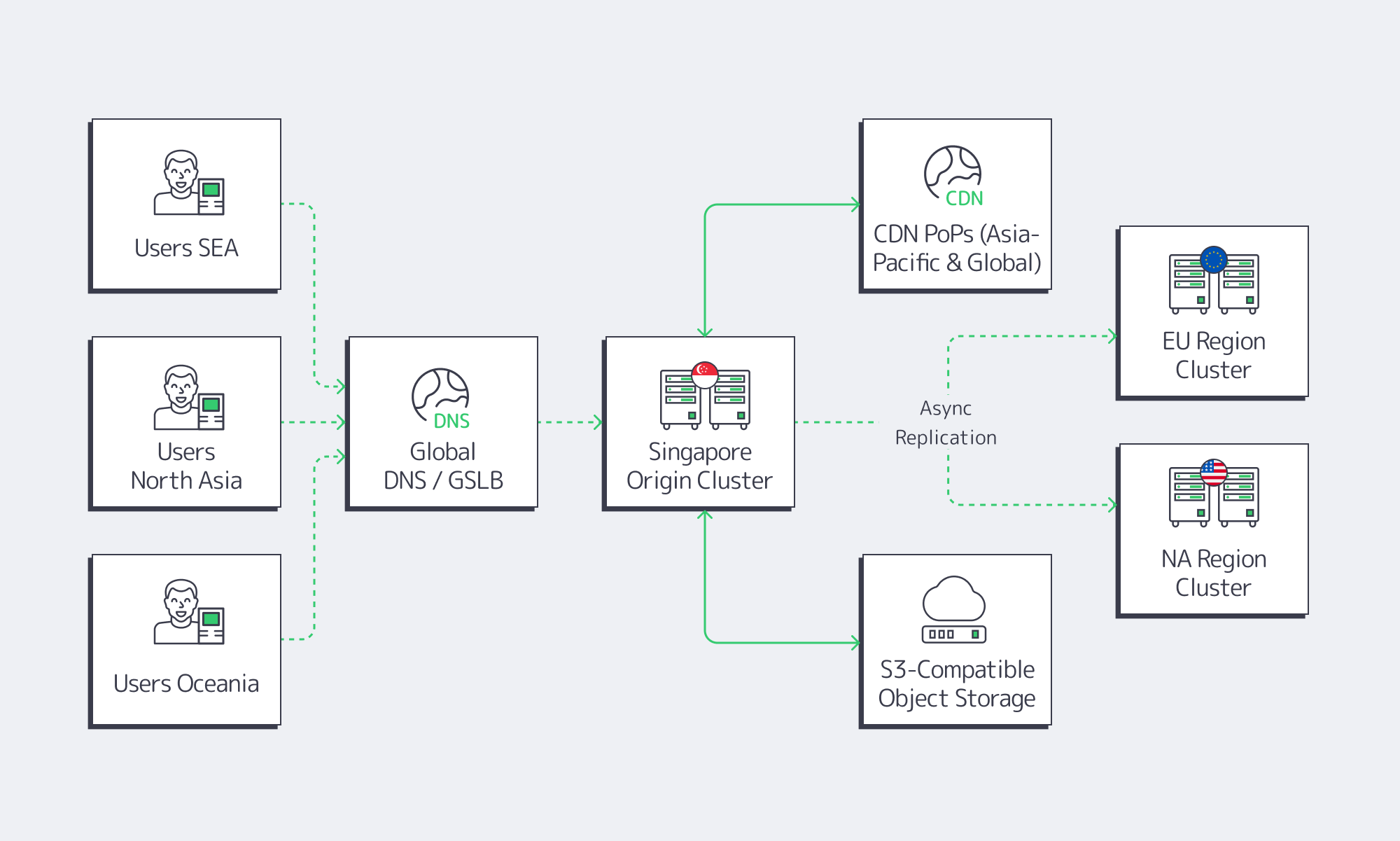

Use a Singapore Dedicated Server as APAC Origin, Not a Global Monolith

A common mistake is to treat Singapore as a universal global origin. That leads to sub‑optimal paths for Europe or the Americas. Instead:

- Use Singapore as the Asia-Pacific origin in a broader topology where Europe and North America have their own origin clusters.

- Pin APAC DNS responses (or GSLB rules) so that users from the region hit apac.example.com, which resolves to Singapore or nearby PoPs.

- Keep cross‑regional chatter to a minimum by pushing only the state that truly needs to be global (billing, accounts, leaderboards) to a multi‑region datastore.
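The DNS/GSLB pinning rule boils down to a lookup from the client's continent to a regional hostname, plus a fallback when a region fails its health checks. The sketch below shows that decision in plain Python; apac.example.com comes from the text, while the eu./na. hostnames, the continent mapping, and the fallback order are hypothetical. In production this logic lives in your GeoDNS or GSLB provider's rule set, not in application code.

```python
# Hypothetical regional endpoints; apac.example.com resolves to the Singapore origin.
REGIONAL_HOSTS = {
    "AS": "apac.example.com",  # Asia
    "OC": "apac.example.com",  # Oceania
    "EU": "eu.example.com",
    "AF": "eu.example.com",
    "NA": "na.example.com",
    "SA": "na.example.com",
}
DEFAULT_HOST = "na.example.com"

# Hypothetical order in which to try other regions if the primary is unhealthy.
FALLBACK_ORDER = {
    "apac.example.com": ("na.example.com", "eu.example.com"),
    "eu.example.com": ("na.example.com", "apac.example.com"),
    "na.example.com": ("eu.example.com", "apac.example.com"),
}


def pick_host(continent_code: str, healthy: set[str]) -> str:
    """Return the hostname a GeoDNS/GSLB rule would hand to a client on this continent."""
    primary = REGIONAL_HOSTS.get(continent_code.upper(), DEFAULT_HOST)
    for host in (primary, *FALLBACK_ORDER[primary]):
        if host in healthy:
            return host
    # Nothing reports healthy: fail static to the primary rather than answer nothing.
    return primary
```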

This pattern is especially useful for Asia‑focused gaming workloads on dedicated servers: shard per region, use Singapore for Southeast Asia and much of East Asia, and sync only global state asynchronously.
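Shard-per-region with asynchronous global sync can be sketched with an in-process queue: writes commit to the local Singapore shard immediately, and only keys under a global allowlist (accounts, billing, and leaderboards, as in the list above) are queued for background replication. `RegionalShard`, the key prefixes, and the `replicate` callback are hypothetical stand-ins for your real datastore and replication client.

```python
import asyncio
from typing import Awaitable, Callable

# Only keys with these prefixes are replicated to the multi-region datastore.
GLOBAL_PREFIXES = ("account:", "billing:", "leaderboard:")


class RegionalShard:
    """Hypothetical in-memory stand-in for a Singapore-local datastore."""

    def __init__(self, replicate: Callable[[str, str], Awaitable[None]]) -> None:
        self.data: dict[str, str] = {}
        self.outbox: asyncio.Queue = asyncio.Queue()
        self._replicate = replicate

    async def write(self, key: str, value: str) -> None:
        # The local write completes without waiting on any cross-region round trip.
        self.data[key] = value
        if key.startswith(GLOBAL_PREFIXES):
            await self.outbox.put((key, value))

    async def sync_forever(self) -> None:
        # Background task: drain the outbox and push global state asynchronously.
        while True:
            key, value = await self.outbox.get()
            try:
                await self._replicate(key, value)
            finally:
                self.outbox.task_done()
```

The in-memory queue loses pending updates on restart; a real deployment would persist the outbox and retry failed replication so that global state eventually converges.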

Pair Singapore With CDN and Object Storage

A dedicated server in Singapore gives you CPU, RAM, and bandwidth; it shouldn’t be your universal file store or global cache.

Modern guidance from edge‑delivery vendors is clear: CDNs and edge computing have complementary roles. CDNs excel at caching static assets, reducing origin load and average latency; edge compute is best reserved for custom logic that truly benefits from running within a few milliseconds of the user.

With Melbicom, you can combine:

- CDN with 55+ PoPs across 36 countries. That gives you edge caches close to users while keeping your Singapore origin small and predictable.

- S3‑compatible object storage. Our S3 cloud storage scales to hundreds of terabytes of NVMe‑accelerated capacity, ideal for assets, backups, and logs that don’t belong on your origin disks.

In a typical setup:

- Your main application and databases run on one or more Singapore dedicated servers.

- Static assets (game patches, media, JS bundles) live in S3‑compatible storage.

- Melbicom’s CDN pulls from S3 and your Singapore origin, caching at PoPs worldwide.

- Only latency‑sensitive API calls or gameplay traffic hit the Singapore servers directly.
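The split in that setup can be expressed as a simple routing rule: static, versioned assets get long-lived cache headers and are served through the CDN from S3‑compatible storage, while API and gameplay paths bypass the cache and go straight to the Singapore origin. The path prefixes, file extensions, backend labels, and max-age values below are illustrative assumptions, not Melbicom CDN defaults.

```python
from pathlib import PurePosixPath

# Hypothetical URL conventions; adjust to your own layout.
STATIC_SUFFIXES = {".js", ".css", ".png", ".jpg", ".webp", ".mp4", ".pak"}
ORIGIN_PREFIXES = ("/api/", "/ws/", "/game/")


def route(path: str) -> tuple[str, str]:
    """Return (backend, Cache-Control header value) for a request path."""
    if path.startswith(ORIGIN_PREFIXES):
        # Latency-sensitive, per-user traffic: never cache, always hit the SG origin.
        return "sg-origin", "no-store"
    if PurePosixPath(path).suffix.lower() in STATIC_SUFFIXES:
        # Versioned static assets in object storage: cache at the edge for a year.
        return "cdn-s3", "public, max-age=31536000, immutable"
    # Other pages that are identical for every user: short edge cache from the origin.
    return "cdn-origin", "public, max-age=60"
```

A one-year `max-age` with `immutable` is only safe if asset filenames change on every release (for example, content-hashed bundles); otherwise users would keep stale files until the cache expires.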

That architecture lets you treat the SG origin as an ultra‑fast control plane for the region.

Singapore as Your APAC Latency Anchor

When you step back from the individual stats, a clear pattern emerges. Asia-Pacific’s digital economy is compounding rapidly; interconnection bandwidth is exploding; and Singapore has positioned itself at the center of that network with dense subsea cables, high‑capacity data centers, and a regulatory framework explicitly tuned for digital infrastructure.

For technical teams, the opportunity is straightforward: treat Singapore not as a checkbox location, but as a strategic latency anchor for the region. Start with a well‑peered Singapore server cluster, wrap it in a CDN and S3 storage layer, and then add complementary regions only where your latency budgets or regulatory needs truly demand it.

Here are a few practical takeaways before you sketch your next architecture diagram:

- Quantify your latency needs first. Decide which user journeys truly require sub‑80 ms latency and which can tolerate more. Let that drive how aggressively you invest in Singapore and where you might need secondary regions.

- Make peering and bandwidth non‑negotiable. Insist on test IPs, traceroutes, and clear per‑server bandwidth guarantees. For high‑growth products, treat 10 Gbps per origin server as a minimum rather than a luxury.

- Separate compute from content. Use Singapore dedicated servers for real‑time logic and state; push heavy assets into S3‑compatible storage and CDN. That keeps your latency‑critical flows tight and predictable.

- Automate region‑aware rollout. Treat Singapore as its own deployment stage with per‑region feature flags, canaries, and observability. Don’t assume a feature that performs well in Frankfurt will behave identically against high‑churn mobile networks in Southeast Asia.

- Plan for regulatory and sustainability shifts. Singapore’s regulators are tightening energy efficiency and resilience standards for data centers, which is good news for uptime — but it also rewards providers who invest in modern, efficient infrastructure. Align your long‑term plans with that direction of travel.
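The region-aware rollout advice can be sketched as a feature flag evaluated per region, with a deterministic percentage canary so that the same user stays in or out of the rollout across requests. The flag name, region keys, and percentages below are hypothetical; a hosted feature-flag service would provide equivalent behavior through its own API.

```python
import hashlib

# Hypothetical rollout plan: percentage of users per region who get the feature.
ROLLOUT_PERCENT = {
    "new_matchmaker": {"eu": 100, "na": 100, "apac": 5},
}


def bucket(user_id: str, flag: str) -> int:
    """Stable bucket in [0, 100) derived from the flag name and user ID."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(flag: str, region: str, user_id: str) -> bool:
    """True if this user falls inside the flag's rollout percentage for their region."""
    percent = ROLLOUT_PERCENT.get(flag, {}).get(region, 0)
    return bucket(user_id, flag) < percent
```

Hashing the flag name together with the user ID keeps canary populations independent across flags, so the same 5% of APAC users are not the guinea pigs for every release.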

Deploy in Singapore today

If you’re ready to turn these principles into a concrete deployment, we at Melbicom can help you anchor your Asia-Pacific workloads in Singapore. Our Singapore facility offers Tier III infrastructure, up to 200 Gbps per‑server bandwidth, more than 100 ready‑to‑go configurations, and custom builds delivered in 3 to 5 business days — all backed by free 24/7 support and seamless integration with our CDN and S3 storage. Order a server and start turning Singapore into the low‑latency backbone for your next phase of global growth.