
Dedicated vs. Cloud Hosting in Singapore: Which Is Right for You?

If you’re running high‑growth infrastructure out of Singapore, the “all‑in on public cloud” era is already over. Across Melbicom’s own customer base, the typical production stack now mixes public cloud, private cloud, and dedicated servers, with heavy, steady‑state workloads quietly moving off hyperscale platforms.

Independent data matches what we see. TechRadar’s analysis found that 86% of companies still use dedicated servers, and 42% have migrated workloads from public cloud back to dedicated servers in the past year. Broadcom’s global survey of VMware customers reports that 93% of organizations deliberately balance public and private cloud, 69% are considering repatriating workloads from public cloud, and 35% have already done so.

Singapore sits at the centre of this rebalancing. The city-state is doubling down on its role as a regional hub, including plans for a 700 MW low-carbon data centre park on Jurong Island, roughly a 50% boost to national capacity. For teams serving users across APAC, the real question is no longer “cloud or not?” but which workloads belong on a dedicated server in Singapore, and which should stay on cloud platforms.

Choose Melbicom: 130+ ready-to-go servers in SG, a Tier III-certified SG data center, and a 55+ PoP CDN across 6 continents.

In this article, we combine what we at Melbicom see running infrastructure in Singapore with recent research on performance, cost, and compliance. We’ll unpack where Singapore dedicated servers outperform cloud, where cloud still clearly wins, and how to design a mix that reins in cloud costs without handcuffing product teams. By the end, you should have a decision framework for placing each workload, plus a clear sense of when a Singapore dedicated server strategy is the more sustainable path.

Performance: When a Singapore Dedicated Server Strategy Wins

Cloud still wins on sheer flexibility. But for latency‑sensitive and throughput‑heavy workloads, a well‑designed dedicated deployment in SG usually has a structural edge.

Dedicated Server Hosting in Singapore for Latency‑Critical Workloads

A dedicated machine means no noisy neighbours, no shared hypervisor, and no surprise throttling. CPU scheduling, NUMA layout, storage IOPS, and network queues are all under your control. When you pin cores to specific services or tune kernel parameters, you actually get the benefit consistently—something far harder to guarantee on multi‑tenant cloud hosts.
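As a rough illustration of core pinning, the sketch below restricts the current process to a chosen set of cores with Python's `os.sched_setaffinity`, which is available on Linux only. The helper name `pin_to_cores` and the choice of core are assumptions made for illustration, not a recommended production layout.

```python
import os


def pin_to_cores(cores):
    """Pin the current process to the given CPU cores (Linux only)."""
    allowed = os.sched_getaffinity(0)   # cores this process may use right now
    target = set(cores) & allowed       # never request a core we cannot use
    if not target:
        raise ValueError(
            f"none of {sorted(cores)} are available; allowed: {sorted(allowed)}"
        )
    os.sched_setaffinity(0, target)     # 0 means "the calling process"
    return os.sched_getaffinity(0)      # report the affinity now in effect


if __name__ == "__main__":
    # Hypothetical choice: pin to the lowest-numbered core we are allowed to use.
    first_core = min(os.sched_getaffinity(0))
    print(pin_to_cores({first_core}))
```

On a single-tenant server the pinned cores are not shared with another customer's workloads, which is why this kind of tuning tends to pay off more consistently there.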

That matters for low‑latency trading and pricing engines, real‑time fraud detection and risk scoring, multiplayer game backends, WebRTC, live streaming, and interactive AI features. In those cases, a Singapore dedicated server hosted in a Tier III+ facility with strong peering will typically deliver more stable p95/p99 latency than a comparable cloud instance, simply because the stack between your process and the NIC is thinner and less virtualised.
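To make the p95/p99 comparison concrete, here is a minimal sketch that computes tail latency from a list of request timings using the nearest-rank method. The function names and the sample values are made up for illustration; real measurements would come from your own load tests against each environment.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def latency_summary(latencies_ms):
    """Median and tail latency plus a simple p99/p50 ratio (assumes positive latencies)."""
    p50 = percentile(latencies_ms, 50)
    p99 = percentile(latencies_ms, 99)
    return {
        "p50": p50,
        "p95": percentile(latencies_ms, 95),
        "p99": p99,
        "tail_ratio": p99 / p50,
    }


if __name__ == "__main__":
    # Made-up samples: mostly fast requests with a few slow outliers.
    samples = [12.0] * 95 + [40.0] * 4 + [250.0]
    print(latency_summary(samples))
```

Running the same summary against a dedicated server and a comparable cloud instance under identical load is one simple way to check whether the tail really is more stable for your workload.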

At our Singapore site, we provision 1–200 Gbps of bandwidth per server on Tier III infrastructure, with more than a hundred ready‑to‑go configurations. Globally, we operate 21 data centers and a backbone tied into more than 20 transit providers and over 25 internet exchange points, fronted by a CDN delivered through 55+ points of presence in 36 countries. In practice, that combination—local compute plus global edge distribution—is exactly what latency‑sensitive apps need: keep state and write paths in Singapore, push static or cacheable content out over the CDN, and avoid shipping every byte through expensive cloud egress.

For European or North American teams expanding into Southeast Asia, this mixed setup often looks like:

- dedicated servers in Singapore for real‑time APIs and stateful services,

- regional caches and object storage for media and large payloads, and

- selective use of cloud services where managed functionality (for example, analytics or messaging) outweighs the jitter and cost.

Where Cloud Instances Still Shine

Cloud is still the fastest way to experiment. For bursty, short-lived workloads such as ad-hoc analytics jobs, A/B tests, and temporary campaign backends, spinning up instances on a Singapore cloud platform is usually the right call. You get managed services, autoscaling, and platform tools without touching hardware.

Cloud also suits highly variable or unpredictable workloads, especially early in a product’s life. If traffic can swing 10x day-to-day and demand hasn’t stabilised yet, paying the flexibility premium is often cheaper than over-provisioning dedicated capacity.
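The trade-off between a flexibility premium and over-provisioning can be sketched as a utilisation threshold. The model below is deliberately simplified and entirely hypothetical: it assumes dedicated capacity is sized for peak load, cloud capacity tracks actual load perfectly, and cloud costs a fixed multiple (`cloud_premium`) per unit of capacity.

```python
def utilisation(avg_load, peak_load):
    """Fraction of peak-sized capacity that is busy on average."""
    if peak_load <= 0 or avg_load < 0 or avg_load > peak_load:
        raise ValueError("expected 0 <= avg_load <= peak_load and peak_load > 0")
    return avg_load / peak_load


def cloud_is_cheaper(avg_load, peak_load, cloud_premium):
    """True when paying per unit used beats owning capacity sized for peak.

    Dedicated cost ~ peak_load * unit_price                  (pay for peak all month)
    Cloud cost     ~ avg_load * unit_price * cloud_premium   (pay for what is used)
    So cloud wins when avg_load / peak_load < 1 / cloud_premium.
    """
    if cloud_premium <= 0:
        raise ValueError("cloud_premium must be positive")
    return utilisation(avg_load, peak_load) < 1 / cloud_premium


if __name__ == "__main__":
    # Hypothetical numbers: a spiky early-stage service vs. a stabilised one,
    # both with an assumed 3x per-unit cloud premium.
    print(cloud_is_cheaper(avg_load=2, peak_load=10, cloud_premium=3))
    print(cloud_is_cheaper(avg_load=8, peak_load=10, cloud_premium=3))
```

Under these assumptions, a workload that averages 20% of its peak favours cloud, while one that averages 80% of its peak favours dedicated capacity.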

Financial‑sector analysis of cloud migration in Southeast Asia underlines this pattern. Institutions across Indonesia, the Philippines, Singapore, and neighbouring markets are accelerating digital banking, putting pressure on infrastructure but often starting on cloud to move quickly. The mistake isn’t using cloud; it’s leaving everything there once workloads become stable, heavy, and business‑critical.

Cost: Cloud Flexibility vs. Dedicated Server Predictability

Public cloud pricing looks granular: per vCPU-hour, per GB-month, per million requests. In practice, those small charges tend to add up to one of the largest lines in the P&L.

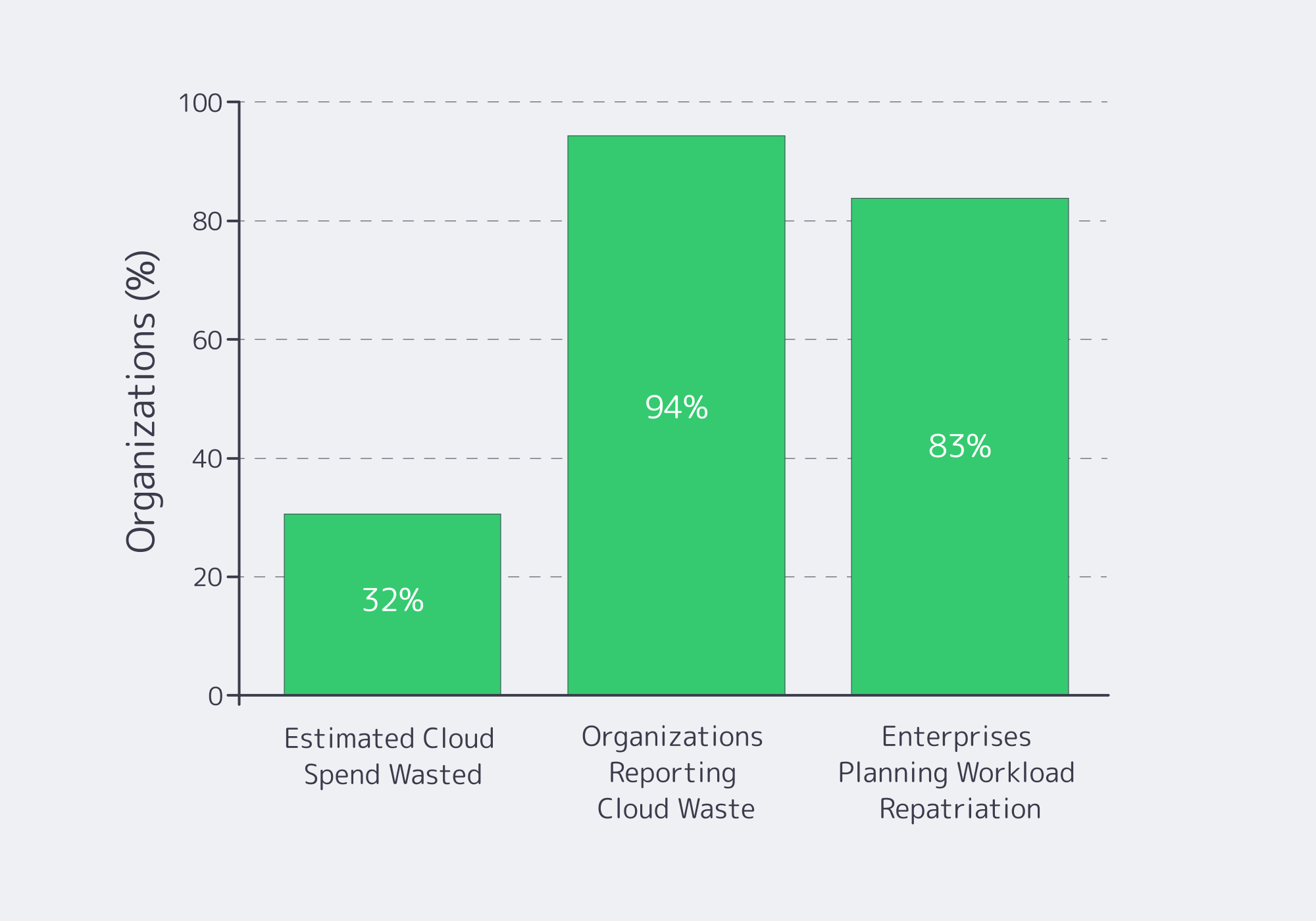

In the same study cited earlier, nearly half of IT leaders reported surprise cloud-related infrastructure costs. Flexera’s State of the Cloud research points the same way, with respondents self-estimating that about 32% of cloud spend is wasted, up from 30% the previous year.

Cloud Hosting in Singapore and the Actual Cost of Elastic Workloads

Cloud’s economic model is built around elasticity. You avoid upfront CapEx, but you pay a premium for every kWh, vCPU, and GB you consume—forever.

Andreessen Horowitz’s “Trillion‑Dollar Paradox” analysis of public software companies found that, for some high‑growth firms, committed cloud contracts averaged around 50% of cost of revenue—a level where optimization has a direct, material impact on gross margin. Broadcom’s survey work shows why many teams are re‑examining their cloud footprint: 94% of IT leaders report some level of waste in public‑cloud spend, nearly half believe more than a quarter of that spend is wasted, and 90% say private cloud offers better cost visibility and predictability.

In Southeast Asia’s financial sector, additional cost layers show up in the migration itself. A recent analysis of cloud migration projects across banks in the region points to high refactoring costs, long transitional periods where on‑prem and cloud must be run in parallel, and the need for specialist cloud talent—each of which pushes the all‑in cloud bill higher than the raw price list suggests.

High‑density GPU nodes, sustained multi‑gigabit throughput, and premium storage tiers all carry cloud pricing multipliers that compound as workloads grow. Once you add data‑egress charges and inter‑AZ traffic for chatty microservices, “just leave it in the cloud” stops being neutral advice.

How Dedicated Servers in Singapore Change the Unit Economics

Dedicated reverses that model. You pay a predictable monthly fee for a known quantity of CPU, RAM, storage, and bandwidth, and you can drive utilisation close to the limit without triggering new pricing tiers.

A Barclays‑backed CIO survey found that 83% of enterprises plan to move at least some workloads from public cloud to on‑premises or private cloud infrastructure, up from 43% just a few years earlier—a striking swing driven largely by cost and control concerns. It’s not nostalgia; it’s unit economics.

For Singapore specifically, steady‑state workloads such as core databases, ledgers, game backends, and real‑time APIs often reach a point where running them on dedicated servers in a local facility is materially cheaper than keeping them on large cloud instances. Bandwidth‑heavy services—video, file delivery, AI inference over websockets—benefit from flat‑rate or high‑included‑traffic models. With a dedicated box pushing tens of gigabits and a CDN absorbing global distribution, your marginal cost per additional user often falls over time instead of rising.
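A back-of-the-envelope sketch of that effect follows. Every price, traffic allowance, and per-user figure here is a hypothetical placeholder rather than a quote from any provider; the point is only the shape of the curve when bandwidth is flat-rate on one side and metered on the other.

```python
def dedicated_monthly_cost(flat_fee, traffic_gb, included_gb, overage_per_gb=0.0):
    """Flat monthly fee, plus overage only if traffic exceeds the included allowance."""
    overage_gb = max(0.0, traffic_gb - included_gb)
    return flat_fee + overage_gb * overage_per_gb


def cloud_monthly_cost(compute_fee, traffic_gb, egress_per_gb):
    """Compute charge plus egress billed on every GB served."""
    return compute_fee + traffic_gb * egress_per_gb


def cost_per_user(monthly_cost, users):
    """Average monthly infrastructure cost attributable to each user."""
    if users <= 0:
        raise ValueError("users must be positive")
    return monthly_cost / users


if __name__ == "__main__":
    GB_PER_USER = 2  # assumed monthly traffic per user
    for users in (1_000, 10_000, 50_000):
        traffic = users * GB_PER_USER
        dedicated = dedicated_monthly_cost(flat_fee=1_000, traffic_gb=traffic,
                                           included_gb=100_000)
        cloud = cloud_monthly_cost(compute_fee=600, traffic_gb=traffic,
                                   egress_per_gb=0.08)
        print(users,
              round(cost_per_user(dedicated, users), 4),
              round(cost_per_user(cloud, users), 4))
```

With these made-up inputs, the dedicated cost per user keeps falling as users grow, because the fee stays fixed while traffic fits the allowance. The metered model flattens out at roughly the per-user egress charge.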

At Melbicom, we see this pattern regularly. Teams start in the cloud, then move hot paths—databases, in‑memory caches, critical application tiers—to our Singapore dedicated servers once traffic patterns stabilise. In our Singapore data centre alone, there are 100+ ready‑to‑go configurations, and custom servers can be assembled in roughly 3–5 business days, so there’s no long CapEx‑style planning cycle. You keep an OpEx mindset while clawing back the premiums built into hyperscale pricing.

Dedicated vs. Cloud at a Glance

| Aspect | Dedicated Server in Singapore | Cloud Server in Singapore |
|---|---|---|
| Performance | Consistent, low-jitter performance with full control over hardware, network stack, and tuning. Best for latency-sensitive and high-IO workloads. | Good average performance, but more variance due to multi-tenancy, noisy neighbours, and shared hypervisors, especially at higher utilisation. |
| Cost | Fixed monthly pricing with high utilisation potential; better long-term unit economics once workloads are steady and resource-intensive. | Pay-as-you-go and CapEx-free, ideal for experiments and spiky demand; can become one of the largest recurring costs at scale. |
| Control & Compliance | Full control over data locality, access paths, logging, and change management; easier to map to internal policies and audits. | Shared responsibility model with constraints on where and how data is stored and processed; more complex to explain and evidence to regulators. |

Compliance and Data Control: Singapore Dedicated Server Hosting vs. Cloud

Performance and cost are quantifiable. Compliance is more binary: either you can prove you meet your obligations, or you can’t.

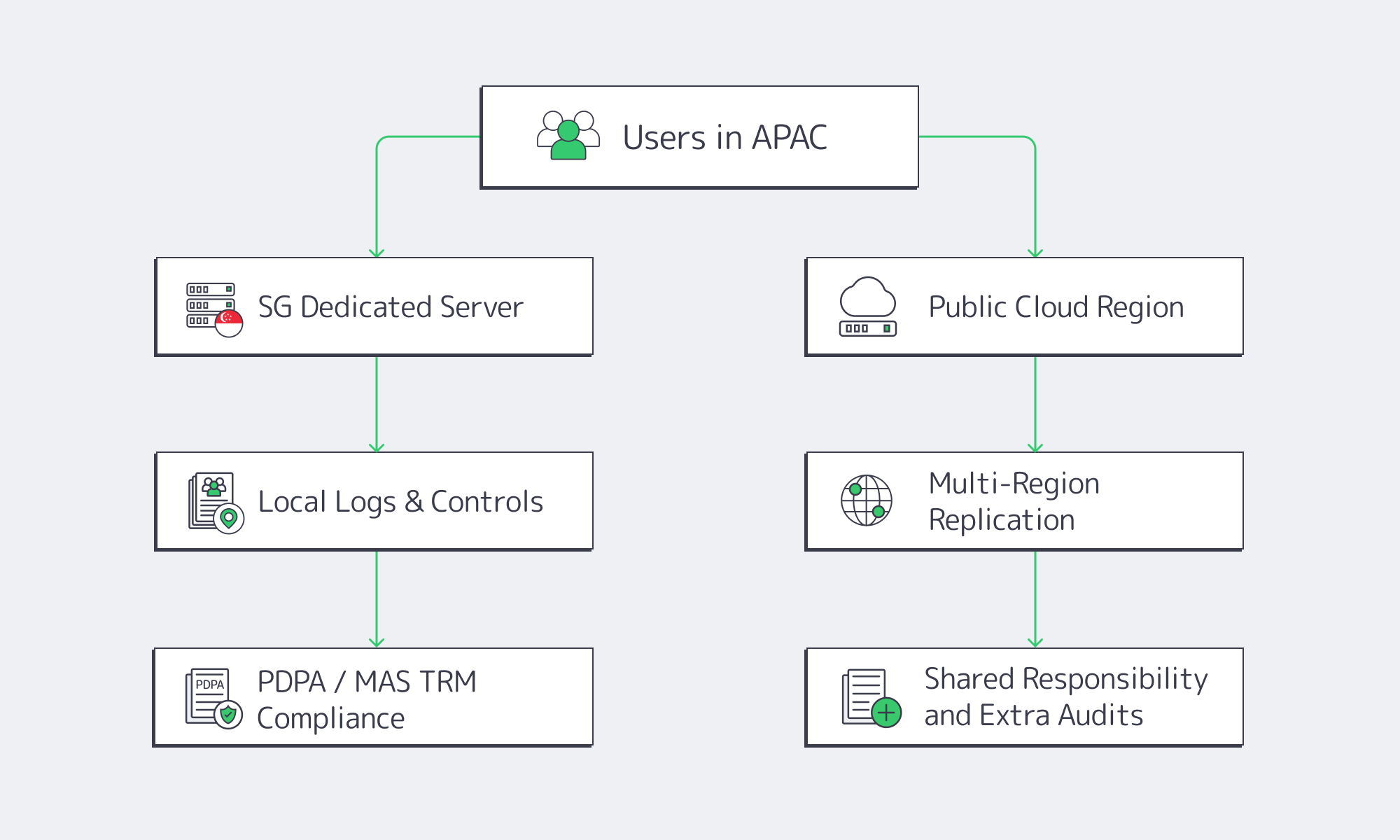

PDPA, Financial Regulation, and Data Residency

Singapore’s Personal Data Protection Act (PDPA) has real teeth. The Personal Data Protection Commission (PDPC) can order organisations to stop processing data, delete unlawfully‑held information, and impose fines of up to 10% of local annual turnover or SGD 1 million (roughly USD 800K), whichever is higher. Cross‑border transfers are allowed only if the overseas recipient offers “comparable protection” to PDPA standards.
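The "whichever is higher" rule is easy to misread, so here is a small worked sketch of the ceiling as described above. It is a simplification of the article's summary, not a statement of how the PDPC actually sets penalties, and the function name and turnover figures are hypothetical.

```python
PDPA_FIXED_CAP_SGD = 1_000_000   # fixed amount quoted in the paragraph above
PDPA_TURNOVER_PERCENT = 10       # share of local annual turnover quoted above


def max_pdpa_fine_sgd(local_annual_turnover_sgd):
    """Upper bound on a fine: the higher of 10% of local turnover or SGD 1 million."""
    if local_annual_turnover_sgd < 0:
        raise ValueError("turnover cannot be negative")
    turnover_based = local_annual_turnover_sgd * PDPA_TURNOVER_PERCENT / 100
    return max(turnover_based, PDPA_FIXED_CAP_SGD)


if __name__ == "__main__":
    # Hypothetical organisations with SGD 5M and SGD 50M in local annual turnover.
    for turnover in (5_000_000, 50_000_000):
        print(turnover, max_pdpa_fine_sgd(turnover))
```

For a smaller organisation the fixed SGD 1 million figure sets the ceiling, while for a larger one the turnover-based figure takes over.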

Layer on sectoral rules—such as MAS Technology Risk Management (TRM) guidelines in finance, PCI DSS for payments, and internal risk policies—and the tolerance for opaque control planes shrinks fast. A recent review of cloud migration in Southeast Asia’s financial sector highlights what this looks like in practice:

- overlapping data‑protection regimes across markets,

- data‑localisation requirements in countries like Indonesia and Vietnam,

- cloud concentration and vendor lock‑in risks, and

- significant skill gaps and migration costs.

That mix is driving many institutions toward hybrid models: regulated systems anchored in a clearly defined environment (often in Singapore), with carefully curated use of public‑cloud services around the edges.

Building a Defensible Control Story

A Singapore‑hosted dedicated environment simplifies the story you tell auditors, regulators, and enterprise customers. You can point to specific racks in a Singapore data centre and document exactly which workloads and datasets run there. Only your team (and selected provider engineers under contract) can access the physical machines; there is no shared control plane and no other tenants on the box. OS images, patch cadence, encryption standards, and logging pipelines are all under your governance, making it easier to map them to PDPA, MAS TRM, PCI DSS, and internal policies.

By contrast, regulated workloads that remain on multi-tenant cloud platforms tend to require substantial additional governance. A carefully designed landing zone, a multi-account structure, centralised security policies, and dedicated cloud-spend management tooling are all needed just to keep the environment compliant and economical. None of this negates cloud’s value, but it does highlight that the operational burden sits with you.

We at Melbicom lean into the simpler model where it helps. Our dedicated servers in Singapore run in a Tier III facility with 1–200 Gbps per‑server bandwidth and direct connectivity into our global backbone. Regulated or high‑risk workloads can stay entirely within Singapore, under your change control, while non‑sensitive assets—media, cached responses, static content—are pushed out through our CDN and S3‑compatible object storage layer for global reach. That lets you meet data‑residency and audit expectations without accepting the cost and jitter of keeping everything in a single public‑cloud region.

Getting the Mix Right in Singapore

For most teams, the answer in Singapore isn’t “all‑cloud” or “all‑dedicated.” The pattern emerging from both industry data and what we see in practice is a deliberate mix: core, steady‑state, and regulated workloads on dedicated servers in Singapore; bursty, experimental, or heavily managed components in the cloud; and global reach delivered via CDN and object storage rather than by stretching a single compute layer everywhere.

Surveys and case studies point in the same direction. Enterprises worldwide are rebalancing toward private and dedicated infrastructure for their heaviest workloads, while keeping cloud for what it does best: fast iteration, managed services, and short‑lived capacity. At the same time, Singapore is expanding low‑carbon data‑centre capacity and strengthening its role as an anchor for regional infrastructure, making it an obvious hub for that dedicated tier.

If you’re deciding where to place your next tranche of capacity in or around Singapore, a few practical recommendations follow from the analysis above:

- Anchor latency‑critical and compliance‑heavy systems on dedicated Singapore infrastructure. Real‑time trading, risk, payments, game state, and other stateful services that suffer from multi‑tenant jitter or complex audit trails belong on single‑tenant servers under your direct control, close to your users.

- Use cloud platforms surgically for elasticity and managed primitives. Early-stage products, unpredictable workloads, and specialised managed services (databases, analytics, functions) still fit well on Singapore cloud hosting platforms, provided you set clear thresholds for when to repatriate long-running, resource-intensive components.

- Treat network and data‑movement costs as first‑class design constraints. For bandwidth‑heavy or chatty services, design around high‑capacity dedicated links in Singapore plus a CDN and object storage tier, rather than assuming cross‑region or cross‑provider traffic will remain a rounding error on your cloud bill.

Applying those recommendations honestly to each workload usually reveals the shape of your Singapore infrastructure portfolio without the need for ideology.
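The recommendations above can be sketched as a simple placement rule. This is a hypothetical rule of thumb, not a Melbicom tool: the `Workload` fields, the tier names, and the order of the checks are all assumptions you would adapt to your own criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    latency_critical: bool = False
    regulated: bool = False
    steady_state: bool = False
    bandwidth_heavy: bool = False
    static_or_cacheable: bool = False


def place(workload):
    """Suggest a tier for a workload, following the three recommendations above."""
    # Non-sensitive static or cacheable content goes out via CDN and object storage.
    if workload.static_or_cacheable and not workload.regulated:
        return "cdn_and_object_storage"
    # Latency-critical, regulated, steady, or bandwidth-heavy systems are anchored
    # on single-tenant servers in Singapore.
    if (workload.latency_critical or workload.regulated
            or workload.steady_state or workload.bandwidth_heavy):
        return "dedicated_singapore"
    # Everything else (bursty, experimental, heavily managed) stays on cloud.
    return "cloud"


if __name__ == "__main__":
    portfolio = [
        Workload("payments-ledger", regulated=True, steady_state=True),
        Workload("campaign-ab-test"),
        Workload("video-assets", static_or_cacheable=True, bandwidth_heavy=True),
    ]
    for w in portfolio:
        print(w.name, "->", place(w))
```

Even a crude rule like this forces an explicit decision for every workload, which is more useful than a blanket "cloud-first" or "dedicated-first" policy.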

Get a Dedicated Server in Singapore

Ready to move stable or regulated workloads to Singapore? Deploy high‑performance dedicated servers with 1–200 Gbps bandwidth and fast setup, backed by our global network, CDN, and 24/7 support.