Hybrid Web3 Infrastructure Strategy: Dedicated Baseline, Cloud For Bursts

Web3 infrastructure doesn’t behave like “normal” web infrastructure. A blockchain server (validators, full nodes, sequencers, indexers, RPC, event pipelines) isn’t a stateless pool of app servers you can autoscale into oblivion and forget. It’s stateful, I/O-heavy, and latency-sensitive in ways that expose the two things hyperscale cloud is worst at: metered unpredictability and performance variance.

The result is a pattern that’s quietly become mainstream: modern teams keep cloud for bursts, edge, and backups—but repatriate the “always-on” core of crypto hosting to single-tenant dedicated servers for deterministic performance and predictable cost.

The uncomfortable truth for Web3 infra is that you can’t optimize what you can’t predict, and “pay for what you use” becomes “pay for what your users (and your chain) force you to do.”

Which Infrastructure Reduces Web3 Infra Costs Most

Dedicated servers typically reduce Web3 infrastructure costs the most when workloads are steady-state: validators, RPC, indexers, and data-heavy backends that run 24/7. Hyperscale cloud still wins for burst capacity and one-off workloads, but metered compute, storage operations, and especially data egress can punish always-on systems. The cost-optimal architecture is usually hybrid: bare metal baseline, cloud for spikes and DR.

The Web3 Infrastructure Cost Model Cloud Pricing Doesn’t Love

Hyperscale cloud is optimized for elasticity and productized building blocks. Web3 infrastructure is optimized for continuous performance and continuous data movement. That mismatch shows up in places CFO dashboards don’t capture until it’s too late:

- Always-on compute: a validator or RPC fleet isn’t seasonal. If the box is always hot, “elastic” becomes “expensive.”

- State growth and reindexing: chain history and derived indices grow monotonically. Snapshots, reorg handling, and replay workloads create recurring high I/O windows.

- Bandwidth is a first-class resource: RPC is network-first. Indexers are network + disk-first. Propagation is latency-first. In cloud, bandwidth is usually metered and constrained at multiple layers (instance limits, cross-AZ, egress, managed LB/NAT).
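To make the mismatch concrete, here is a minimal cost sketch. Every rate and volume in it is a made-up placeholder, not any provider’s real pricing, and `CloudRates`, `cloud_monthly_cost`, and `dedicated_monthly_cost` are hypothetical names. It assumes the dedicated price bundles bandwidth and local NVMe, which you should verify for your own plan. The point is only the shape: the metered bill moves with traffic, the flat one doesn’t.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # average number of hours in a month


@dataclass
class CloudRates:
    """Hypothetical metered rates; substitute your provider's actual pricing."""
    instance_per_hour: float  # USD per instance-hour
    egress_per_gb: float      # USD per GB leaving the provider
    storage_per_gb: float     # USD per GB-month of block storage


def cloud_monthly_cost(rates: CloudRates, instances: int,
                       egress_gb: float, storage_gb: float) -> float:
    """Metered model: every term scales with usage."""
    compute = rates.instance_per_hour * HOURS_PER_MONTH * instances
    egress = rates.egress_per_gb * egress_gb
    storage = rates.storage_per_gb * storage_gb
    return compute + egress + storage


def dedicated_monthly_cost(flat_per_server: float, servers: int) -> float:
    """Flat model: assumes bandwidth and local NVMe are bundled in the price."""
    return flat_per_server * servers


# Placeholder numbers for illustration only.
rates = CloudRates(instance_per_hour=0.50, egress_per_gb=0.08, storage_per_gb=0.10)
quiet = cloud_monthly_cost(rates, instances=4, egress_gb=20_000, storage_gb=8_000)
busy = cloud_monthly_cost(rates, instances=4, egress_gb=60_000, storage_gb=8_000)
flat = dedicated_monthly_cost(flat_per_server=600.0, servers=4)

print(f"cloud, quiet month: ${quiet:,.0f}")
print(f"cloud, busy month:  ${busy:,.0f}")
print(f"dedicated, any month: ${flat:,.0f}")
```

With these placeholder inputs the same four always-on instances cost very different amounts from month to month purely because egress tripled. That forecasting problem is what the rest of this article is about.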

Cloud vs. Dedicated for Web3 Infrastructure Decisions

| Web3 Infrastructure Constraint | Where Hyperscale Cloud Commonly Hurts | Dedicated Base Layer + Cloud Burst Approach |
|---|---|---|
| 24/7 node and RPC compute | Metered hourly costs + “always-on” instances erode unit economics | Single-tenant servers for baseline; cloud only for temporary scale events |
| Storage growth + reindex workloads | Storage ops + access patterns can trigger surprise bills and throttling | Local NVMe for hot state; object storage for snapshots/archives |
| High-throughput RPC and data APIs | Egress, cross-zone traffic, and LB/NAT patterns amplify spend | High-port dedicated bandwidth for steady traffic; cloud CDN/edge for cacheable reads |
| Latency variance and jitter | Multitenancy, virtualized networking, and noisy neighbors widen tail latency | Deterministic hardware + tighter OS/network tuning; cloud reserved for non-critical paths |
| Multi-region resiliency | Cross-region data replication is powerful but often pricey | Dedicated primary/secondary regions; cloud for DR drills, backups, and short-lived failover |

This is why “cloud repatriation” looks less like a rollback and more like an optimization: keep cloud where it’s best (burst and managed services), and stop renting your baseline.

Choose Melbicom: 1,300+ ready-to-go servers, 21 global Tier IV & III data centers, 55+ PoP CDN across 6 continents.

What Dedicated Server Setup Optimizes Blockchain Performance

The dedicated server setup that optimizes blockchain performance is single-tenant hardware with high-clock CPU cores, fast local NVMe, and uncongested bandwidth in a well-connected data center. This minimizes noisy-neighbor interference, reduces latency variance, and gives operators control over kernel, I/O scheduling, and networking—controls that materially affect p95/p99 RPC latency and block propagation in real production.

Why a Single-Tenant Blockchain Server Beats a Multitenant VM for the Hot Path

In Web3 infrastructure, “performance” isn’t average throughput—it’s the tails:

- Propagation and gossip punish jitter. If a node’s networking stack is inconsistent, it’s effectively “slow” even if average throughput looks fine.

- RPC SLAs are tail-latency problems. A few bad milliseconds at p99 can feel like outages when wallets and bots retry and stampede.

- Indexer ingestion is an I/O pipeline. If your write amplification spikes, your lag becomes user-facing.

A dedicated server gives you an unshared performance envelope. Cloud can give you impressive burst throughput, but keeping latency variance tight is harder when the noisy neighbor is literally someone else’s workload.
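A small sketch shows why averages hide the problem. The latency samples below are invented for illustration, and `percentile` is a hypothetical helper using the simple nearest-rank definition (monitoring stacks often interpolate instead, so their numbers can differ slightly).

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# 100 made-up RPC latencies in milliseconds: 97 fast responses and 3 slow outliers.
latencies_ms = [20.0] * 97 + [400.0, 450.0, 500.0]

mean = sum(latencies_ms) / len(latencies_ms)
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
p99 = percentile(latencies_ms, 99)

print(f"mean={mean:.1f} ms  p50={p50:.0f} ms  p95={p95:.0f} ms  p99={p99:.0f} ms")
```

In this toy data set the mean and even p95 look healthy, while p99 is more than twenty times the median. Those few requests are the ones that trigger client retries.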

What Optimized Looks Like in Web3 Server Hosting

A high-performance Web3 server typically converges on a similar shape:

- CPU: fewer, faster cores beat “lots of mediocre vCPUs” for many chain clients and RPC workloads (especially under lock contention and mempool processing).

- Storage: local NVMe for hot chain state, databases, and indices; avoid remote volumes on the critical path when you can.

- Network: provision for sustained high bandwidth and stable routing, not just “best effort” throughput.

This is also where infrastructure becomes geography-aware without becoming geography-obsessed: pick locations for users, peers, and liquidity centers, then optimize the routes and tails.

Where Melbicom Fits When Performance Is the Bottleneck

For teams building Web3 infrastructure on dedicated servers, Melbicom’s approach is purpose-built for the “baseline layer”: single-tenant Web3 server hosting in 21 global Tier III+ locations, backed by a 14+ Tbps backbone with 20+ transit providers and 25+ IXPs, and per-server ports up to 200 Gbps (availability depends on location). Melbicom keeps 1,300+ ready-to-go configurations that go live in 2 hours and can deliver custom builds in 3–5 business days.

On top of that baseline, the hybrid layer stays intact: Melbicom’s CDN runs 55+ PoPs across 36 countries for cacheable reads and static assets; S3 cloud storage provides an object-store target for snapshots and backup artifacts; and BGP sessions enable routing control and multi-site designs without turning your edge into a science project. (And yes—Melbicom provides 24/7 support when you’re debugging routing at 3 a.m.)

When Should Web3 Infra Teams Repatriate Cloud Workloads

Web3 teams should repatriate cloud workloads when costs become volatile, latency SLOs keep failing at the tails, or storage and bandwidth economics start dictating architecture decisions. Repatriation doesn’t mean “no cloud”—it means moving baseline blockchain workloads to single-tenant servers while keeping cloud capacity for bursts, disaster recovery, and specialized managed services.

Repatriation Signals for Crypto Server Hosting

If you’re seeing these, you’re already paying the cost of not repatriating, just not explicitly:

- The “egress budget” is now a first-class line item (and keeps growing with usage).

- RPC p99 latency drifts upward under load, even after “adding more instances.”

- Indexer lag becomes a constant firefight because the storage pipeline is throttled or inconsistent.

- “We can’t forecast this bill” becomes a monthly meeting rather than a one-off surprise.

- You’re optimizing architecture around pricing mechanics instead of around throughput, correctness, and resiliency.

What Web3 Infra Actually Moves First

Teams don’t repatriate everything. They repatriate what is (1) always-on, (2) bandwidth-heavy, (3) I/O-heavy, and (4) latency-sensitive:

- validator + full-node fleets

- RPC clusters and load balancers tightly coupled to network performance

- indexers, ETL, event pipelines, and query layers with large hot datasets

- gateway layers that need consistent throughput

What stays in cloud: burst compute, infrequent batch jobs, “just in case” overflow, and managed services that genuinely create leverage.

Hybrid Crypto Hosting Strategies That Actually Work

The winning pattern in 2026 isn’t “cloud vs. dedicated.” It’s intentional hybrid: single-tenant servers for the baseline, cloud for elasticity, plus tight control over data movement.

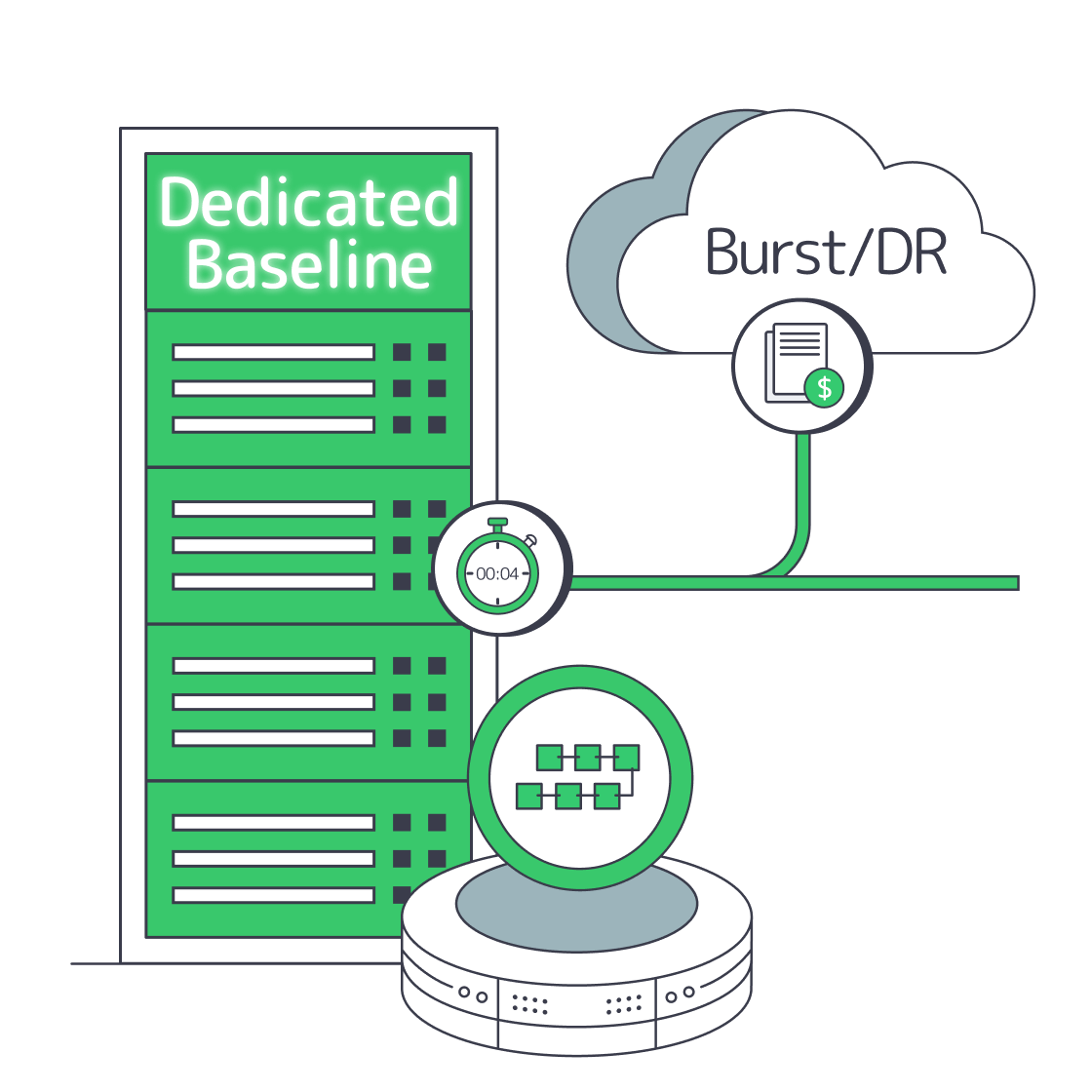

Pattern 1: Dedicated Baseline + Cloud Burst

Run the steady-state load on dedicated servers. Use cloud instances only when demand temporarily exceeds baseline capacity (launch days, NFT mints, volatility spikes). This forces a healthier planning discipline: you pay premium rates only when the business is actually benefiting.
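The burst decision itself can be sketched in a few lines. The capacities and demand figures below are illustrative assumptions, and `burst_instances` is a hypothetical helper, not part of any autoscaler’s API.

```python
import math


def burst_instances(demand_rps: float, baseline_capacity_rps: float,
                    per_instance_rps: float) -> int:
    """Cloud instances needed to cover demand above the dedicated baseline."""
    if per_instance_rps <= 0:
        raise ValueError("per_instance_rps must be positive")
    overflow = max(0.0, demand_rps - baseline_capacity_rps)
    return math.ceil(overflow / per_instance_rps)


# Assumed numbers: the dedicated fleet handles 10,000 req/s,
# and each cloud instance adds roughly 1,500 req/s.
BASELINE_RPS = 10_000
for label, demand in [("quiet", 6_000), ("normal", 9_500), ("mint day", 14_200)]:
    extra = burst_instances(demand, BASELINE_RPS, per_instance_rps=1_500)
    print(f"{label:>8}: {demand:>6} req/s -> {extra} cloud instance(s)")
```

On quiet and normal days the function returns zero, so nothing is rented. Cloud spend appears only on the days when demand actually exceeds the baseline.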

Pattern 2: Dedicated Core + CDN for Read Scaling

For read-heavy traffic (docs, ABIs, static site assets, and cacheable API responses), pushing delivery to an edge network changes the economics. Melbicom’s CDN (55+ PoPs across 36 countries) can offload predictable reads while your dedicated core handles the uncached, chain-dependent requests.
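One way to express that split is a `Cache-Control` policy at the origin. This is a sketch only: the URL paths are hypothetical, the TTLs are arbitrary, and it assumes static assets and ABIs are published under versioned file names so they can safely be marked immutable. How a given CDN honors these headers depends on its configuration.

```python
def cache_control(path: str) -> str:
    """Pick a Cache-Control header for a request path (hypothetical URL layout)."""
    if path.startswith("/rpc"):
        # Chain-dependent responses: always served by the dedicated core.
        return "no-store"
    if path.startswith(("/static/", "/abi/")):
        # Versioned files never change once published, so the edge can keep them.
        return "public, max-age=86400, immutable"
    if path.startswith("/docs/"):
        # Docs change occasionally; a short TTL keeps them reasonably fresh.
        return "public, max-age=300"
    # Unknown paths default to uncached to avoid serving stale chain data.
    return "no-store"


for p in ["/rpc", "/abi/erc20.v3.json", "/docs/quickstart", "/static/app.9f1c.js"]:
    print(f"{p:<24} {cache_control(p)}")
```

Defaulting unknown paths to `no-store` is a deliberate choice here: a missed cache hit costs a little origin traffic, while a wrongly cached chain response can be a correctness bug.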

Pattern 3: Dedicated Hot Data + Object Storage for Snapshots and Backups

Keep hot state and indices on local NVMe; move artifacts to object storage: snapshots, replay packages, logs, and backups. Melbicom’s S3-compatible storage is a natural fit for “durable and cheap(ish)” data that shouldn’t be on the same box as the node process.
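Artifacts in object storage still need a retention rule, or “cheap(ish)” stops being true. Below is a minimal pruning sketch. The key layout is a hypothetical naming convention that embeds an ISO date so lexical order matches chronological order, and `snapshots_to_prune` only computes which keys to delete. Issuing the actual delete calls against your storage API is left out.

```python
def snapshots_to_prune(keys: list[str], keep: int) -> list[str]:
    """Return object keys to delete, keeping the `keep` newest snapshots.

    Assumes each key embeds an ISO-8601 date (YYYY-MM-DD) under a common
    prefix, so sorting the keys sorts the snapshots by age.
    """
    if keep < 0:
        raise ValueError("keep must be non-negative")
    ordered = sorted(keys)
    if keep == 0:
        return ordered  # keep nothing: every key is a deletion candidate
    return ordered[:-keep]  # everything except the newest `keep` keys


# Hypothetical bucket listing.
keys = [
    "snapshots/mainnet/2026-01-05.tar.zst",
    "snapshots/mainnet/2026-01-01.tar.zst",
    "snapshots/mainnet/2026-01-04.tar.zst",
    "snapshots/mainnet/2026-01-02.tar.zst",
    "snapshots/mainnet/2026-01-03.tar.zst",
]

for key in snapshots_to_prune(keys, keep=3):
    print("would delete:", key)
```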

Pattern 4: Multi-Site Dedicated Servers + BGP Sessions for Routing Control

When uptime and latency are existential, hybrid often becomes multi-site. With BGP sessions, teams can control routing and failover behavior more explicitly than DNS tricks alone. The key is keeping the topology simple enough to operate—two well-chosen sites beat five half-maintained ones.

Designing Web3 Infrastructure for the Next Market Cycle

The next cycle won’t only stress scale. It will stress unit economics under scale. Web3 infra that survives isn’t the one that can hit the highest peak once—it’s the one that can run hot for months without making finance or SREs miserable.

At Melbicom, the architecture conversations that go best start with a brutally honest baseline: how much steady-state CPU, NVMe, and bandwidth your protocol actually needs when it’s “quiet,” and what kind of burst profile you get when it isn’t. Once you have that, infrastructure stops being a religious debate and becomes engineering: isolate the baseline onto single-tenant servers, then use cloud strategically where it creates real leverage.
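That baseline-versus-burst question can be answered from your own traffic history. The sketch below is one possible approach, not a sizing rule: it picks the baseline as a percentile of observed hourly peak demand and then reports how often, and by how much, demand exceeded it. The sample data, the 80th-percentile choice, and the function names are all assumptions for illustration.

```python
import math


def size_baseline(hourly_peak_rps, pct=80):
    """Baseline capacity as the nearest-rank percentile of observed hourly peaks."""
    if not hourly_peak_rps:
        raise ValueError("no history")
    ordered = sorted(hourly_peak_rps)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def burst_profile(hourly_peak_rps, baseline):
    """How many hours exceeded the baseline, and the largest overflow seen."""
    over = [d - baseline for d in hourly_peak_rps if d > baseline]
    return {"burst_hours": len(over), "max_overflow_rps": max(over, default=0)}


# Ten made-up hourly peaks (req/s): mostly quiet, with two spike hours.
history = [4000, 4200, 4100, 4300, 4500, 4400, 4200, 4600, 9000, 12000]

baseline = size_baseline(history, pct=80)
profile = burst_profile(history, baseline)
print(f"baseline: {baseline} req/s, profile: {profile}")
```

A higher percentile buys more dedicated headroom and fewer burst hours, and a lower one does the opposite. The right trade-off depends on how your dedicated and burst prices compare.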

Build Your Hybrid Web3 Infrastructure with Melbicom

The most cost-effective Web3 infrastructure strategies today don’t chase purity. They chase control: control over tail latency, control over data movement, and control over unit economics as your chain traffic scales. Dedicated servers are how teams stabilize the baseline; cloud is how teams absorb the unpredictable.

If the goal is to ship reliable crypto hosting without turning cloud billing into an engineering constraint, the decision isn’t “cloud or dedicated.” The decision is what belongs on the baseline, what belongs in burst capacity, and what belongs at the edge.

- Baseline first: size dedicated capacity for steady-state validators/RPC/indexing, not for peak mania.

- Measure tails: optimize p95/p99 RPC latency and propagation jitter before throwing more instances at averages.

- Treat bandwidth as a primary resource: design for sustained throughput and predictable routing, not “free” internal traffic assumptions.

- Separate hot state from durable artifacts: keep hot chain data local; push snapshots/logs/backups to object storage.

- Use cloud tactically: bursts, DR drills, and non-critical services—never as the default home for the always-on core.

Launch dedicated Web3 servers today

Get predictable performance and cost with single-tenant hardware, CDN, and S3-compatible storage. Build a hybrid baseline on dedicated servers and burst to cloud when needed.