
Dedicated Servers for Decentralized Storage Networks

Decentralized storage stopped being a curiosity the moment it started behaving like infrastructure: predictable throughput, measurable durability, and node operators that treat hardware as a balance sheet, not a weekend project. That shift tracks the market. Global Market Insights estimates the decentralized storage market at $622.9M in 2024, growing to $4.5B by 2034 (22.4% CAGR).

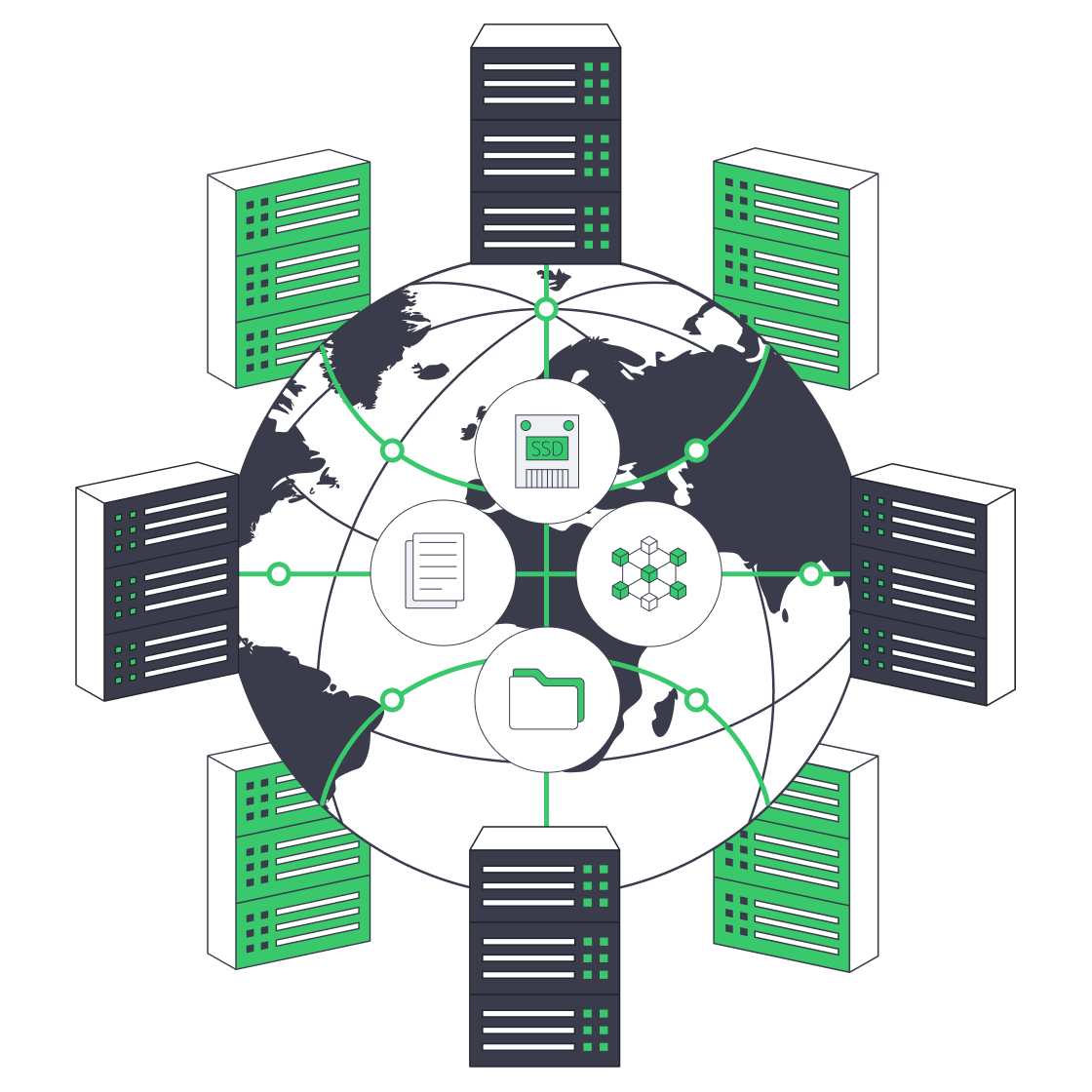

The catch is that decentralization doesn’t delete physics. It redistributes it. If you want to run production-grade nodes—whether you’re sealing data for a decentralized storage network or serving content over IPFS—your success is gated by disk topology, memory headroom, and the kind of bandwidth that stays boring under load.


Decentralized Storage Nodes: Where the Real Bottlenecks Live

You can spin up a lightweight node on a spare machine, and for demos that’s fine. But professional decentralized storage providers are solving a different problem: ingesting and proving large datasets continuously without missing deadlines, dropping retrievals, or collapsing under “noisy neighbor” contention.

The scale is already real. The Filecoin Foundation notes that since mainnet launch in 2020, the network has grown to nearly 3,000 storage providers collectively storing about 1.5 exbibytes of data. That is roughly 1.7 billion gigabytes distributed across thousands of independent nodes, far beyond hobby hardware.

That scale pushes operators toward dedicated servers because the constraints are brutally specific: capacity must be dense and serviceable (drive swaps, predictable failure domains); I/O must be tiered (HDDs for bulk, SSD/NVMe for the hot path); memory must absorb caches and proof workloads without thrashing; and bandwidth must stay consistent when retrieval and gateway traffic spike.

In other words: decentralized cloud storage isn’t “cheap storage from random disks.” It’s a storage appliance—distributed.

Decentralized Blockchain Data Storage

Decentralized blockchain data storage merges a storage layer with cryptographic verification and economic incentives. Instead of trusting a single provider’s dashboard, clients rely on protocol-enforced proofs that data is stored and retrievable over time. Smart contracts or on-chain deals govern payments and penalties, turning “who stored what, when” into a verifiable ledger rather than a marketing claim.

This is where centralized vs decentralized storage stops being ideological and becomes operational. Centralized systems centralize trust; decentralized storage networks distribute trust and enforce it with math. The price is heavier, more continuous work on the underlying hardware: generating proofs, moving data, and surviving audits.

Explaining How Decentralized Cloud Storage Works With Blockchain Technology

Decentralized cloud storage uses a blockchain (or similar consensus layer) to coordinate contracts and incentives among independent storage nodes. Clients make storage deals, nodes periodically prove they still hold the data, and protocol rules handle payments and penalties. The chain anchors “who stored what, when” and automates enforcement—without a single trusted storage vendor.

Under the hood, production nodes behave like compact data centers. You’re not just “hosting files”; you’re running data pipelines: ingest payloads, encode or seal them, generate and submit proofs on schedule, and handle retrieval or gateway traffic. The blockchain is the coordination plane and the arbiter; the hardware has to keep up.
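
The "prove you still hold the data" loop can be illustrated with a toy challenge-response audit. This is a deliberately simplified sketch, not Filecoin's Proof-of-Replication or Proof-of-Spacetime: the function names are hypothetical, and real protocols use succinct cryptographic proofs verified on-chain. It only shows why a fresh random challenge per audit stops a node from replaying an old answer after it has dropped the data.

```python
import hashlib
import secrets


def respond(challenge: bytes, stored: bytes) -> str:
    """Prover side: hash the fresh challenge together with the stored bytes."""
    return hashlib.sha256(challenge + stored).hexdigest()


def precompute_audits(data: bytes, count: int) -> list[tuple[bytes, str]]:
    """Client side: before uploading, prepare one-time (challenge, answer) pairs."""
    audits = []
    for _ in range(count):
        challenge = secrets.token_bytes(16)
        audits.append((challenge, respond(challenge, data)))
    return audits


def audit(pair: tuple[bytes, str], node_storage: bytes) -> bool:
    """Send the challenge to the node and compare its answer to the expected one."""
    challenge, expected = pair
    return secrets.compare_digest(respond(challenge, node_storage), expected)


payload = b"example sector payload" * 1024
audits = precompute_audits(payload, count=3)

honest_ok = audit(audits[0], payload)                  # node kept the data intact
corrupt_ok = audit(audits[1], payload[:-1] + b"\x00")  # node altered the last byte
print(honest_ok, corrupt_ok)
```

Each precomputed pair is used once. In a real network the schedule, the proof format, and the penalties for a failed audit are defined by the protocol rather than by the client.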

Deployment Is Getting Faster—and More OPEX‑Friendly

The industry trend is clear: shorten the time between “we want to join the network” and “we’re producing verified capacity.” In one Filecoin ecosystem example, partners offering predefined storage‑provider hardware and hosted infrastructure cut time‑to‑market from 6–12 months to 6–8 weeks, turning what used to be a full data‑center build into a matter of weeks. That shift reframes growth: you expand incrementally, treat infrastructure as OPEX, and avoid the “buy everything upfront and hope utilization catches up” trap.

A high‑performance dedicated server model fits that pattern. Instead of buying racks, switches, and PDUs, you rent single‑tenant machines in existing facilities, pay monthly, and scale out node fleets as protocol economics justify it.

| Factor | Build (CAPEX) | Rent (OPEX) |
|---|---|---|
| Upfront Cost | Large hardware purchase | Monthly or term spend |
| Deployment Time | Procurement + staging | Faster provisioning |
| Hardware Refresh | You fund and execute upgrades | Provider manages lifecycle |
| Scaling | Stepwise, slower | Add nodes as needed |
| Maintenance | Your ops burden | Shared support and replacement model |
| Best Fit | Stable, long‑run fleets | Fast expansion or protocol pilots |

Decentralized Content Identification and IPFS Storage

IPFS flips the addressing model: you don’t ask for “the file at a location”; you ask for “the file with this identity.” In IPFS terms, that identity is a content identifier (CID)—a label derived from the content itself, not where it lives. CIDs are based on cryptographic hashes, so they verify integrity and don’t leak storage location. This is content addressing: if the content changes, the CID changes.
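
To make content addressing concrete, here is a minimal sketch that derives a CIDv1 string for a single block of bytes using only the Python standard library. It assumes the raw codec, a sha2-256 multihash, and base32 encoding; the helper name `raw_cid_v1` is hypothetical. The CID an IPFS node reports for a real file can differ, because files are usually chunked and wrapped in additional structure before hashing.

```python
import base64
import hashlib


def raw_cid_v1(block: bytes) -> str:
    """Build a CIDv1 string (raw codec, sha2-256, base32) for one block of bytes."""
    digest = hashlib.sha256(block).digest()
    multihash = bytes([0x12, len(digest)]) + digest  # 0x12 = sha2-256, then digest length
    cid_bytes = bytes([0x01, 0x55]) + multihash      # 0x01 = CID version 1, 0x55 = raw codec
    encoded = base64.b32encode(cid_bytes).decode("ascii").lower().rstrip("=")
    return "b" + encoded                             # "b" = multibase prefix for base32


cid_a = raw_cid_v1(b"hello decentralized storage")
cid_b = raw_cid_v1(b"hello decentralized storage!")
print(cid_a)
print(cid_b)
print(cid_a == cid_b)  # False
```

One extra byte in the input yields a completely different identifier, and anyone who fetches the block can recompute the hash to check that they received what they asked for.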

In practical terms, decentralized file storage performance often comes down to where and how you run your IPFS nodes and gateways. Popular content may be cached widely, but someone still has to pin it, serve it to gateways, and move blocks across the network. If those “someones” are running on underpowered hardware with weak connectivity, you’ll see it immediately as slow loads and timeouts.

Bringing IPFS into production usually involves:

- Pinning content on dedicated nodes so it doesn’t disappear when caches evict it.

- Running IPFS gateways that translate CID requests into HTTP responses.

- Using S3 storage and CDNs in front of IPFS to smooth out latency and absorb read bursts.

That’s exactly where dedicated servers shine: you can treat IPFS nodes as persistent origin servers with high‑bandwidth ports, local NVMe caches, and large HDD pools—then rely on a CDN to fan out global distribution.
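
As a small illustration of what a gateway exposes, the sketch below builds the two common URL forms for fetching a CID over HTTP: path style (`/ipfs/<cid>`) and subdomain style (`<cid>.ipfs.<host>`). The host `gateway.example.com`, the placeholder CID, and the helper names are assumptions made for this example, not real endpoints or content.

```python
from urllib.parse import quote


def path_gateway_url(host: str, cid: str, path: str = "") -> str:
    """Path-style gateway URL: https://<host>/ipfs/<cid>/<path>."""
    suffix = "/" + quote(path.lstrip("/")) if path else ""
    return f"https://{host}/ipfs/{cid}{suffix}"


def subdomain_gateway_url(host: str, cid: str, path: str = "") -> str:
    """Subdomain-style gateway URL: https://<cid>.ipfs.<host>/<path>."""
    suffix = "/" + quote(path.lstrip("/")) if path else ""
    return f"https://{cid}.ipfs.{host}{suffix}"


# Placeholder only: shaped like a base32 CIDv1, but it does not refer to real content.
EXAMPLE_CID = "bafkrei" + "a" * 52
GATEWAY_HOST = "gateway.example.com"  # hypothetical gateway you operate

print(path_gateway_url(GATEWAY_HOST, EXAMPLE_CID, "docs/read me.txt"))
print(subdomain_gateway_url(GATEWAY_HOST, EXAMPLE_CID))
```

Subdomain-style URLs give each CID its own web origin, which is why they rely on a case-insensitive encoding such as base32 CIDv1. A CDN placed in front of either form can cache responses aggressively, because the content behind a given CID never changes.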

Hardware Demands in Practice: Build a Node Like a Storage Appliance

The winning architecture is boring in the best way: isolate hot I/O from cold capacity, avoid resource contention, and over‑provision the parts that cause cascading failures.

Decentralized Storage Capacity Is a Disk Design Problem First

Bulk capacity still wants HDDs, but decentralized storage nodes are not just “a pile of disks.” Filecoin’s infrastructure docs talk explicitly about separating sealing and storage roles, and treating bandwidth and deal ingestion as first‑class design variables. The pattern is roughly:

- Fast NVMe for sealing, indices, and hot data.

- Dense HDD arrays for sealed sectors and archival payloads.

- A topology that lets you replace failed drives without jeopardizing proof windows.

Memory Is the Shock Absorber for Proof Workloads and Metadata

Proof systems and high-throughput storage stacks punish tight memory budgets. In Filecoin’s reference architectures, the Lotus miner role is specified at 256 GB RAM, and PoSt worker roles are specified at 128 GB RAM—a blunt signal that “it boots” and “it proves on time” are different targets.

Bandwidth Isn’t Optional in a Decentralized Storage Network

Retrieval, gateway traffic, replication, and client onboarding all convert to sustained egress. Filecoin’s reference architectures explicitly call out bandwidth planning tied to deal sizes, because bandwidth is part of whether data stays usable once it’s stored.
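
A back-of-the-envelope calculation shows how data volume translates into port size. The sketch below is not taken from Filecoin's documentation: the function names and the `efficiency` factor (the share of line rate you expect to sustain after protocol overhead and contention) are assumptions, and the inputs are illustrative.

```python
BITS_PER_TIB = 8 * 2**40      # one tebibyte expressed in bits
SECONDS_PER_DAY = 24 * 60 * 60


def required_gbps(tib_per_day: float, efficiency: float = 1.0) -> float:
    """Sustained port speed (Gbps) needed to move a daily volume of data."""
    bits_per_second = tib_per_day * BITS_PER_TIB / SECONDS_PER_DAY
    return bits_per_second / 1e9 / efficiency


def transfer_hours(tib: float, port_gbps: float, efficiency: float = 1.0) -> float:
    """Hours needed to move a dataset over a port at a given usable fraction."""
    seconds = tib * BITS_PER_TIB / (port_gbps * 1e9 * efficiency)
    return seconds / 3600


# Illustrative inputs: 10 TiB/day of ingest, and a 100 TiB dataset on a 10 Gbps port.
print(f"{required_gbps(10):.2f} Gbps at full line rate")
print(f"{required_gbps(10, efficiency=0.7):.2f} Gbps if only 70% of the port is usable")
print(f"{transfer_hours(100, 10):.1f} hours to move 100 TiB at 10 Gbps")
```

Even a modest 10 TiB per day needs a bit more than 1 Gbps sustained around the clock, before any retrieval traffic is counted. That is why ingest and egress should be sized together rather than treated as separate budgets.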

A snapshot of what “serious” looks like: a Filecoin Foundation profile describes a European storage-provider facility near Amsterdam that contributes 175 PiB of capacity to the network, connects into Amsterdam over dark fiber, and serves 25 storage clients. That is professional-grade infrastructure powering decentralized storage.

Melbicom runs into this reality daily: the customers who succeed in production are the ones who provision for sustained operations, not peak benchmarks. Melbicom’s Web3 hosting focus is built around that exact profile—disk-heavy builds, real memory headroom, and high-bandwidth connectivity tuned for decentralized storage nodes.

Web3 Storage Scales on the Same Basis as Every Other Storage System

Decentralized storage networks are converging on mainstream expectations: consistent retrieval, verifiable durability, and economics that can compete with traditional cloud storage—without collapsing into centralized control. The way you get there is not mysterious: tiered disks, sufficient memory, and bandwidth that can carry both ingest and retrieval without surprises.

Dedicated servers are the pragmatic bridge between decentralized ideals and decentralized cloud storage as a product. They turn node operation into engineering: measurable and scalable. Whether you are running Filecoin storage providers, IPFS gateways, or other decentralized storage network roles, the playbook looks like any serious storage system—just deployed across many independent operators instead of a single cloud.

Practical Infrastructure Recommendations for Decentralized Storage

- Treat nodes as appliances, not pets. Standardize a small number of server “shapes” (e.g., sealing, storage, gateway) so you can scale and replace them predictably.

- Tier storage aggressively. Put proofs, indices, and hot data on NVMe; push sealed or archival sectors to large HDD pools. Avoid mixing hot and cold I/O on the same disks.

- Over‑provision memory for proofs. Aim for RAM budgets that comfortably cover proof processes, OS, observability, and caching—especially on sealing and proving nodes.

- Size bandwidth to your worst‑case retrieval. Design for sustained egress and replication under peaks; use 10+ Gbps ports where retrieval/gateway traffic is critical.

- Plan for regional and provider diversity. Distribute nodes across multiple DCs and regions when economics justify it, to reduce latency and mitigate regional failures.

Deploy Dedicated Nodes for Web3 Storage

Run decentralized storage on single-tenant servers with high bandwidth, NVMe, and dense HDDs. Deploy Filecoin and IPFS nodes fast with top-tier data centers and unmetered ports.