
Why Dedicated Server Hosting Is the Gold Standard for Reliability

Digital presence and revenue now go hand in hand, so “uptime” is no longer simply a technical metric; it is a critical business KPI. When every second of availability counts, infrastructure choices have to be strategic. Hosting options have broadened as the landscape has evolved, yet dedicated server hosting remains the gold standard for uncompromising reliability.

Choose Melbicom: 1,300+ ready-to-go servers, 21 global Tier IV & III data centers, and a 55+ PoP CDN across 6 continents.

Though appealing for many reasons, multi-tenant environments often carry an underlying compromise in predictability: they are exposed to service disruption, degradation, and failure that the dedicated model avoids simply by being isolated. That isolation provides a stable, resilient foundation that is structurally immune to the contention problems shared systems face. To understand fully why dedicated hosting is still considered the gold standard, we need to go beyond a surface-level analysis and examine its architectural and operational mechanics, along with the specific resilience techniques that prevent downtime and help clients meet their availability targets.

Resource Contention: The Invisible Shared Infrastructure Threat

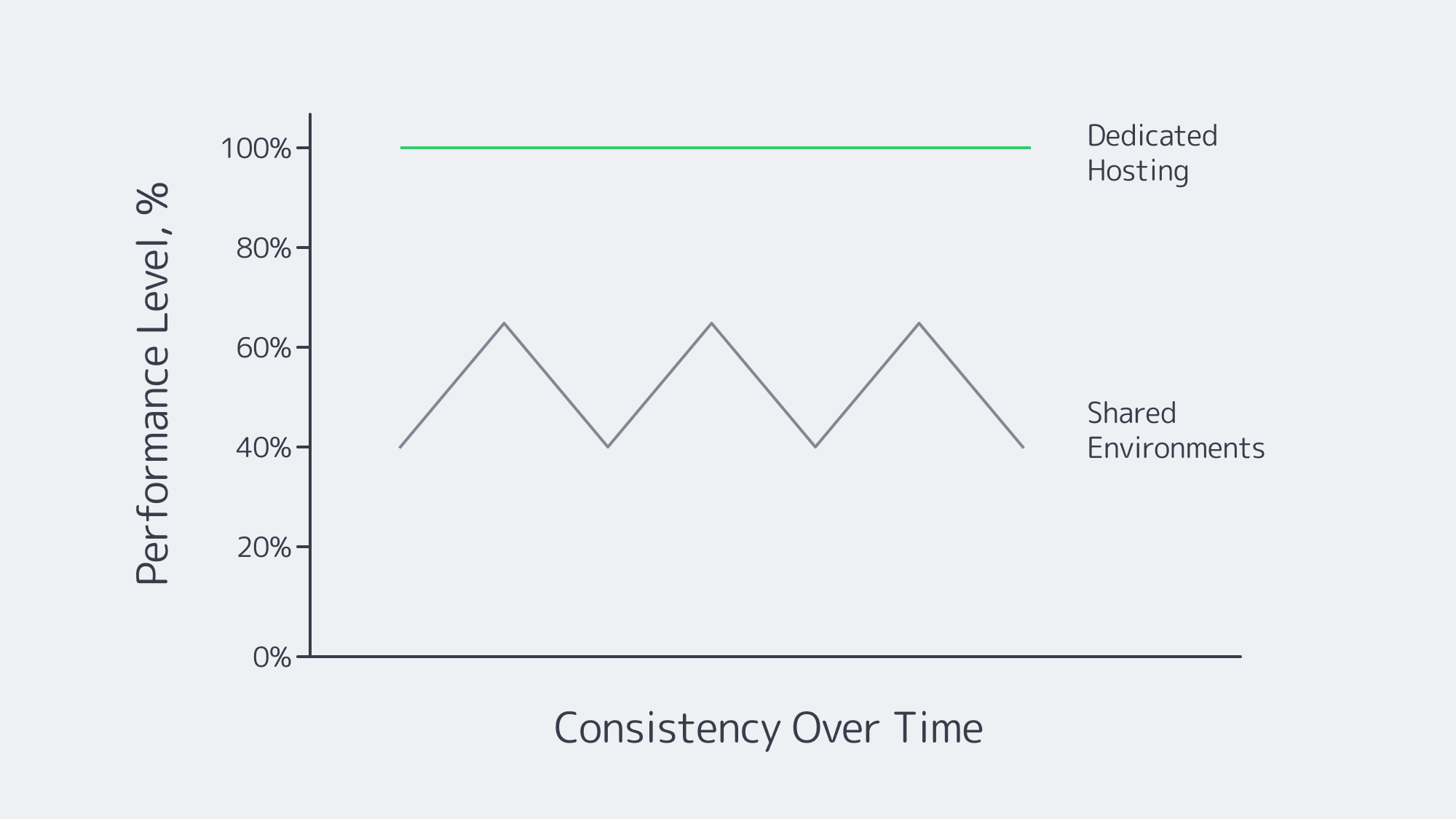

When multiple tenants share a single physical machine in a shared or virtualized hosting environment, they draw from the same resource pool. CPU, RAM, and I/O are essentially a “free-for-all,” which creates the “noisy neighbor” effect: because a high-demand workload consumes resources disproportionately, one tenant’s unpredictable demands can degrade performance for everyone else on the same hardware.

A demanding workload with unpredictable traffic surges, such as an e-commerce site, can monopolize disk I/O during activity spikes, causing performance fluctuations or even halting critical database applications that belong to other businesses. If your enterprise requires high availability and runs mission-critical workloads, these uncontrollable fluctuations are more than an inconvenience; they are a risk you cannot afford to take.

Dedicated hardware eliminates this risk altogether. As the sole tenant, you have every CPU core, every gigabyte of RAM, and the full I/O bandwidth of the disk controllers to yourself, so performance stays consistent and predictable.

Predictability as Foundation

Performance predictability is the foundation of reliability. Applications such as real-time data processing, financial transactions, and the backends of critical SaaS platforms depend on it, and without consistent throughput and latency, disruptions to these time-sensitive operations quickly become costly. Choosing a dedicated server removes the layers of abstraction and contention that shared and virtual environments add, keeping operations smooth.

Virtual machines (VMs) are managed by hypervisors, which introduce a small but measurable overhead, most noticeably on I/O operations. For low-intensity tasks this overhead is more or less negligible, but for I/O-heavy applications it can become a significant performance bottleneck. A dedicated server avoids the problem because the operating system runs directly on the hardware, so there is no “hypervisor tax” to contend with.

In practice, this raw, direct hardware access is why dedicated servers remain the platform of choice wherever even millisecond-level inconsistencies matter, such as high-frequency trading systems, large-scale database clusters, and big data analytics platforms.

Resilient Hardware and Infrastructure: The Bedrock of Uptime

True reliability is engineered in layers, with the physical components as the foundation. A dedicated server gives you direct control over hardware-level resilience features that shared platforms often abstract away, letting you build on the resilience of the data center environment itself.

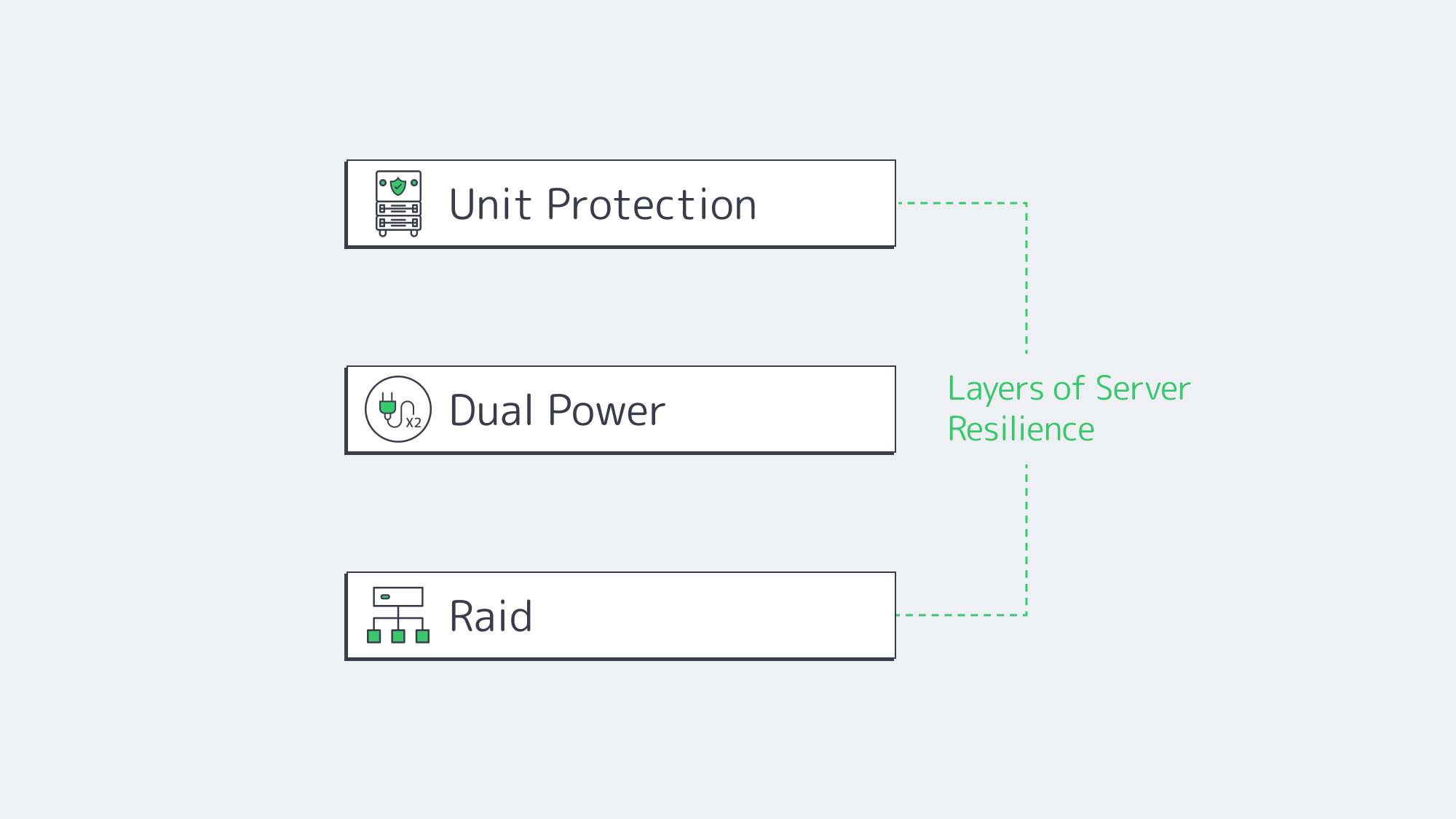

RAID for Data Integrity

Uptime also depends on data integrity. Because a single drive failure can cause catastrophic data loss and downtime, combining multiple physical drives into a single logical unit with a Redundant Array of Independent Disks (RAID) is essential to resilience.

Enterprise-grade dedicated servers use RAID controllers and drives built for 24/7 operation. Two popular configurations are:

- RAID 1 (Mirroring): Writes identical data to two drives; if one fails, the mirror keeps the system online while the failed disk is replaced and the array rebuilds (redundancy, not backup).

- RAID 10 (Stripe of Mirrors): Provides high performance and fault tolerance by combining the speed of striping (RAID 0) with the redundancy of mirroring (RAID 1), ideal for critical databases.
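To make the “stripe of mirrors” idea concrete, here is a minimal Python sketch of how a RAID 10 array might map logical blocks onto physical drives. It is a toy model, not how any particular controller works: it assumes four drives arranged as two mirror pairs and a stripe unit of one block, and the `Raid10` class and its methods are hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Raid10:
    """Toy RAID 10 model: data striped (RAID 0) across mirror pairs (RAID 1)."""

    num_pairs: int = 2                              # assumption: 4 drives = 2 mirror pairs
    failed: set[int] = field(default_factory=set)   # indices of failed drives

    def drives_for_block(self, block: int) -> tuple[int, int]:
        # Striping: consecutive logical blocks rotate across the mirror pairs
        # (assumes a stripe unit of exactly one block).
        pair = block % self.num_pairs
        # Mirroring: both drives in the pair hold an identical copy of the block.
        return (2 * pair, 2 * pair + 1)

    def is_readable(self, block: int) -> bool:
        # A block survives as long as at least one drive in its pair still works.
        return any(d not in self.failed for d in self.drives_for_block(block))

    def array_online(self) -> bool:
        # Block i lands on pair i for i < num_pairs, so checking those blocks
        # checks that every mirror pair still has a working drive.
        return all(self.is_readable(p) for p in range(self.num_pairs))


array = Raid10(num_pairs=2)
print(array.drives_for_block(0))  # (0, 1)
print(array.drives_for_block(1))  # (2, 3)
array.failed.add(1)               # one drive in pair 0 fails
print(array.array_online())       # True: drive 0 still holds pair 0's data
array.failed.add(0)               # its mirror fails too
print(array.array_online())       # False
```

The model also shows the limit of RAID 10’s fault tolerance: the array survives one failure per mirror pair, but losing both drives of the same pair takes it offline. Like RAID 1, it is redundancy, not a backup.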

Because businesses can specify the exact RAID level and hardware, they can tailor their data resilience strategy to the needs of each application.

Preventing Single Points of Failure Through Power and Networking

A single failed component, whether a power supply unit (PSU) or a network link, can take an otherwise healthy server offline. Enterprise-grade dedicated servers mitigate this risk with redundancy:

- Dual Power Feeds: With two PSUs, each connected to an independent power distribution unit (PDU), the server stays powered even if an entire power circuit fails.

- Redundant Networking: Configuring multiple network interface cards (NICs) keeps links available: if one card, cable, or switch port fails, traffic is rerouted seamlessly through the remaining active links.
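Redundancy pays off because the system only goes down when every redundant path fails at the same time. The rough Python sketch below works through that arithmetic. It assumes failures are independent and uses a hypothetical 99.9% availability figure for a single power or network path; correlated failures, such as a facility-wide outage, break the independence assumption and make real-world results worse.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; ignores leap years


def redundant_availability(component_availability: float, copies: int) -> float:
    """Availability when any one of `copies` redundant paths keeps the system up.

    Assumes failures are independent, so the chance that all paths are down
    at once is the single-path unavailability raised to the power `copies`.
    """
    all_down = (1.0 - component_availability) ** copies
    return 1.0 - all_down


def downtime_minutes_per_year(availability: float) -> float:
    # Expected minutes per year during which the system is unavailable.
    return (1.0 - availability) * MINUTES_PER_YEAR


single = 0.999  # hypothetical availability of one PSU or NIC path
dual = redundant_availability(single, copies=2)
print(f"{downtime_minutes_per_year(single):.1f} min/yr")  # 525.6 min/yr
print(f"{downtime_minutes_per_year(dual):.2f} min/yr")    # 0.53 min/yr
```

Under these assumptions, a second path cuts expected downtime from roughly nine hours a year to well under a minute.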

These redundancy features are key to architecting truly reliable infrastructure, and Melbicom’s servers provide them in our Tier III and Tier IV certified facilities across 21 global locations.

Continuous High Availability Architecture

To reach the upper echelon of reliability, the focus has to shift from a single server to the resilience of the architecture as a whole. High-availability (HA) clusters built from multiple dedicated servers keep a service online even if an entire server fails.

HA clusters typically follow one of two models, active-passive or active-active:

Active-Passive: A primary server actively handles traffic and tasks, while a second, identical passive server stands by and monitors the primary’s health. If the primary fails, the passive server automatically takes over, assuming its IP address and functions. This makes the model a common choice for databases.
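The failover decision in the active-passive model can be sketched as a small state machine. This is a simplified illustration under stated assumptions: each health check is reduced to a single boolean, the `PassiveNode` class and its `miss_threshold` parameter are hypothetical, and taking over the IP address is represented only by a flag. In practice, this job is usually handled by cluster software such as Keepalived or Pacemaker.

```python
from dataclasses import dataclass


@dataclass
class PassiveNode:
    """Toy standby node that promotes itself after consecutive missed heartbeats."""

    miss_threshold: int = 3   # hypothetical: consecutive misses tolerated before failover
    misses: int = 0
    role: str = "passive"
    owns_virtual_ip: bool = False

    def on_heartbeat_check(self, primary_alive: bool) -> None:
        if self.role == "active":
            return                  # already promoted; nothing left to monitor
        if primary_alive:
            self.misses = 0         # a healthy heartbeat resets the counter
            return
        self.misses += 1
        if self.misses >= self.miss_threshold:
            self.promote()

    def promote(self) -> None:
        # In a real cluster, this step would move the shared IP address and
        # start the service; here it only flips the node's state.
        self.role = "active"
        self.owns_virtual_ip = True


standby = PassiveNode(miss_threshold=3)
for alive in [True, False, True, False, False, False]:
    standby.on_heartbeat_check(alive)
print(standby.role, standby.owns_virtual_ip)  # active True
```

Requiring several consecutive misses, rather than failing over on the first one, keeps a single dropped heartbeat from triggering a needless switch; in the example, the isolated miss is cleared by the next healthy check. Production setups also have to guard against split-brain, where both nodes believe they are the primary.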

Active-Active: In this model, all servers are online and actively processing traffic, with requests distributed among them by a load balancer. If the load balancer detects a server failure, it removes that server from the distribution pool and redirects its traffic and tasks to the remaining servers. This keeps availability high and makes scaling easier.
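The active-active behavior, in which the load balancer drops a failed server from the pool, can be sketched as health-aware round-robin. This is a minimal illustration under assumptions: the `LoadBalancer` class and the server names are hypothetical, health checks are modeled as explicit `mark_down` and `mark_up` calls, and real load balancers offer many other distribution strategies besides round-robin.

```python
from __future__ import annotations


class LoadBalancer:
    """Toy active-active balancer: round-robin over servers that pass health checks."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)
        self.healthy = set(servers)
        self._next = 0  # index of the next server to consider

    def mark_down(self, server: str) -> None:
        # A failed health check removes the server from the distribution pool.
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        # A recovered server rejoins the pool.
        if server in self.servers:
            self.healthy.add(server)

    def pick(self) -> str:
        # Walk the server list round-robin, skipping any unhealthy server.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


lb = LoadBalancer(["app-1", "app-2", "app-3"])  # hypothetical server names
print([lb.pick() for _ in range(3)])  # ['app-1', 'app-2', 'app-3']
lb.mark_down("app-2")
print([lb.pick() for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
```

Because every server carries live traffic, losing one only shrinks the pool, and adding capacity is a matter of adding another healthy server to the list.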

These sophisticated architectures provide the resilience needed for consistently high performance, but they require the deep hardware and network control that dedicated server hosting provides.

Building the Future of Reliable Infrastructure with Melbicom

For operations that depend on unwavering uptime and predictable performance, the strategic advantages of dedicated server hosting are clear, and the architectural purity that isolation brings makes it far more than a legacy choice. A dedicated server eliminates resource contention and gives granular control over hardware-level redundancy. That ground-level access also makes it possible to build sophisticated HA clusters that deliver a level of reliability shared platforms can’t match. As the world becomes increasingly digital and application demands grow, downtime will only become more costly for operators, which makes isolation and control more important than ever.

Deploy Your Dedicated Server Today

Choose from 1,300+ ready-to-deploy dedicated configurations across our Tier III & IV data centers worldwide.