Designing São Paulo Edge for Commerce and OTT

Brazil is already a high-scale digital market: more than 84% of Brazilians were online in 2024, and São Paulo’s IX.br exchange has recorded peak traffic above 31 Tbit/s. That density is the opportunity—and the trap. At this scale, every cache miss becomes an infra event.

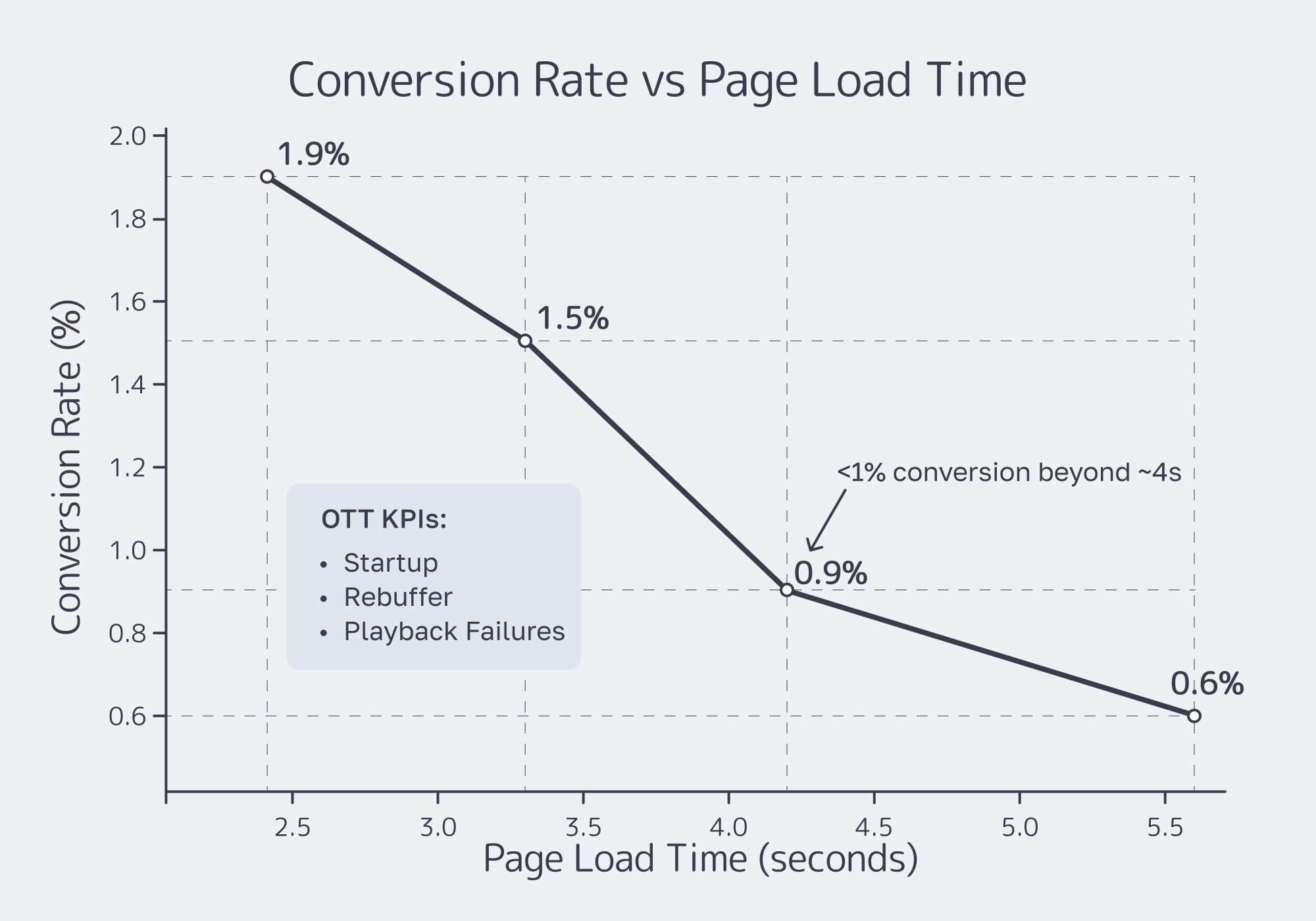

The urgency isn’t hypothetical. 53% of mobile visitors abandon if a page takes over 3 seconds to load, and speed research often cites conversion losses around 5–7% per additional second. Meanwhile, Latin America’s e-commerce market is rising 12.2% YoY and projected to top $215B by 2026, with 84% of online purchases happening on smartphones. If the storefront or stream feels slow in São Paulo, it’s slow everywhere that matters.

This is where a São Paulo CDN PoP stops being a checkbox and becomes an operational design: cache rules tuned for commerce and OTT, an origin shield in São Paulo, and failover to secondary LATAM PoPs, all measured with KPIs and A/B tests, not vibes.

How to Configure Cache Rules for São Paulo

Cache rules for a CDN in São Paulo should maximize edge hits without corrupting user state: cache static assets and VOD segments hard, micro-cache shared HTML where safe, keep carts/checkout uncacheable, and treat streaming manifests as near-real-time. The target is fewer long-haul origin trips, faster loads, and predictable behavior during spikes.

Cache what’s identical, never what’s personal

Start with the repeatable wins:

- Static assets (images/CSS/JS): Long TTL (days+). Use versioned filenames or cache-busting params so deploys invalidate naturally.

- Shared HTML: Product/category pages for anonymous users can take micro-caching (seconds to a few minutes) to absorb bursts without serving stale content for long.

- Personal flows: Cart, checkout, account, and strict price endpoints should be pass-through. Keep TLS termination at the edge so Brazilian users still get fast handshakes.

One rule: cache by content truth. If most users would see the same payload, edge-cache it. If it’s identity-, inventory-, or payment-sensitive, don’t.
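
The rule above can be sketched as a small decision function. This is an illustration under stated assumptions, not Melbicom's CDN configuration: the path prefixes, file extensions, and TTL values are hypothetical, and a real CDN would usually express the same logic in its own rule syntax.

```python
# Hypothetical path prefixes and extensions; adjust to the real storefront.
PERSONAL_PREFIXES = ("/cart", "/checkout", "/account", "/api/price")
STATIC_SUFFIXES = (".css", ".js", ".png", ".jpg", ".webp", ".svg", ".woff2")


def cache_control(path: str, has_session_cookie: bool) -> str:
    """Return a Cache-Control value for one storefront request."""
    # Personal flows are never stored at the edge.
    if path.startswith(PERSONAL_PREFIXES):
        return "private, no-store"
    # Versioned static assets can be cached for a long time (30 days here).
    if path.lower().endswith(STATIC_SUFFIXES):
        return "public, max-age=2592000, immutable"
    # HTML for a logged-in user may differ per user, so pass it through.
    if has_session_cookie:
        return "private, no-store"
    # Anonymous shared HTML: micro-cache at the edge only (60 s),
    # and let the edge serve a stale copy briefly while it refreshes.
    return "public, max-age=0, s-maxage=60, stale-while-revalidate=30"
```

For example, `cache_control("/product/123", False)` yields the micro-cache policy, while the same path with a session cookie is passed through.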

OTT: long-lived segments, short-lived manifests

- Segments (HLS/DASH): Cache aggressively. VOD segments can be long-lived; live segments can be cached briefly and will age out naturally.

- Manifests (.m3u8/MPD): Cache very short or require revalidation so viewers don’t drift behind during live events.

- Startup variance: Streaming research shows churn climbs once startup delay passes ~2 seconds, and each added second can lose ~5.8% more viewers—so treat manifest freshness as a reliability feature, not a tuning detail.

Melbicom’s CDN is built for this “segments are cache, manifests are control” split, and the São Paulo PoP is the right place to enforce it.
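
One way to picture the split is a function that picks a cache policy from the file type and whether the stream is live. The extensions and TTL values below are assumptions chosen for illustration, not settings taken from Melbicom's CDN.

```python
import posixpath
from urllib.parse import urlparse

MANIFEST_EXTENSIONS = {".m3u8", ".mpd"}
SEGMENT_EXTENSIONS = {".ts", ".m4s", ".mp4"}


def ott_cache_control(url: str, is_live: bool) -> str:
    """Pick a Cache-Control value for a streaming object (illustrative TTLs)."""
    # Look only at the URL path so query strings (tokens) do not affect matching.
    extension = posixpath.splitext(urlparse(url).path)[1].lower()
    if extension in MANIFEST_EXTENSIONS:
        # Manifests are the control plane: keep them very fresh, especially live.
        return "public, max-age=2" if is_live else "public, max-age=60"
    if extension in SEGMENT_EXTENSIONS:
        # Segments are the cacheable bulk: brief for live, long-lived for VOD.
        if is_live:
            return "public, max-age=30"
        return "public, max-age=31536000, immutable"
    # Unknown object types fall back to the conservative choice.
    return "no-store"
```

Keeping the live manifest TTL shorter than the segment duration is the usual design goal, so a player never polls an index that is more than one segment behind.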

São Paulo Caching Patterns

| Content type | Examples | São Paulo caching strategy |
|---|---|---|
| Static assets | Product images, CSS/JS bundles | Long TTL; cache-bust via versioned URLs; enable modern delivery (HTTP/2, compression) where available. |
| Shared HTML | Home/product/category pages (anonymous) | Micro-cache; use stale-while-revalidate to hide origin latency while keeping content fresh. |
| Personal flows | Cart/checkout/account | No-cache / pass-through; still terminate TLS at edge for faster handshakes. |
| Video segments | HLS/DASH chunks | Cache hard (VOD) and cache briefly (live); fill São Paulo edge fast via pull or prefetch. |
| Manifests | .m3u8 / MPD | Very short TTL or revalidate; treat as “real-time index,” not a static file. |

Where to Integrate Origin Shields and Dedicated Servers

Put origin shields in São Paulo to collapse cache misses into a single upstream fetch, then pair that with a local dedicated server origin to eliminate cross-continent round trips for traffic you can’t cache. Design failover so Brazil can swing to secondary LATAM PoPs and backup origins automatically—without turning an edge hiccup into a full outage.

Dedicated origin shield in São Paulo: one miss, not a stampede

An origin shield is a mid-tier cache layer: edge PoPs check the shield first, and only the shield hits the origin on a miss. In a LATAM topology, São Paulo is the practical shield location because it’s the region’s aggregation point and Brazil’s main interconnect.

Two high-yield patterns:

- Origin outside Brazil → Shield in São Paulo: Regional PoPs pull through São Paulo, so the expensive international fetch happens once.

- Origin in São Paulo → Shield still helps: The shield reduces duplicate origin fetches during sudden spikes (product drops, breaking-news live streams).
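
The "one miss, not a stampede" behavior is often called request coalescing. The toy class below sketches the idea with threads: concurrent misses for the same key wait on a single origin fetch. It is a simplified model (no TTLs, no eviction, in-memory only), and `ShieldCache` and `fetch_origin` are hypothetical names, not part of any CDN API.

```python
import threading
from typing import Callable, Dict


class ShieldCache:
    """Toy shield: concurrent misses for one key share a single origin fetch."""

    def __init__(self, fetch_origin: Callable[[str], bytes]) -> None:
        self._fetch_origin = fetch_origin
        self._store: Dict[str, bytes] = {}
        self._inflight: Dict[str, threading.Event] = {}
        self._lock = threading.Lock()

    def get(self, key: str) -> bytes:
        while True:
            with self._lock:
                if key in self._store:
                    return self._store[key]  # cache hit
                event = self._inflight.get(key)
                leader = event is None
                if leader:
                    # First miss for this key: this caller fetches for everyone.
                    event = threading.Event()
                    self._inflight[key] = event
            if leader:
                try:
                    body = self._fetch_origin(key)
                    with self._lock:
                        self._store[key] = body
                    return body
                finally:
                    # Wake waiters whether the fetch succeeded or failed.
                    with self._lock:
                        del self._inflight[key]
                    event.set()
            # Follower: wait for the leader, then re-check the store.
            # If the leader failed, one follower becomes the new leader.
            event.wait()
```

With twenty threads requesting the same cold segment, the origin function runs once and every caller receives the same body.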

Local dedicated server origin: when cache misses must be fast

Even the best CDN service in São Paulo won’t cache everything: pricing APIs, payment flows, entitlements, DRM, session state. That traffic should not be crossing continents.

Melbicom’s CDN is already operating across LATAM PoPs, and dedicated servers in São Paulo are coming soon—with capacity reservations open now. Melbicom also supports 1,400+ ready configurations across 21 Tier III/IV data center locations, with custom builds in 3–5 days.

The integration path is straightforward:

- Run origin services (web app, API gateway, streaming packager/auth) on a São Paulo dedicated server.

- Front it with the São Paulo CDN PoP for caching and TLS termination.

- Use São Paulo as the shield for other LATAM edges, keeping cache fill close.

For teams that want tighter routing control, BGP sessions can fit the same design—useful when standardizing delivery across regions without giving up autonomy. Melbicom empowers teams to deploy, customize, and scale infrastructure where customers actually are, not where a provider’s template says you should operate.

Failover strategy: secondary LATAM PoPs and backup origins

A single-PoP strategy is a fragile one. Build failover at two layers:

- Origin failover: Configure a secondary origin and health checks so the CDN switches automatically when the São Paulo origin is degraded.

- Edge failover: Ensure users can be routed to the next-closest LATAM PoP when São Paulo has issues, so Brazil stays online even if performance temporarily degrades. Melbicom’s CDN PoPs across Brazil, Chile, Colombia, Argentina, Peru, and Mexico make that secondary-path planning practical today.

Keep the failover path warm: replicate critical assets, keep config parity, and run failure drills during low-risk windows.
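
Origin failover logic can be sketched as a priority list with health-check counters. The failure threshold and origin names here are assumptions for illustration; a real CDN exposes this as origin-group or health-check settings rather than application code.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

FAILURE_THRESHOLD = 3  # assumed: consecutive failed checks before an origin is skipped


@dataclass
class Origin:
    name: str
    priority: int  # lower number = preferred
    consecutive_failures: int = 0


def record_health_check(origin: Origin, ok: bool) -> None:
    """Reset the counter on success, increment it on failure."""
    origin.consecutive_failures = 0 if ok else origin.consecutive_failures + 1


def is_healthy(origin: Origin) -> bool:
    return origin.consecutive_failures < FAILURE_THRESHOLD


def pick_origin(origins: Iterable[Origin]) -> Optional[Origin]:
    """Return the healthy origin with the best priority, or None if all are down."""
    healthy = [o for o in origins if is_healthy(o)]
    return min(healthy, key=lambda o: o.priority) if healthy else None
```

Requiring several consecutive failures before switching avoids flapping on a single slow health check, and resetting on the first success lets traffic return to São Paulo once it recovers.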

Which Metrics Measure E-Commerce and OTT Success

Success in a São Paulo CDN rollout is measurable: for commerce, track Brazilian-user TTFB/LCP plus conversion and bounce; for OTT, track startup time, rebuffering, and playback failure rates. Run an A/B plan that splits Brazil traffic between old and new delivery paths, uses real-user telemetry, and ties edge changes to business outcomes.

E-Commerce KPIs: speed is the input, conversion is the output

Measure from Brazil, not from your HQ:

- TTFB + LCP: Validate that São Paulo caching and edge TLS termination are working.

- Conversion and bounce: Compare both between delivery paths. Retail benchmarks report that a 1-second speedup can lift conversion, so these numbers show whether the speed gains pay off.

- Checkout completion: Track cart abandonment and payment-step drop-offs; Brazil’s PIX usage makes “fast checkout” table stakes.

OTT KPIs: time-to-first-frame and “did it break?”

For streaming, track quality-of-experience, not just origin bandwidth:

- Video startup time: Measure p50/p95 time-to-first-frame and correlate to abandonment.

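- Rebuffering ratio and stall events: Track stalled time as a share of viewing time, plus the number of stalls per session. To the viewer, the “buffer circle” is the product.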

- Playback failures: Timeouts and errors during spikes are where delivery architecture shows its seams.
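
Two of these KPIs reduce to simple ratios over player session records. The sketch below assumes a hypothetical `Session` record and one common definition of rebuffering ratio (stalled time divided by stalled plus played time); player analytics tools may define it slightly differently.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Session:
    watch_s: float  # seconds of video actually played
    stall_s: float  # seconds spent rebuffering after startup
    failed: bool    # playback ended in a fatal error or timeout


def rebuffering_ratio(sessions: Sequence[Session]) -> float:
    """Stalled time as a share of total session time (played + stalled)."""
    played = sum(s.watch_s for s in sessions)
    stalled = sum(s.stall_s for s in sessions)
    total = played + stalled
    return stalled / total if total else 0.0


def playback_failure_rate(sessions: Sequence[Session]) -> float:
    """Share of sessions that ended in a fatal error or timeout."""
    if not sessions:
        return 0.0
    return sum(1 for s in sessions if s.failed) / len(sessions)
```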

A/B testing plan: isolate the edge variable

Keep it simple enough to trust:

- Split Brazil traffic: 50/50 between the existing path and the new São Paulo edge configuration.

- Hold everything else constant: same release, same ABR ladder, same origin logic.

- Run long enough to cover peaks: promotional days for commerce and live-event windows for OTT.

- Evaluate by percentile: Compare p50/p95 load and startup times, then map the changes to conversion and playback success.
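
A stable 50/50 split is usually done by hashing a user or device identifier, so the same visitor stays on the same delivery path for the whole test. The experiment name and variant labels below are hypothetical.

```python
import hashlib


def assign_variant(user_id: str,
                   experiment: str = "sp-edge-v1",
                   treatment_share: float = 0.5) -> str:
    """Deterministically map a user to a delivery path."""
    # Salting with the experiment name keeps buckets independent across tests.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Reduce the hash to one of 10,000 integer buckets.
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    if bucket < int(treatment_share * 10_000):
        return "sao-paulo-edge"
    return "existing-path"
```

Because the assignment depends only on the identifier and the experiment name, it can be computed at the edge or in the application without shared state.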

Minimum dashboard:

- p50/p95 LCP and TTFB (Brazil)

- Cache hit ratio by content class (assets vs HTML vs video)

- p50/p95 startup time and rebuffering ratio

- Conversion rate and playback success rate
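
The percentile and hit-ratio panels can be computed from raw samples and edge logs with a few lines. This sketch uses the nearest-rank percentile method and assumes log rows have already been reduced to `(content_class, cache_status)` pairs with `"HIT"` marking an edge hit; real log formats vary by CDN.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Sequence, Tuple


def percentile(values: Sequence[float], p: int) -> float:
    """Nearest-rank percentile for an integer p between 1 and 100."""
    if not values:
        raise ValueError("percentile() needs at least one sample")
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def hit_ratio_by_class(rows: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Cache hit ratio per content class (assets, html, video, ...)."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for content_class, cache_status in rows:
        totals[content_class] += 1
        if cache_status == "HIT":
            hits[content_class] += 1
    return {c: hits[c] / totals[c] for c in totals}
```

Reporting hit ratio per class matters because a high overall number can hide a poor HTML or manifest hit ratio behind a large volume of static asset hits.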

Conclusion: CDN São Paulo Rollout Checklist

A São Paulo edge only pays off when it’s treated as a system:

- Define a cache taxonomy first: treat assets, shared HTML, private flows, segments, and manifests as different products—with explicit TTL/revalidation targets and separate hit-ratio goals.

- Make São Paulo a tier, not a point: place the origin shield there early so other LATAM edges pull through it and a miss becomes one upstream fetch, not a stampede.

- Localize the “uncacheable” path: move checkout/auth/DRM/session APIs to a São Paulo origin so personalized calls and cache misses don’t cross oceans.

- Make failover boring on purpose: use health-checked origin pools plus edge fallback to secondary LATAM PoPs, then rehearse the switch before campaigns and live events.

- Gate rollout on A/B evidence: require percentile improvements (p95 LCP/startup) and stable error rates before pushing the new São Paulo config to 100% of Brazil traffic.
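
The rollout gate in the last item can be written down as an explicit check. The thresholds here (a 5% p95 improvement and at most 0.1 percentage points of extra errors) are placeholder assumptions, and a production gate would also test for statistical significance before acting.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    p95_lcp_ms: float
    p95_startup_ms: float
    error_rate: float  # fraction of requests or playbacks that failed


def should_promote(control: VariantStats,
                   treatment: VariantStats,
                   min_improvement: float = 0.05,
                   max_error_increase: float = 0.001) -> bool:
    """Promote only if both p95 metrics improve and errors stay stable."""
    target = 1 - min_improvement
    lcp_ok = treatment.p95_lcp_ms <= control.p95_lcp_ms * target
    startup_ok = treatment.p95_startup_ms <= control.p95_startup_ms * target
    errors_ok = treatment.error_rate <= control.error_rate + max_error_increase
    return lcp_ok and startup_ok and errors_ok
```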

Traffic density keeps rising, so “we’ll fix performance later” gets more expensive. Ship the edge, validate with A/B results, then scale the design across LATAM.

Reserve São Paulo Dedicated Servers

Capacity reservations for Brazil are open. Pair local origins with our São Paulo CDN PoP to cut latency for e-commerce and OTT traffic across LATAM.