
Guide to Deploying Bare Metal in Brazil for AI/ML Workloads

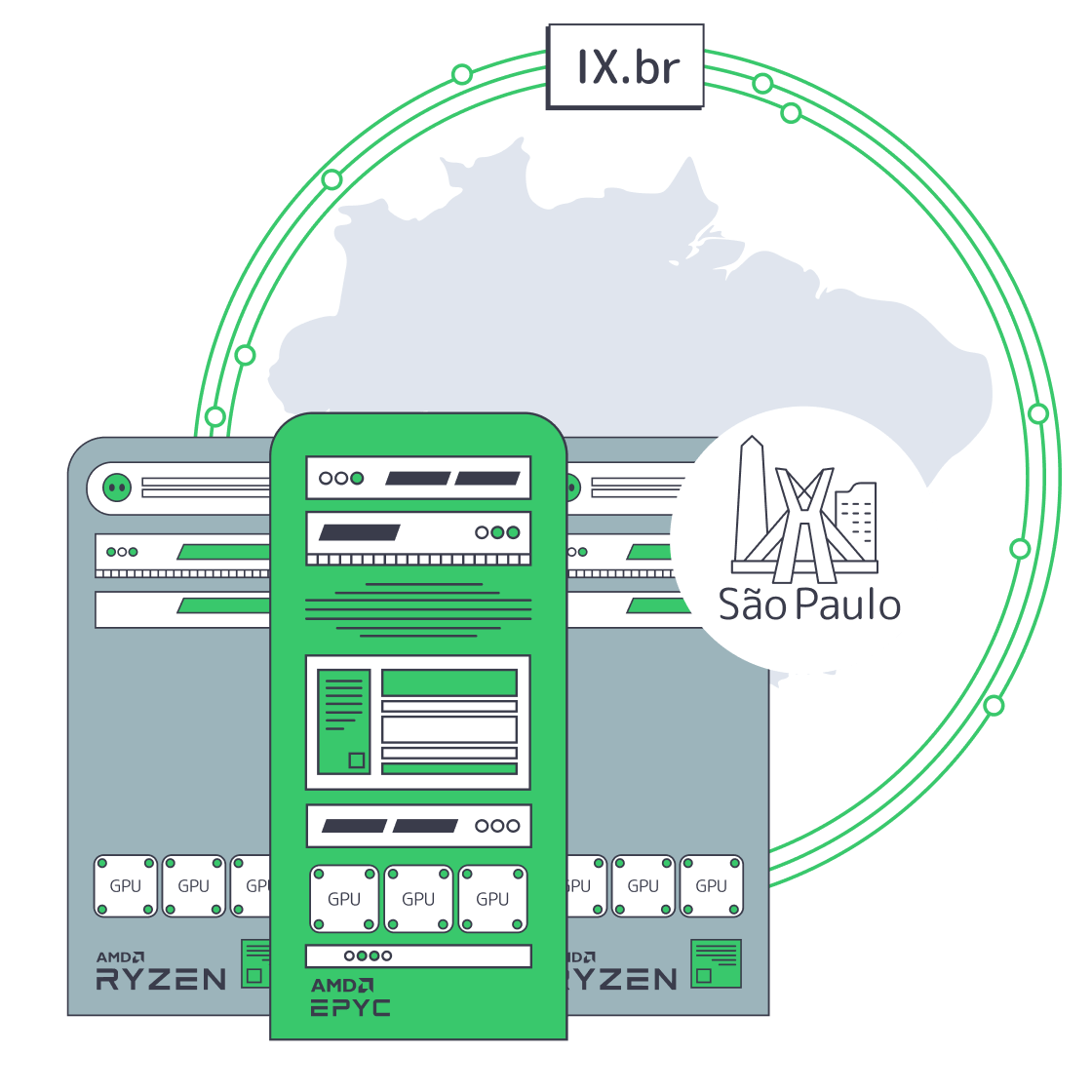

The IX.br exchange in São Paulo has become one of the world’s densest interconnection hubs, with record traffic peaks and growing demand for interconnectivity in the area. That concentration of networks and fiber is an advantage for AI/ML teams able to deploy modern bare metal in Brazil. With an origin this close to the exchange, you gain lower latency and more deterministic throughput, and regional compliance and privacy obligations become far easier to prove.


Why Deploy AI/ML on São Paulo Bare Metal?

The data center market in Brazil is expanding rapidly; it is currently valued at US$3.4 billion and projected to reach US$5.96 billion within the next few years, according to investment research. AI‑heavy workloads are driving the need to compute closer to Brazil’s network core.

Surveys suggest this will only accelerate: most organizations in Brazil plan to increase AI investment, with spending on AI and generative AI projects predicted to cross BRL 13 billion. These systems will benefit from local, high‑performance infrastructure.

The advantages of placing AI on bare metal in Brazil:

- Full hardware performance for AI math: Bare metal means no hypervisor overhead and sole access to CPU cores, RAM, and attached GPUs, which is better for training runs and high‑QPS inference.

- Proximity to IX.br São Paulo: Round-trip times are significantly reduced by having direct paths to Brazilian eyeball networks, and streams see much lower jitter, which is also a benefit for fraud scoring and interactive AI features.

- Provable data residency: If data is kept within Brazil, then LGPD compliance and audits are far simpler, reducing the risk of fines that can reach 2% of Brazilian revenue with a BRL 50 million cap per infraction.

- Predictability and customization: With full control over OS, drivers such as CUDA/ROCm, frameworks, and network layout, you can tune for specific model stacks and data pipelines without costs getting out of control.


Which CPUs/GPUs to Choose for “AI‑Ready” São Paulo Server

For a dedicated server in Brazil, AMD EPYC and AMD Ryzen are the workhorses you need. EPYC offers high core counts, large caches, and robust memory bandwidth, which suits data preprocessing, vector search, and distributed training coordination. Ryzen’s high clock speeds boost latency‑sensitive logic and are ideal for smaller inference models. Either can be paired with optional GPUs to support deep learning.

AI integrations and operations are only expected to grow, and as they do, capacity requirements will keep rising. Training compute for notable models has been doubling nearly every 6 months, a macro trend that reinforces the need to design nodes with plenty of headroom and the ability to scale horizontally.
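To make the headroom argument concrete, here is a minimal sketch of what a 6-month doubling period implies over typical planning horizons. The function name and the chosen horizons are illustrative assumptions, and the trend describes notable models in general, so your own workload may grow faster or slower.

```python
def projected_compute_multiplier(months: float,
                                 doubling_period_months: float = 6.0) -> float:
    """Return the growth factor after `months`, assuming steady exponential
    doubling every `doubling_period_months` (an assumption, not a forecast)."""
    if doubling_period_months <= 0:
        raise ValueError("doubling_period_months must be positive")
    return 2.0 ** (months / doubling_period_months)


# Illustrative planning horizons (hypothetical, pick your own).
for horizon in (6, 12, 24, 36):
    factor = projected_compute_multiplier(horizon)
    print(f"{horizon:>2} months: about {factor:.0f}x today's compute")
```

Under that assumption, a node sized exactly for today's demand is outgrown several times over within a two-year hardware cycle, which is why horizontal scaling matters more than a single oversized box.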

How Local Interconnection Slashes Latency for AI Workloads

Serving Brazil from afar over long-distance paths adds latency and lengthens round-trip times. AI services are demanding and need shorter, more predictable paths for user requests and real‑time data feeds. By deploying in São Paulo and using direct links through the IX.br exchange, you move inference closer to last‑mile ISPs and major content networks. IX.br has reported aggregate peaks in the 31–40 Tb/s range, with São Paulo alone regularly exceeding 22 Tb/s, making it a global leader in both volume and participation.
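Distance sets a hard floor on round-trip time, which a rough calculation can illustrate. The sketch below estimates the minimum RTT imposed by fiber propagation alone; the refractive index is a typical value for silica fiber, and the two path lengths are hypothetical examples rather than measured routes. Real RTTs are higher because of routing detours, queuing, and equipment delay.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.47       # assumption: typical silica fiber


def min_fiber_rtt_ms(path_km: float) -> float:
    """Lower bound on round-trip time over `path_km` of fiber, in milliseconds.

    Counts propagation delay only; it ignores queuing, routing, and
    processing, so measured RTT will always be larger.
    """
    if path_km < 0:
        raise ValueError("path_km must be non-negative")
    speed_in_fiber_km_s = SPEED_OF_LIGHT_KM_S / FIBER_REFRACTIVE_INDEX
    return 2 * path_km / speed_in_fiber_km_s * 1000


# Hypothetical path lengths for illustration only.
for label, km in (("in-metro origin", 100), ("intercontinental origin", 7000)):
    print(f"{label:>24}: at least {min_fiber_rtt_ms(km):.1f} ms RTT")
```

A gap of tens of milliseconds per round trip compounds quickly for interactive features that make several sequential calls per request.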

Edge‑adjacent assets such as front‑end bundles, embeddings, and video tiles can be cached and delivered through Melbicom’s CDN across 55+ PoPs, including locations in South America, while core inference runs in São Paulo. This hybrid approach reduces latency without relocating the model.

Where a Brazilian dedicated server wins

Hosting in Brazil reduces cross-border hops and helps maintain stable bandwidth during peaks, which is especially beneficial if your pipeline ingests Brazilian transaction, clickstream, or sensor data. A local origin noticeably improves perceived responsiveness, especially for recommendation engines, fintech risk scoring, live‑ops analytics, and speech systems.

The Privacy and Sovereignty Benefits of Bare Metal in Brazil

When workloads are privacy‑sensitive, compliance can be more complex, but keeping your processing and storage in the country and under your direct control is the perfect built-in solution. With local bare metal deployment, you isolate your data regionally, and as your models are on dedicated hardware, you have sole tenancy with transparent visibility into where copies reside. You avoid the challenges of cross-border transfer, making LGPD requirements for deletion and audits far less complicated while reducing the risk of LGPD fines that can cost up to 2% of Brazilian revenue, capped at BRL 50 million per violation, as well as non‑monetary sanctions like processing suspensions.
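The fine ceiling described above is simple arithmetic: the lower of 2% of Brazilian revenue and the BRL 50 million cap. The sketch below encodes only those two figures from this article; the function name and the revenue values are hypothetical, and actual penalties depend on the regulator's assessment of each case.

```python
def lgpd_max_fine_brl(brazil_revenue_brl: float,
                      rate: float = 0.02,
                      cap_brl: float = 50_000_000.0) -> float:
    """Upper bound on a single-infraction fine: `rate` of revenue in Brazil,
    limited to `cap_brl`. Simplified illustration of the two figures cited."""
    if brazil_revenue_brl < 0:
        raise ValueError("revenue must be non-negative")
    return min(brazil_revenue_brl * rate, cap_brl)


# Hypothetical revenue figures for illustration.
for revenue in (100_000_000, 1_000_000_000, 5_000_000_000):
    print(f"revenue BRL {revenue:>13,}: "
          f"ceiling BRL {lgpd_max_fine_brl(revenue):,.0f}")
```

The cap applies per infraction, so exposure across several violations can exceed a single ceiling.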

Monitoring and Capacity Planning: Instrumentation and Scaling

Beyond design, you need to keep a performant node healthy and right‑sized. That means doing the following:

- Instrumenting from day one: High‑frequency metrics for CPU, GPU, memory, NVMe I/O, and NICs should be collected, and you need to ingest application traces and logs into a searchable store. That way, you can identify GPU throttling, data‑loader stalls, and queue build‑ups before they hit SLOs.

- Applying AIOps: Employ anomaly detection on latency, throughput, GPU memory, and temperature to surface subtle degradations such as drift‑driven latency creep or a slow memory leak.

- Forecasting capacity on leading indicators: Track sustained GPU utilization, p95/p99 latency, request queue depths, and feature‑store I/O. When critical resources consistently hold above roughly 70–80% during peaks, plan to scale up (add GPUs to a chassis) or scale out (add nodes behind a model router). Design for modular growth, since training compute for notable models has been doubling roughly every 6 months.

- Operational discipline: Kernel/driver updates should be scheduled, SMART/NVMe health should be monitored, and replacements should be pre‑staged when error rates tick up.
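The capacity guideline above can be turned into a simple check. The sketch below is a minimal illustration, not a monitoring product: it computes a nearest-rank percentile for latency and flags a resource when most peak-window samples sit at or above a utilization threshold. The 0.75 threshold is the midpoint of the 70–80% band, while the 0.8 "sustained" fraction and all sample values are assumptions.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (e.g. pct=95 for p95)."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def needs_scaling(peak_utilization: Sequence[float],
                  threshold: float = 0.75,
                  sustained_fraction: float = 0.8) -> bool:
    """True when at least `sustained_fraction` of peak-window samples
    are at or above `threshold` (both defaults are assumptions)."""
    if not peak_utilization:
        raise ValueError("peak_utilization must not be empty")
    above = sum(1 for u in peak_utilization if u >= threshold)
    return above / len(peak_utilization) >= sustained_fraction


# Hypothetical samples: GPU utilization (0..1) and request latency (ms).
gpu_peak = [0.82, 0.79, 0.91, 0.77, 0.60]
latency_ms = [120, 95, 110, 300, 105]

print("plan scale-up/out:", needs_scaling(gpu_peak))
print("p95 latency (ms):", percentile(latency_ms, 95))
```

In practice the inputs would come from your metrics store, and the same check can run per resource (GPU, NVMe I/O, NIC) so that the first bottleneck drives the scaling decision.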

Sizing for Training, Inference, and Pipelines

- Training nodes: Favor EPYC with abundant RAM and NVMe scratch; attach GPUs sized to the model (VRAM ≥ parameter + activation footprint). Use 25–100 Gbps networking to speed checkpoint sync and multi‑node all‑reduce.

- Latency‑critical inference: Ryzen or EPYC with strong single‑thread perf and ample L3 cache is ideal. If your model is particularly large, then you can cut tail latencies with a single or dual GPU. RTT can also be minimized by keeping all critical services near the IX.br fabric in São Paulo.

- Real-time data pipelines: Handle Kafka/Fluentd ingestion with NVMe + >10 Gbps NICs and co‑locate feature stores with inference when the data is sensitive or high‑velocity.

- Hybrid edge caching: Keep model execution on the São Paulo node while pushing static assets and pre/post‑processing artifacts to the edge through a CDN with PoPs in South America, such as Melbicom’s.
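The VRAM rule of thumb in the training bullet above can be checked with a few lines of arithmetic. This is a minimal sketch of that rule only; the model size, precision, activation budget, and GPU capacities are hypothetical. Training typically also needs memory for gradients and optimizer state, so treat the result as a floor rather than a full budget.

```python
GIB = 1024 ** 3  # bytes per gibibyte


def required_vram_bytes(param_count: int,
                        bytes_per_param: int,
                        activation_bytes: int) -> int:
    """Parameter footprint plus activation footprint, in bytes."""
    return param_count * bytes_per_param + activation_bytes


def fits_in_vram(param_count: int, bytes_per_param: int,
                 activation_bytes: int, vram_bytes: int) -> bool:
    """Apply the rule VRAM >= parameter + activation footprint."""
    needed = required_vram_bytes(param_count, bytes_per_param, activation_bytes)
    return needed <= vram_bytes


# Hypothetical example: 7B parameters in 16-bit precision, 4 GiB activations.
params, precision_bytes, activations = 7_000_000_000, 2, 4 * GIB
needed_gib = required_vram_bytes(params, precision_bytes, activations) / GIB
print(f"needed: {needed_gib:.1f} GiB")
for vram_gib in (16, 24):
    ok = fits_in_vram(params, precision_bytes, activations, vram_gib * GIB)
    print(f"{vram_gib} GiB GPU fits: {ok}")
```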

Future‑Proof Deployment with Melbicom’s Global Footprint

A locally based AI platform will eventually branch out globally, so why not prime ahead of time for ease when you are ready to do so? Melbicom has 21 global Tier III/IV DCs and a CDN that encompasses 55+ PoPs to enable symmetric patterns abroad, allowing you to replicate a trained model to Europe/Asia while simultaneously retaining Brazil‑resident training data. We can provide up to 200 Gbps per server, ideal for multi‑region checkpointing and dataset syncs. We have large in‑stock server pools and offer 24/7 support.

How Bare Metal in Brazil Addresses AI Workload Challenges

| AI/ML challenge | How São Paulo’s bare metal helps |
|---|---|
| High compute demand, such as deep nets and large optimizers | EPYC/Ryzen with optional GPUs deliver exclusive, non‑shared compute tailored to training and high‑QPS inference. |
| Massive I/O, for example, streaming features, and checkpoints | Local NVMe + high‑bandwidth NICs (with options up to 200 Gbps in selected sites) keep pipelines flowing without cross‑border backhaul. |
| Low latency/user proximity | Direct peering at IX.br São Paulo shortens paths to Brazilian ISPs/content networks; deterministic RTT results in better UX. |
| Data privacy/LGPD | Processing/storage on dedicated hardware kept in-country helps simplify compliance and reduces the risk of LGPD fines. |
| Rapid demand growth | Planning your capacity against compute trends (~6‑month doubling) plus modular scale‑up/out prevents bottlenecks. |

Deploying Bare Metal in Brazil: Next Steps

Melbicom is gearing up its São Paulo data center presence and can support your launch in the near future while delivering advantages that generic providers often don’t: we operate our own network end‑to‑end; we deliver high‑bandwidth options globally; we build custom hardware configurations; and we run as an international remote company designed for speed and flexibility. The result is infrastructure freedom across four dimensions: deployment (place anything, anywhere, at any scale), configuration (customize hardware and network to your stack), operations (no vendor lock‑in or shared tenancy), and experience (simple onboarding with transparent control). Share your traffic volumes, latency SLOs, data‑residency needs, GPU/CPU requirements, and peering preferences; we’ll convert them into a precise, reservation‑backed deployment plan for São Paulo.

Be the first to host in Brazil on special terms

We at Melbicom will help you deploy, customize, and scale infrastructure freely, from AMD EPYC/Ryzen bare metal with optional GPUs to high‑capacity network interfaces and 55+ CDN PoPs you can use immediately. Tell us your volumes, targets, and exact specs so we can shape a tailored early offer and reserve capacity for your launch.