
Speed and Collaboration in Modern DevOps Hosting

Modern software teams live or die by how quickly and safely they can push code to production. High-performing DevOps organizations now deploy on demand—hundreds of times per week—while laggards still fight monthly release trains.

To close that gap, engineering leaders are refactoring not only code but infrastructure: they are wiring continuous-integration pipelines to dedicated hardware, cloning on-demand test clusters, and orchestrating microservices across hybrid clouds. The mission is simple: erase every delay between “commit” and “customer.”

Choose Melbicom: 1,300+ ready-to-go servers, 21 global Tier IV & III data centers, 55+ PoP CDN across 6 continents.

Below is a data-driven look at the four tactics that matter most—CI/CD, self-service environments, container orchestration, and integrated collaboration—followed by a reality check on debugging speed in dedicated-versus-serverless sandboxes and a brief case for why single-tenant servers remain the backbone of fast pipelines.

CI/CD: The Release Engine

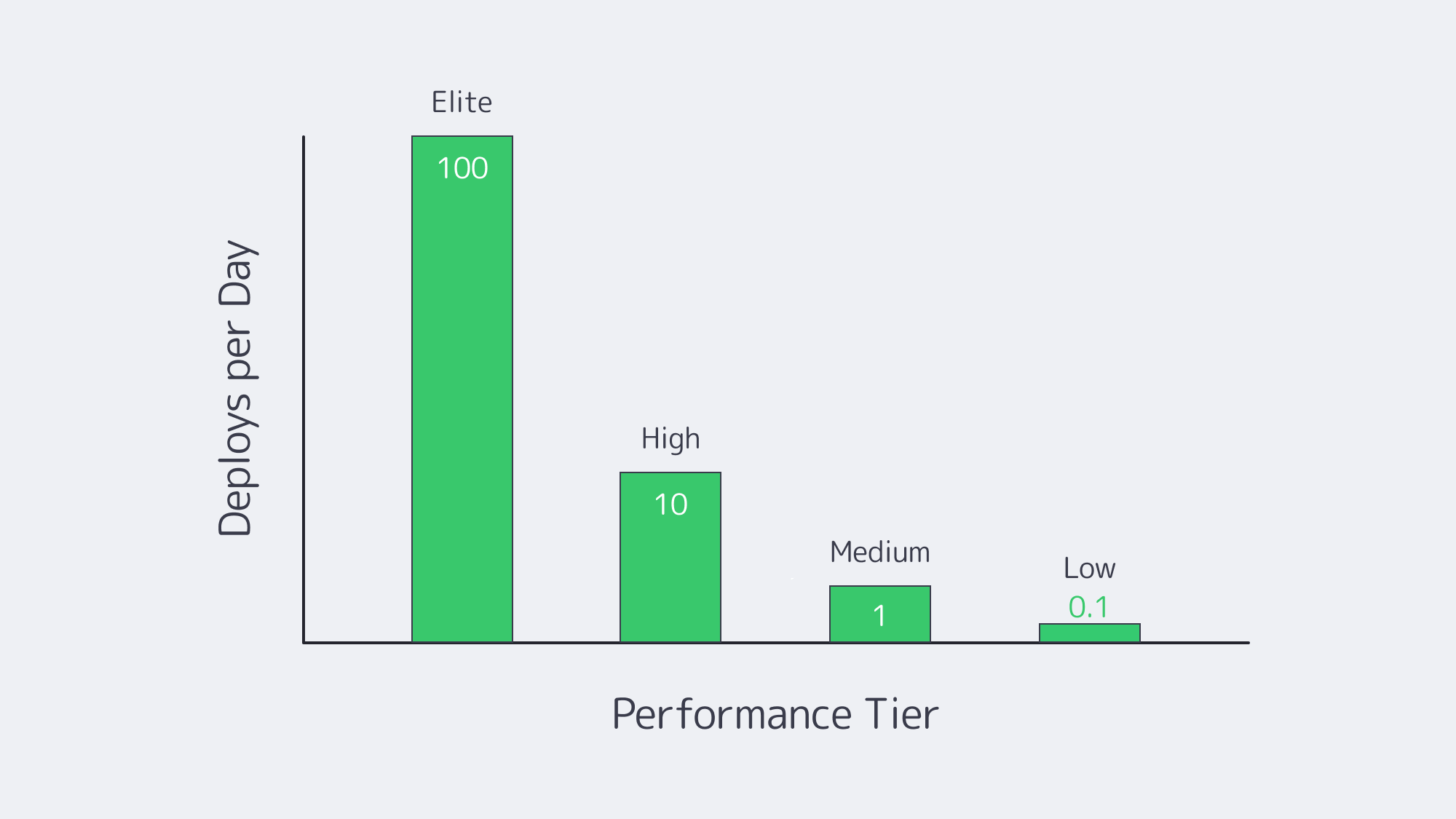

Continuous integration and continuous deployment have become table stakes: 83 % of developers now touch CI/CD in their daily work.[1] Yet only elite teams convert that tooling into true speed, delivering 973 × more frequent releases and recovering from incidents roughly three orders of magnitude faster than low performers.

Key accelerators

| CI/CD Capability | Impact on Code-to-Prod | Typical Tech |
|---|---|---|
| Parallelized test runners | Cuts build times from 20 min → sub-5 min | GitHub Actions, GitLab, Jenkins on auto-scaling runners |
| Declarative pipelines as code | Enables one-click rollback and reproducible builds | YAML-based workflows in main repo |
| Automated canary promotion | Reduces blast radius; unlocks multiple prod pushes per day | Spinnaker, Argo Rollouts |

Many organizations still host runners on shared SaaS tiers that queue for minutes. Moving those agents onto dedicated machines—especially where license-weighted tests or large artifacts are involved—removes noisy-neighbor waits and pushes throughput to line-rate disk and network. Melbicom provisions new dedicated servers in under two hours and sustains up to 200 Gbps per machine, allowing teams to run GPU builds, security scans, and artifact replication without throttling.
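
To make the "parallelized test runners" row concrete, here is a minimal sketch of one common way to split a suite across runners: sort test files by recorded duration and always hand the next one to the least-loaded runner. The file names and timings are hypothetical, and real CI systems usually ship their own sharding features, so treat this as an illustration rather than any vendor's implementation.

```python
import heapq


def shard_tests(durations, runners):
    """Greedy longest-first split of test files across a number of runners."""
    if runners < 1:
        raise ValueError("need at least one runner")
    # Min-heap of (seconds_assigned_so_far, runner_index).
    heap = [(0.0, i) for i in range(runners)]
    heapq.heapify(heap)
    shards = [[] for _ in range(runners)]
    # Longest tests first; ties broken by name so the result is deterministic.
    for name, seconds in sorted(durations.items(), key=lambda kv: (-kv[1], kv[0])):
        total, idx = heapq.heappop(heap)  # least-loaded runner
        shards[idx].append(name)
        heapq.heappush(heap, (total + seconds, idx))
    return shards


def wall_clock(shards, durations):
    """Build time is set by the slowest shard."""
    return max((sum(durations[t] for t in shard) for shard in shards), default=0)


# Hypothetical timings in seconds; 1,200 s (20 min) if run serially.
DURATIONS = {
    "test_checkout.py": 270, "test_search.py": 240, "test_auth.py": 210,
    "test_billing.py": 180, "test_profile.py": 120, "test_cart.py": 90,
    "test_email.py": 60, "test_health.py": 30,
}

if __name__ == "__main__":
    shards = shard_tests(DURATIONS, runners=4)
    print("serial seconds:", sum(DURATIONS.values()))
    print("parallel seconds:", wall_clock(shards, DURATIONS))
```

With these made-up numbers, four runners bring the 20-minute serial run down to five minutes of wall-clock time. The gain only holds if runners start immediately, which is where queue-free dedicated agents matter.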

Self-Service Environments: Instant Sandboxes

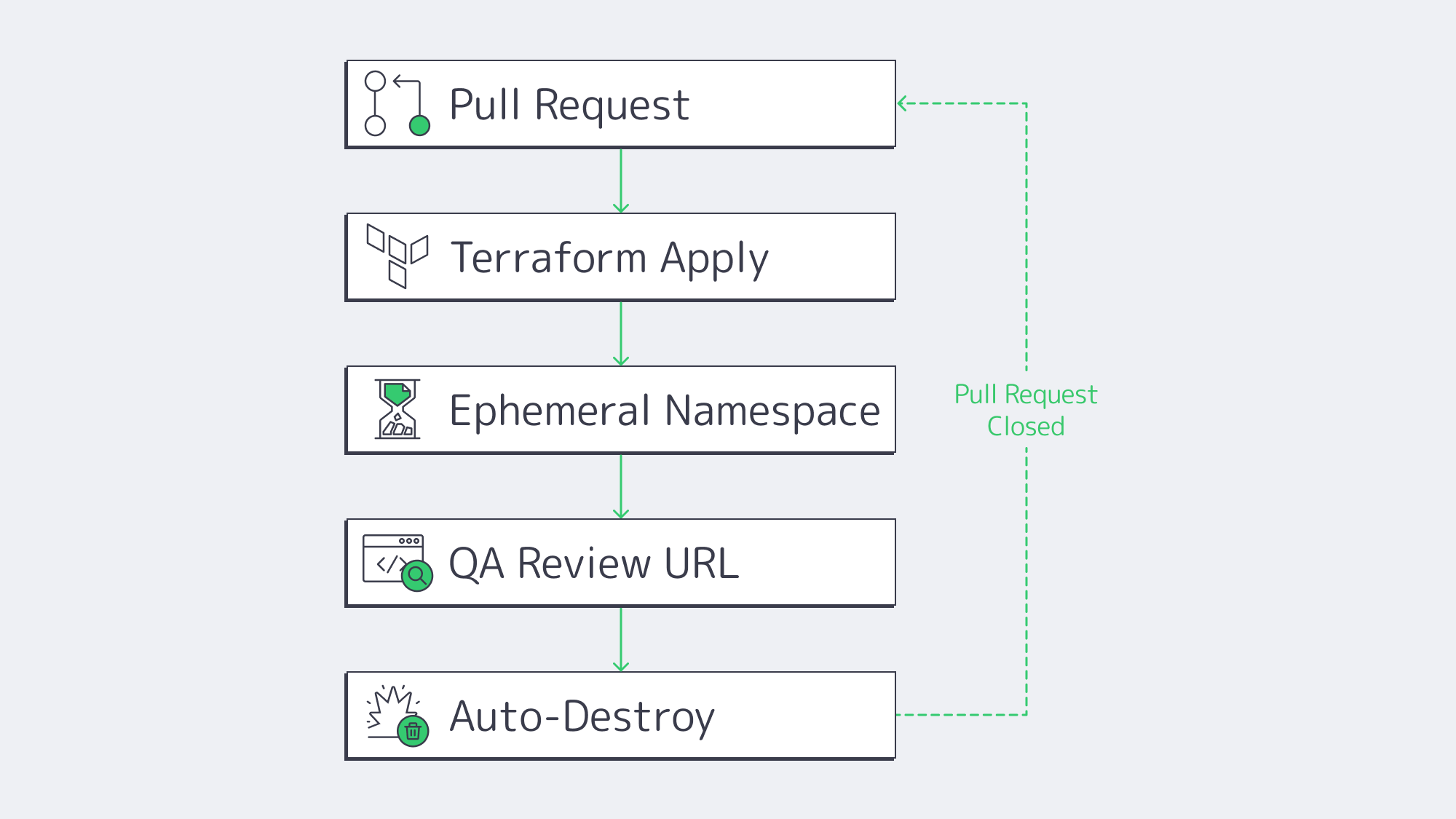

Even the slickest pipeline stalls when engineers wait days for a staging slot. Platform-engineering surveys show 68 % of teams reclaimed at least 30 % of developer time after rolling out self-service environment portals. The winning pattern is “ephemeral previews”:

- Developer opens a pull request.

- Pipeline reads a Terraform or Helm template.

- A full stack—API, DB, cache, auth—spins up on a disposable namespace.

- Stakeholders click a unique URL to review.

- Environment auto-destroys on merge or timeout.

Because every preview matches production configs byte-for-byte, integration bugs surface early, and parallel feature branches never collide. Cost overruns are mitigated by built-in TTL policies and scheduled shutdowns. Running these ephemerals on a Kubernetes cluster of dedicated servers keeps cold-start latency near zero while still letting the platform burst into a public cloud node pool when concurrency spikes.
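
The "auto-destroys on merge or timeout" rule can be sketched as a small reaper function that a scheduled job might call. The `Preview` record, the namespace names, and the 48-hour TTL are all assumptions made for illustration; an actual platform would read this state from its Git host and cluster API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Preview:
    """Hypothetical record describing one ephemeral preview environment."""
    namespace: str
    created_at: datetime
    pr_merged: bool


def expired(previews, now, ttl=timedelta(hours=48)):
    """Return namespaces to tear down: PR merged, or older than the TTL."""
    return [
        p.namespace
        for p in previews
        if p.pr_merged or now - p.created_at >= ttl
    ]


if __name__ == "__main__":
    now = datetime(2024, 1, 3, 12, 0, tzinfo=timezone.utc)
    previews = [
        Preview("preview-pr-101", datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc), False),
        Preview("preview-pr-102", datetime(2024, 1, 3, 9, 0, tzinfo=timezone.utc), True),
        Preview("preview-pr-103", datetime(2024, 1, 3, 9, 0, tzinfo=timezone.utc), False),
    ]
    for namespace in expired(previews, now):
        print("would delete", namespace)
```

Keeping the decision a pure function of state and clock makes the TTL policy easy to unit-test before it is wired to anything that deletes real namespaces.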

Container Orchestration: Uniform Deployment at Scale

Containers and Kubernetes long ago graduated from buzzword to backbone—84 % of enterprises already evaluate K8s in at least one environment, and two-thirds run it in more than one.[2] For developers, the pay-off is uniformity: the same image, health checks, and rollout rules apply on a laptop, in CI, or across ten data centers.

Why it compresses timelines:

- Environmental parity. “Works on my machine” disappears when the machine is defined as an immutable image.

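- Rolling updates and rollbacks. Kubernetes rolls out new versions behind readiness probes and stops replacing healthy pods when the new ones never become ready. Reverting is a single `kubectl rollout undo`, or automatic when a progressive-delivery controller such as Argo Rollouts is in place, letting teams ship multiple times per day with tiny blast radii.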

- Horizontal pod autoscaling. When traffic spikes at 3 a.m., the control plane—not humans—adds replicas, so on-call engineers write code instead of resizing.
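
The autoscaling bullet above rests on a simple ratio that the Kubernetes documentation gives for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The sketch below reproduces only that core calculation, with a 10 % tolerance band and min/max clamping; the real controller also handles stabilization windows, unready pods, and multiple metrics, which are deliberately left out here. The function name and default bounds are illustrative.

```python
import math


def desired_replicas(current, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA calculation: scale in proportion to metric / target."""
    if current < 1 or target_metric <= 0:
        raise ValueError("current must be >= 1 and target_metric > 0")
    ratio = current_metric / target_metric
    # Inside the tolerance band the autoscaler leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current
    wanted = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 pods averaging 90 % CPU against a 60 % target.
    print(desired_replicas(4, 90, 60))
    # 6 pods averaging 20 % CPU against the same target.
    print(desired_replicas(6, 20, 60))
```

In the first call the ratio is 1.5, so four pods become six; in the second the load has dropped to a third of the target, so six pods shrink to two.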

Yet orchestration itself can introduce overhead. Surveys find three-quarters of teams wrestle with K8s complexity. One antidote is bare-metal clusters: eliminating the virtualization layer simplifies network paths and improves pod density. Melbicom racks server fleets with single-tenant IPMI access so platform teams can flash the exact kernel, CNI plugin, or NIC driver they need—no waiting on a cloud hypervisor upgrade.

Integrated Collaboration: Tooling Meets DevOps Culture

Speed is half technology, half coordination. High-velocity teams converge on integrated work surfaces:

- Repo-centric discussions. Whether on GitHub or GitLab, code, comments, pipeline status, and ticket links live in one URL.

- ChatOps. Deployments, alerts, and feature flags pipe into Slack or Teams so developers, SREs, and PMs troubleshoot in one thread.

- Shared observability. Engineers reading the same Grafana dashboard as Ops spot regressions before customers do. Post-incident reports feed directly into the backlog.

The DORA study correlates strong documentation and blameless culture with a 30 % jump in delivery performance.[3] Toolchains reinforce that culture: if every infra change flows through a pull request, approval workflows become social, discoverable, and searchable.

Debugging at Speed: Dedicated vs. Serverless

Nothing reveals infrastructure trade-offs faster than a 3 a.m. outage. When time-to-root-cause matters, always-on dedicated environments beat serverless sandboxes in three ways:

| Criteria | Dedicated Servers (or VMs) | Serverless Functions |
|---|---|---|
| Live debugging & SSH | Full access; attach profilers, trace syscalls | Not supported; rely on delayed logs |
| Cold-start latency | Warm; sub-10 ms connection | 100 – 500 ms per wake-up |
| Execution limits | None beyond hardware | Hard caps (e.g., 15 min, 10 GB RAM) |

Serverless excels at elastic front-end API scaling, but its abstraction hides the OS, complicates heap inspection, and enforces strict runtime ceilings. The pragmatic pattern is therefore hybrid:

- Run stateless request handlers as serverless for cost elasticity.

- Mirror critical or stateful components on a dedicated staging cluster for step-through debugging, load replays, and chaos drills.

Developers reproduce flaky edge cases in the persistent cluster, patch fast, then redeploy to both realms with the same container image. This split retains serverless economics and dedicated debuggability.

Hybrid Dedicated Backbone: Why Hardware Still Matters

With public cloud spend surpassing $300 billion, it’s tempting to assume there’s no need for other types of solutions. Yet Gartner forecasts that 90% of enterprises will operate hybrid estates by 2027.[4] Reasons include:

- Predictable performance. No noisy neighbors means latency and throughput figures stay flat, which is critical when a 100 ms delay can cut e-commerce revenue by 1 %.

- Cost-efficient base load. Steady 24 × 7 traffic costs less on fixed-price servers than on VMs billed per second.

- Data sovereignty & compliance. Finance, health, and government workloads often must reside in certified single-tenant cages.

- Customization. Teams can install low-level profilers, tune kernel modules, or deploy specialized GPUs without waiting for cloud SKUs.
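
The base-load argument in the list above comes down to a break-even calculation. The sketch below uses entirely hypothetical prices (a $300/month server and a $0.50/hour VM of comparable size) to show the arithmetic; substitute real quotes before drawing conclusions.

```python
HOURS_PER_MONTH = 730  # average month: 8,760 hours / 12


def vm_cost(hourly_rate, busy_hours):
    """Metered cost of a VM that is only billed while running."""
    return hourly_rate * busy_hours


def break_even_hours(server_monthly_price, vm_hourly_rate):
    """Monthly hours of use above which the fixed-price server is cheaper."""
    if vm_hourly_rate <= 0:
        raise ValueError("vm_hourly_rate must be positive")
    return server_monthly_price / vm_hourly_rate


if __name__ == "__main__":
    server_price = 300.0  # hypothetical fixed monthly price, USD
    vm_rate = 0.50        # hypothetical on-demand rate, USD per hour

    hours = break_even_hours(server_price, vm_rate)
    print(f"break-even at {hours:.0f} h/month "
          f"({hours / HOURS_PER_MONTH:.0%} utilization)")
    print(f"24x7 on the VM: ${vm_cost(vm_rate, HOURS_PER_MONTH):.2f} "
          f"vs ${server_price:.2f} fixed")
```

Under these assumed prices the VM wins below about 600 hours of use per month and the dedicated server wins above it, which is why always-on workloads tend to sit on fixed-price hardware while bursty ones stay metered.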

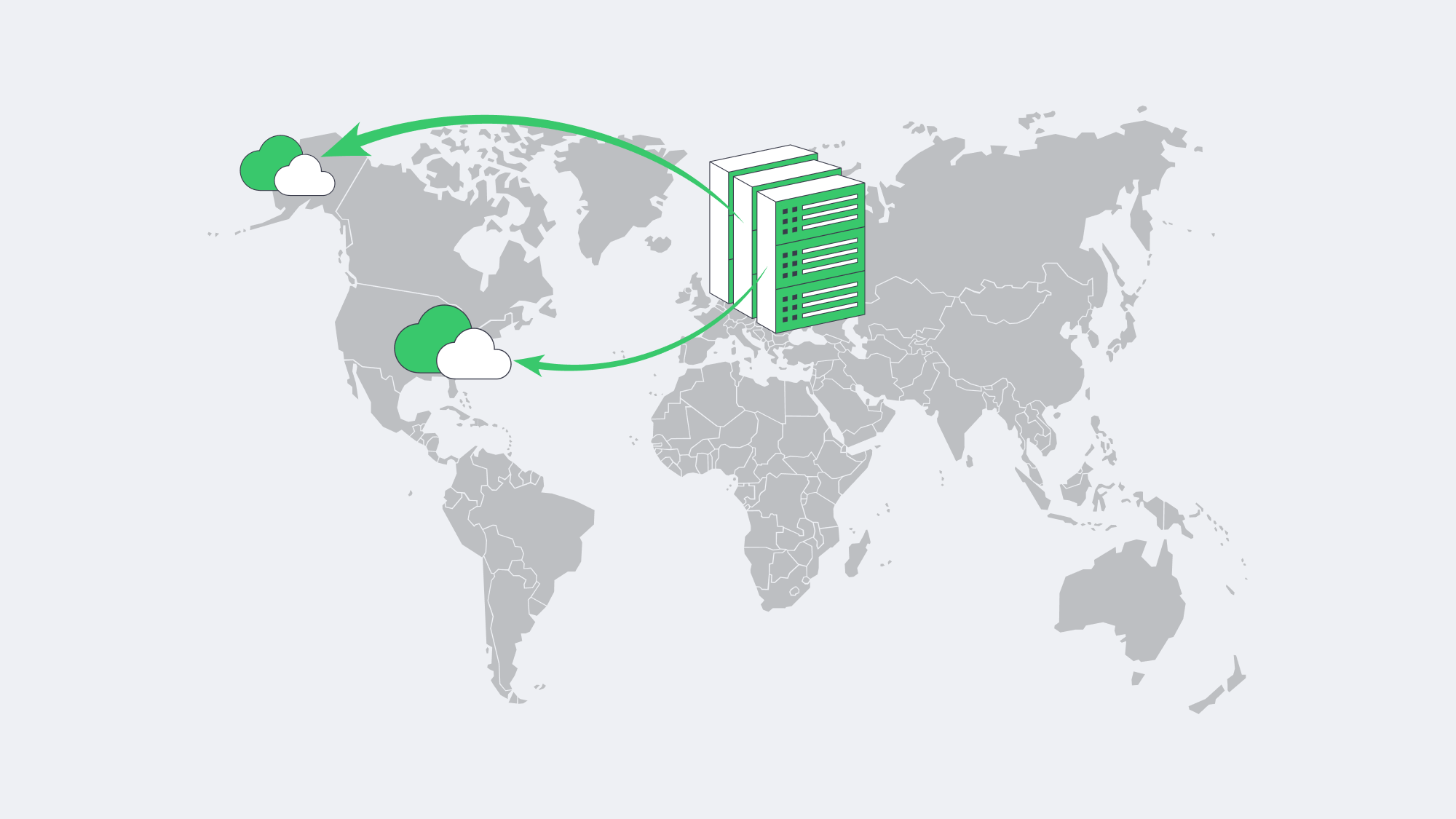

Melbicom underpins this model with 1,300+ ready configurations across 21 Tier III/IV locations. Teams can anchor their stateful databases in Frankfurt, spin up GPU runners in Amsterdam, burst front-end replicas into US South, and push static assets through a CDN with 55+ points of presence. Bandwidth scales up to 200 Gbps per server, eliminating throttles during container pulls or artifact replication. Crucially, servers come online in under two hours, not the multi-week procurement cycles of old.

Hosting That Moves at Developer Speed

Fast, collaborative releases hinge on eliminating every wait state between keyboard and customer. Continuous-integration pipelines slash merge-to-build times; self-service previews wipe out ticket queues; Kubernetes enforces identical deployments; and integrated toolchains keep everyone staring at the same truth. Yet the physical substrate still matters. Dedicated servers—linked through hybrid clouds—sustain predictable performance, deeper debugging, and long-term cost control, while public services add elasticity at the edge. Teams that stitch these layers together ship faster and sleep better.

Deploy Infrastructure in Minutes

Order high-performance dedicated servers across 21 global data centers and get online in under two hours.