Database Hosting Services for Low Latency, High Uptime & Lower TCO

Modern database hosting services span far more than the racks that once anchored corporate IT. Today, decision-makers juggle dedicated servers, multi-cloud Database-as-a-Service (DBaaS) platforms, and containerized deployments—each promising performance, scale, and savings. The goal of this guide is simple: to show how to pick the right landing zone for your data by weighing five hard metrics—latency, uptime guarantees, compliance, elastic scaling, and total cost of ownership (TCO). Secondary considerations (feature sets, corporate politics, tooling preferences) matter, but they rarely outrank these fundamentals.

Choose Melbicom: 1,300+ ready-to-go servers, 21 global Tier IV & III data centers, 55+ PoP CDN across 6 continents.

Which Database Hosting Services Are Available—and How Do They Differ?

Before public clouds, running a database meant buying hardware, carving out space in an on-prem data center, and hiring specialists to keep disks spinning. That model still lives in regulated niches, yet most organizations now treat on-prem data centers as legacy—valuable history, not future direction. The pressures shaping current choices are sovereignty rules that restrict cross-border data flow, hybrid resiliency targets that demand workloads survive regional failures, and a CFO-driven insistence on transparent, forecastable economics.

Three modern options dominate

| Model | Core Idea | Prime Strength |
|---|---|---|
| Dedicated server | Single-tenant physical host rented or owned | Predictable performance & cost |
| Cloud DBaaS | Provider-managed database instance | Rapid deployment & elastic scale |
| Container platform | Database in Kubernetes or similar | Portability across any infrastructure |

Each model can power SQL database hosting, MySQL database clusters for web hosting, or high-volume PostgreSQL database hosting at scale; the difference lies in how they balance the five decision metrics that follow.

How Does Latency Affect Database Hosting—and How Do You Reduce It?

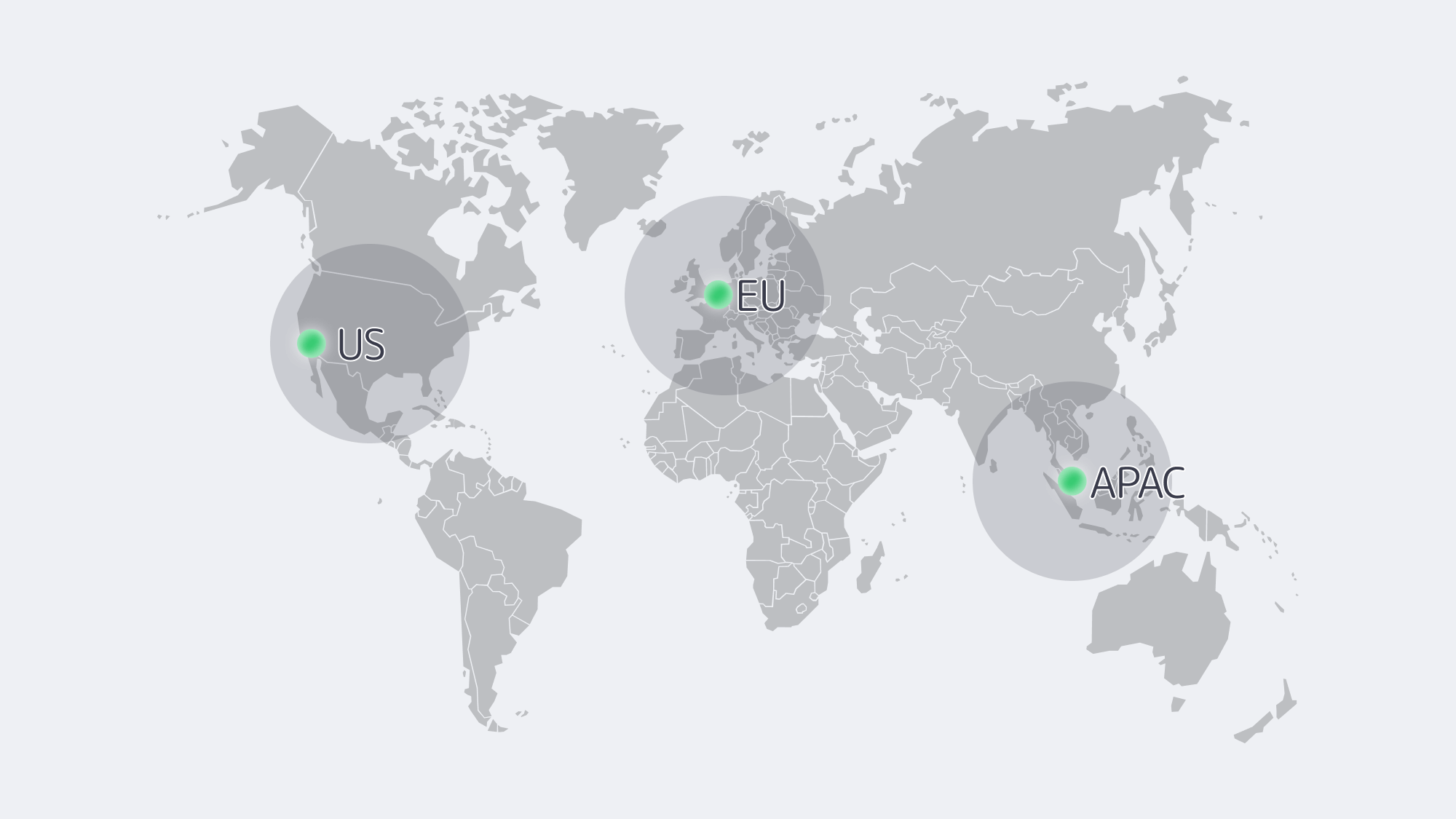

Every extra millisecond between application and datastore chips away at user experience. Dedicated servers let teams drop hardware into specific metros (Amsterdam for pan-EU workloads, Los Angeles for West Coast startups), bringing median round-trip times under 5 ms within region and avoiding multi-tenant jitter.

DBaaS instances are just as quick when compute lives in the same cloud zone, but hybrid topologies suffer: shipping queries from an on-prem app stack to a cloud DB 2,000 km away adds 40–70 ms and invites egress fees. Container clusters mirror their substrate: run Kubernetes on bare metal in a regional facility and you match dedicated latencies; run it on VMs across zones and you inherit cloud hop counts.

For global audiences, no single host sits near everyone. The low-latency playbook is therefore:

- Pin read-heavy replicas close to users. Cloud DBaaS makes this almost one-click; dedicated nodes can achieve the same with streaming replication.

- Keep write primaries near business logic. Short paths cut the round-trip cost of chatty OLTP workloads, where a single transaction may issue many small queries.

- Avoid forced detours. Private links or anycast routing outperform public-internet hops.
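The first point in the playbook, routing reads to the closest replica, can be sketched in a few lines. This is a minimal illustration rather than a production router: the replica names and round-trip samples below are hypothetical, and a real deployment would collect them from its own probes.

```python
from statistics import median


def pick_nearest_replica(rtt_samples_ms: dict[str, list[float]]) -> str:
    """Return the replica whose median round-trip time is lowest.

    The median is used instead of the mean so that one slow probe
    (a jitter spike) does not disqualify an otherwise close replica.
    """
    medians = {
        name: median(samples)
        for name, samples in rtt_samples_ms.items()
        if samples  # skip replicas with no measurements yet
    }
    if not medians:
        raise ValueError("no round-trip samples available")
    return min(medians, key=medians.get)


# Hypothetical probe results (milliseconds) seen from an app server in Europe.
samples = {
    "amsterdam-replica": [6.1, 5.8, 7.4, 6.0],
    "los-angeles-replica": [148.2, 151.0, 149.5],
    "singapore-replica": [162.3, 170.1, 165.8],
}
nearest = pick_nearest_replica(samples)
print(nearest)
```

Writes would still go to the primary; only read traffic is steered this way.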

Melbicom pairs twenty Tier III–IV facilities with 200 Gbps server uplinks, so we can land data exactly where users live—without virtualization overhead or surprise throttling.

Is Your Database Hosting Highly Available—and What Should You Verify?

Dedicated servers inherit their facility rating: Tier IV targets roughly 26 minutes of annual infrastructure downtime, Tier III about 1.6 hours. Hardware still fails, so true availability hinges on software redundancy: multi-node clusters or synchronous replicas in a second rack. Melbicom mitigates risk by swapping failed components inside 4 hours and maintaining redundant upstream carriers; fail-over logic, however, remains under your control.
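To see where the downtime figures come from, an availability percentage can be converted to minutes per year. The sketch below assumes the commonly cited design targets of 99.995 % for Tier IV and 99.982 % for Tier III; the second helper assumes node failures are independent, which real clusters only approximate.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; leap years ignored


def annual_downtime_minutes(availability_pct: float) -> float:
    """Convert an availability percentage into expected downtime per year."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be between 0 and 100")
    return (1 - availability_pct / 100) * MINUTES_PER_YEAR


def redundant_availability_pct(node_pct: float, nodes: int) -> float:
    """Availability of `nodes` redundant copies when any single one suffices.

    Assumes failures are independent, which is optimistic in practice.
    """
    if nodes < 1:
        raise ValueError("need at least one node")
    return (1 - (1 - node_pct / 100) ** nodes) * 100


tier_iv = annual_downtime_minutes(99.995)
tier_iii = annual_downtime_minutes(99.982)
print(f"Tier IV:  {tier_iv:.1f} min/year")
print(f"Tier III: {tier_iii:.1f} min/year ({tier_iii / 60:.1f} h)")
```

Under these assumptions Tier IV works out to about 26.3 minutes and Tier III to about 94.6 minutes a year. The same arithmetic shows why a second replica matters: two independent nodes at 99 % each combine to roughly 99.99 %.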

Cloud DBaaS automates much of that logic. Enable multi-zone mode and providers target 99.95 % or better availability; failovers typically finish in under 60 s. The price is a small performance tax and dependence on platform tooling. Region-wide outages are rare yet headline-making; multi-region replication cuts risk but can roughly double cost.

Container databases ride Kubernetes self-healing. StatefulSets restart crashed pods, Operators promote replicas, and a cluster spread across two sites can deliver four-nines reliability—provided storage back ends replicate fast enough. You’re the SRE on call, so monitoring and rehearsed run-books are mandatory.

Rule of thumb: platform automation reduces human-error downtime, but the farther uptime is outsourced, the less tuning freedom you retain.

Compliance & Data Sovereignty: Control vs Convenience

- Dedicated servers grant the clearest answers. Choose a country, deploy, and keep encryption keys offline; auditors see the whole chain. European firms favor single-tenant hosts in EU territory to sidestep cross-border risk, while U.S. healthcare providers leverage HIPAA-aligned cages.

- DBaaS vendors brandish long lists of ISO, SOC 2, PCI, and HIPAA attestations, yet true sovereignty is fuzzier. Metadata or backups may leave region, and foreign-owned providers remain subject to their home-state disclosure laws. Customer-managed keys, private endpoints, and “sovereign-cloud” variants ease some worries but add cost and sometimes feature gaps.

- Containers let teams codify policy. Restrict nodes by label, enforce network policies, and pin PostgreSQL clusters to EU nodes while U.S. analytics pods run elsewhere. The trade-off is operational complexity: securing the control plane, supply-chain-scanning images, and documenting enforcement for auditors.
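The node-pinning idea from the container bullet can be expressed as a Kubernetes node affinity rule. The sketch below builds that stanza as a Python dictionary and prints it as JSON. It relies on the well-known `topology.kubernetes.io/region` node label; the region values are hypothetical and depend on how your nodes are labeled.

```python
import json


def region_pinned_affinity(regions: list[str]) -> dict:
    """Build an affinity stanza that only schedules pods onto the given regions."""
    if not regions:
        raise ValueError("at least one region is required")
    return {
        "nodeAffinity": {
            # Hard requirement: the scheduler refuses nodes that do not match.
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [
                    {
                        "matchExpressions": [
                            {
                                "key": "topology.kubernetes.io/region",
                                "operator": "In",
                                "values": list(regions),
                            }
                        ]
                    }
                ]
            }
        }
    }


# Hypothetical region names; substitute the labels your cluster actually uses.
pod_spec_fragment = {"affinity": region_pinned_affinity(["eu-west", "eu-central"])}
print(json.dumps(pod_spec_fragment, indent=2))
```

The fragment would sit under the pod template's `spec` in a StatefulSet. Affinity alone only controls scheduling, so network policies and backup locations still need their own rules.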

When sovereignty trumps all, single-tenant hardware in an in-country facility still rules. That is why we at Melbicom maintain European data centers and make node-level placement an API call.

How Do Database Hosting Services Scale—Vertical, Horizontal, or Serverless?

Cloud DBaaS sets the benchmark: resize a MySQL instance from 4 vCPUs to 16 vCPUs in minutes, add replicas with one API call, or let a serverless tier spike 20× during a flash sale. It’s difficult to match that zero-touch elasticity elsewhere.

Dedicated servers scale vertically by swapping CPUs or moving the database to a bigger box and horizontally by adding shard or replica nodes. Melbicom keeps > 1,000 configurations on standby, so extra capacity appears within hours, not weeks, but hour-scale provisioning cannot match second-scale serverless bursts. For steady workloads such as ERP, catalog, or gaming back-ends, predictable monthly capacity often beats pay-per-peak surprises.

Container platforms mimic cloud elasticity inside your footprint. Kubernetes autoscalers launch new database pods or add worker nodes when CPU thresholds trip, provided spare hardware exists or an underlying IaaS can supply it. Distributed SQL engines (CockroachDB, Yugabyte) scale almost linearly; classic Postgres will still bottleneck on a single writer. Good operators abstract most of the ceremony, but hot data redistribution still takes time and IO.
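The threshold behavior described above follows the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired count is the current count scaled by the ratio of observed to target metric, rounded up. The sketch below reproduces only that core formula with min/max bounds; the real controller also applies a tolerance band and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Core HPA-style calculation: scale replicas by metric ratio, then clamp."""
    if target_metric <= 0:
        raise ValueError("target metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Three read replicas averaging 90 % CPU against a 60 % target.
scaled = desired_replicas(current_replicas=3, current_metric=90, target_metric=60)
print(scaled)
```

For a single-writer engine such as classic Postgres, the extra pods only help as read replicas; write throughput still depends on the primary.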

In practice, teams often blend models: burst-prone workloads float on DBaaS, while core ledgers settle on right-sized dedicated clusters refreshed quarterly.

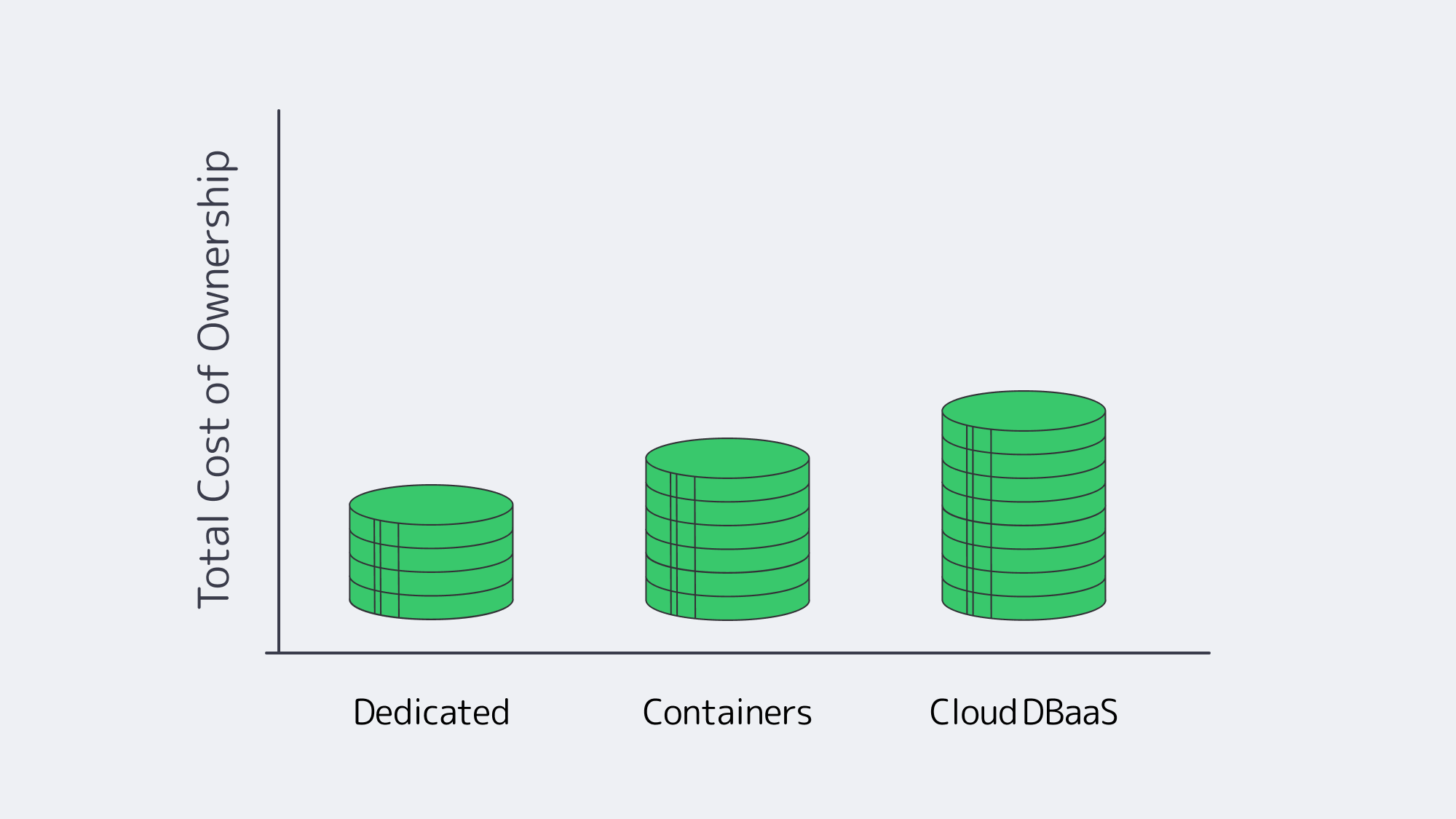

How Much Do Database Hosting Services Cost—and What Drives TCO?

Price tags without context are dangerous. Evaluate three buckets:

- Direct infrastructure spend. Cloud on-demand rates can be 2–4× the monthly rental of an equivalently specced dedicated host when utilized 24/7. Transferring 50 TB out of a cloud region can cost more than leasing a 10 Gbps unmetered port for a month.

- Labor and tooling. DBaaS bundles patching, backups, and monitoring. Dedicated or container fleets need DBAs and SREs; automation amortizes the cost, but talent retention counts.

- Financial risk. Over-provision on-prem and you eat idle capital; under-provision in cloud and burst pricing or downtime hits revenue. Strategic lock-in adds long-term exposure: repatriating 100 TB from a cloud can incur five-figure egress fees.
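The direct-spend comparison comes down to utilization. The sketch below finds the number of hours per month at which a flat-rate dedicated host becomes cheaper than an on-demand cloud instance. Both prices are hypothetical placeholders, not quotes from any provider, and labor and egress costs are left out.

```python
HOURS_PER_MONTH = 730  # average month: 8,760 hours / 12


def cloud_monthly_cost(hourly_rate: float, hours_used: float = HOURS_PER_MONTH) -> float:
    """On-demand cost for the hours an instance actually runs."""
    return hourly_rate * hours_used


def break_even_hours(dedicated_monthly: float, cloud_hourly: float) -> float:
    """Monthly hours above which the flat-rate dedicated host is cheaper."""
    if cloud_hourly <= 0:
        raise ValueError("cloud hourly rate must be positive")
    return dedicated_monthly / cloud_hourly


# Hypothetical prices for comparably specced machines.
CLOUD_HOURLY = 1.20        # USD per hour, on-demand
DEDICATED_MONTHLY = 400.0  # USD per month, flat

always_on = cloud_monthly_cost(CLOUD_HOURLY)
threshold = break_even_hours(DEDICATED_MONTHLY, CLOUD_HOURLY)
print(f"Cloud at 24/7: ${always_on:.0f}/month ({always_on / DEDICATED_MONTHLY:.2f}x dedicated)")
print(f"Break-even: {threshold:.0f} h/month ({threshold / HOURS_PER_MONTH:.0%} utilization)")
```

With these placeholder prices, an always-on cloud instance costs a little over twice the dedicated host, and the dedicated host wins once the database runs more than about 46 % of the month.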

A common pattern: startups prototype on DBaaS, stabilize growth, then migrate stable workloads to dedicated hardware to cap expenses. Dropbox famously saved $75 million over two years by exiting cloud storage; not every firm hits that scale, yet many mid-sized SaaS providers report 30–50 % savings after moving heavy databases to single-tenant hosts. Transparent economics attract finance teams; we see customers use Melbicom servers to fix monthly costs for core data while keeping elastic analytics in cloud Spot fleets.

Which Database Hosting Service Fits Your Use Case? Decision Matrix

| Criterion | Dedicated server | Cloud DBaaS | Container platform |
|---|---|---|---|
| Latency control | High (choose metro, no hypervisor) | High in-zone, variable hybrid | Mirrors substrate; tunable |
| SLA responsibility | Shared (infra provider + your cluster) | Provider-managed failover | You + Operator logic |
| Compliance sovereignty | Full control | Certifications but shared jurisdiction | High if self-hosted |
| Elastic scaling speed | Hours (new node) | Seconds–minutes (resize, serverless) | Minutes; depends on spare capacity |
| Long-term TCO (steady load) | Lowest | Highest unless reserved | Mid; gains from consolidation |

How to Choose a Database Hosting Service: A Balanced Framework

Every serious database platform evaluation should begin with hard numbers: latency targets per user region, downtime tolerance in minutes per month, regulatory clauses on data residency, scale elasticity curves, and projected three-year spend. Map those against the strengths above.

- If deterministic performance, sovereign control, and flat costs define success—bank transactions, industrial telemetry—dedicated servers excel.

- If release velocity, unpredictable bursts, or limited ops staff dominate, cloud DBaaS delivers the fastest returns, as with consumer apps and proofs of concept.

- If portability, GitOps pipelines, and multi-site resilience are priorities, container platforms offer a compelling middle road—particularly when married to dedicated nodes for predictability.
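One way to apply the framework is a weighted score across the five metrics. The sketch below is illustrative only: the 1–3 scores are a rough, assumed reading of the decision matrix, and the weights are hypothetical examples that each team would replace with its own priorities.

```python
# Assumed scores (3 = strongest), loosely derived from the decision matrix.
SCORES = {
    "dedicated": {"latency": 3, "availability": 1, "compliance": 3, "elasticity": 1, "tco": 3},
    "dbaas":     {"latency": 2, "availability": 3, "compliance": 1, "elasticity": 3, "tco": 1},
    "container": {"latency": 2, "availability": 2, "compliance": 2, "elasticity": 2, "tco": 2},
}


def rank_models(weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return hosting models sorted by weighted score, best first."""
    totals = {
        model: sum(weights.get(metric, 0.0) * score for metric, score in metrics.items())
        for model, metrics in SCORES.items()
    }
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical weightings for two contrasting workloads.
regulated_ledger = {"latency": 0.2, "availability": 0.1, "compliance": 0.3, "elasticity": 0.1, "tco": 0.3}
bursty_consumer_app = {"latency": 0.1, "availability": 0.3, "compliance": 0.05, "elasticity": 0.45, "tco": 0.1}

ledger_ranking = rank_models(regulated_ledger)
app_ranking = rank_models(bursty_consumer_app)
print(ledger_ranking[0][0], app_ranking[0][0])
```

The value is less in the final number than in forcing the weights to be written down, which makes trade-offs explicit before a platform is chosen.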

Hybrid architectures win when they let each workload live where it thrives. That philosophy underpins why enterprises increasingly mix clouds and single-tenant hardware rather than betting on one model.

Conclusion

The Path to Confident Database Hosting

Choosing a home for critical data is no longer about picking “cloud vs. on-prem.” It is about aligning latency, availability, compliance, elasticity, and cost with the realities of your application and regulatory environment—then revisiting that alignment as those realities shift. Dedicated servers, cloud DBaaS, and containerized platforms each bring distinct advantages; the smartest strategies orchestrate them together. By quantifying the five core metrics and testing workloads in realistic scenarios, teams reach decisions that hold up under growth, audits, and budget scrutiny.

Launch Your Database on Dedicated Servers

Provision a high-performance, low-latency dedicated server in hours and keep full control of your data.