
File Server Backup Solutions: Step‐by‐Step Protection

Modern data protection has evolved far beyond manual copy scripts, and the stop-gap era is over. Data estates have sprawled across petabytes, backups have become a prime target for attackers, and compliance demands are stricter than ever. Together, these pressures have changed how file server admins protect data: recovery now has to be provable.

Where techs once spent their after-hours swapping disks and praying that cron jobs had run, they now need provable safeguards: evidence that backups completed and immutable copies to restore from.

To keep budgets from spiraling and redundant copies from piling up, this guide walks through five interlocking steps that keep a file server failure or ransomware attack from becoming a crisis: classification, granular retention, automated incremental snapshots, restore testing, and anomaly-driven monitoring. Paired with Volume Shadow Copy snapshots, immutable object storage, and API-first alerting, these steps give you a tested, dependable restore process for whatever you may face.


Share Classification: How to Prioritize

Backup windows become bloated when every share is treated equally, and that is a sure path to unmet Recovery Point Objectives (RPOs). Therefore, your first step should be a data-classification sweep to categorize data into the following tiers:

- Tier 1 – Business-critical data such as finance ledgers, deal folders, and engineering release artifacts.

- Tier 2 – Important but not critical data, such as department workspaces and ongoing project roots.

- Tier 3 – Non-critical data such as archives, reference libraries, and end-of-life project dumps.

Classifying data in this manner drives everything downstream. Be sure to interview data owners, scan for regulated content (PII, PHI), and log the operational impact of losing each share. This helps with creating policies; the data protection needs of a finance ledger that changes every hour are different from those of an archive with quarterly updates.
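The sweep described above can be captured as a small rule set. The following is a minimal sketch, not a prescribed method: the `Share` fields, the thresholds, and the rule that regulated content always lands in Tier 1 are all illustrative assumptions that would come out of your own owner interviews and scans.

```python
from dataclasses import dataclass


@dataclass
class Share:
    name: str
    business_critical: bool   # owner says losing it would halt operations (assumed field)
    regulated: bool           # scan found PII/PHI (assumed field)
    changes_per_day: float    # rough change rate, e.g. from audit logs (assumed field)


def classify(share: Share) -> int:
    """Return tier 1, 2, or 3 for a share using illustrative rules."""
    if share.business_critical or share.regulated:
        return 1
    if share.changes_per_day >= 1:   # assumed cut-off for "active" data
        return 2
    return 3


shares = [
    Share("finance-ledgers", True, True, 24),
    Share("marketing-workspace", False, False, 5),
    Share("archive-2015", False, False, 0.01),
]
tiers = {s.name: classify(s) for s in shares}
print(tiers)
```

Keeping the rules in code (or config) makes the classification repeatable and gives you a record to show auditors.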

Classification also helps you demonstrate compliance. GDPR, for example, requires adequate safeguards for at-risk EU personal data, and being able to show which shares hold that data and how they are protected helps prevent fines.

Granular, Immutable Retention Policies

With the tiers clarified, you can craft retention that reflects data value and change rate. The typical “backup daily, retain 30 days” blanket rule wastes space on low-value data while still letting critical RPO targets fall through the cracks. A tiered matrix protects each class appropriately without wasting storage. Take a look at the following example:

| Tier | Backup cadence | Version retention |
|---|---|---|
| 1 | Hourly incremental snapshot and nightly full | 90 days |
| 2 | Nightly incremental snapshot and weekly full | 30 days |
| 3 | Weekly full | 14 days |
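As a rough illustration, the retention column of the example matrix can be expressed as data and used to decide when a version may age out. This is a sketch only; the `RETENTION_DAYS` mapping and the `is_expired` helper are hypothetical names, not part of any particular backup product.

```python
from datetime import datetime, timedelta

# Version retention per tier, taken from the example matrix.
RETENTION_DAYS = {1: 90, 2: 30, 3: 14}


def is_expired(tier: int, created: datetime, now: datetime) -> bool:
    """True when a backup version is older than its tier's retention window."""
    return now - created > timedelta(days=RETENTION_DAYS[tier])


now = datetime(2024, 6, 30)
print(is_expired(3, datetime(2024, 6, 1), now))  # 29 days old, Tier 3 keeps 14
print(is_expired(1, datetime(2024, 6, 1), now))  # 29 days old, Tier 1 keeps 90
```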

Non-negotiable Immutability

Worryingly, attackers target the backups directly in 97% of ransomware incidents, which makes immutability vital.[1] With WORM-locked storage, be it S3 Object Lock, SFTP-WORM appliances, or cloud cold tiers for snapshots, attempts to delete or encrypt the backups fail. One simple change takes away a ransomware gang’s leverage: keep one recent full backup of every Tier 1 dataset in an immutable bucket for 90 days, after which it ages out automatically. That way, a “pay or lose everything” threat carries far less weight.

Following the 3-2-1 pattern (three copies, on two types of media, with one kept off-site) reinforces disaster resilience. Ship nightly copies of Tier 1 data and weekly copies of Tier 2 data to storage in a different region. If a physical disaster such as a site-level fire or flood strikes, clean data matters more than latency.
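A quick way to audit the 3-2-1 pattern is to list every copy of a dataset and check the three conditions mechanically. The sketch below assumes a hypothetical `Copy` record and counts the production copy as one of the three, which is the usual reading of the rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    location: str   # e.g. "primary-dc", "remote-region" (illustrative labels)
    media: str      # e.g. "disk", "object-storage", "tape"
    offsite: bool


def satisfies_3_2_1(copies: list[Copy]) -> bool:
    """Three or more copies, two or more media types, at least one off-site."""
    media_types = {c.media for c in copies}
    return (
        len(copies) >= 3
        and len(media_types) >= 2
        and any(c.offsite for c in copies)
    )


tier1 = [
    Copy("primary-dc", "disk", False),           # production data
    Copy("primary-dc", "disk", False),           # local backup repository
    Copy("remote-region", "object-storage", True),
]
print(satisfies_3_2_1(tier1))
```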

Tools for Automating Incremental Snapshots: VSS/LVM/ZFS

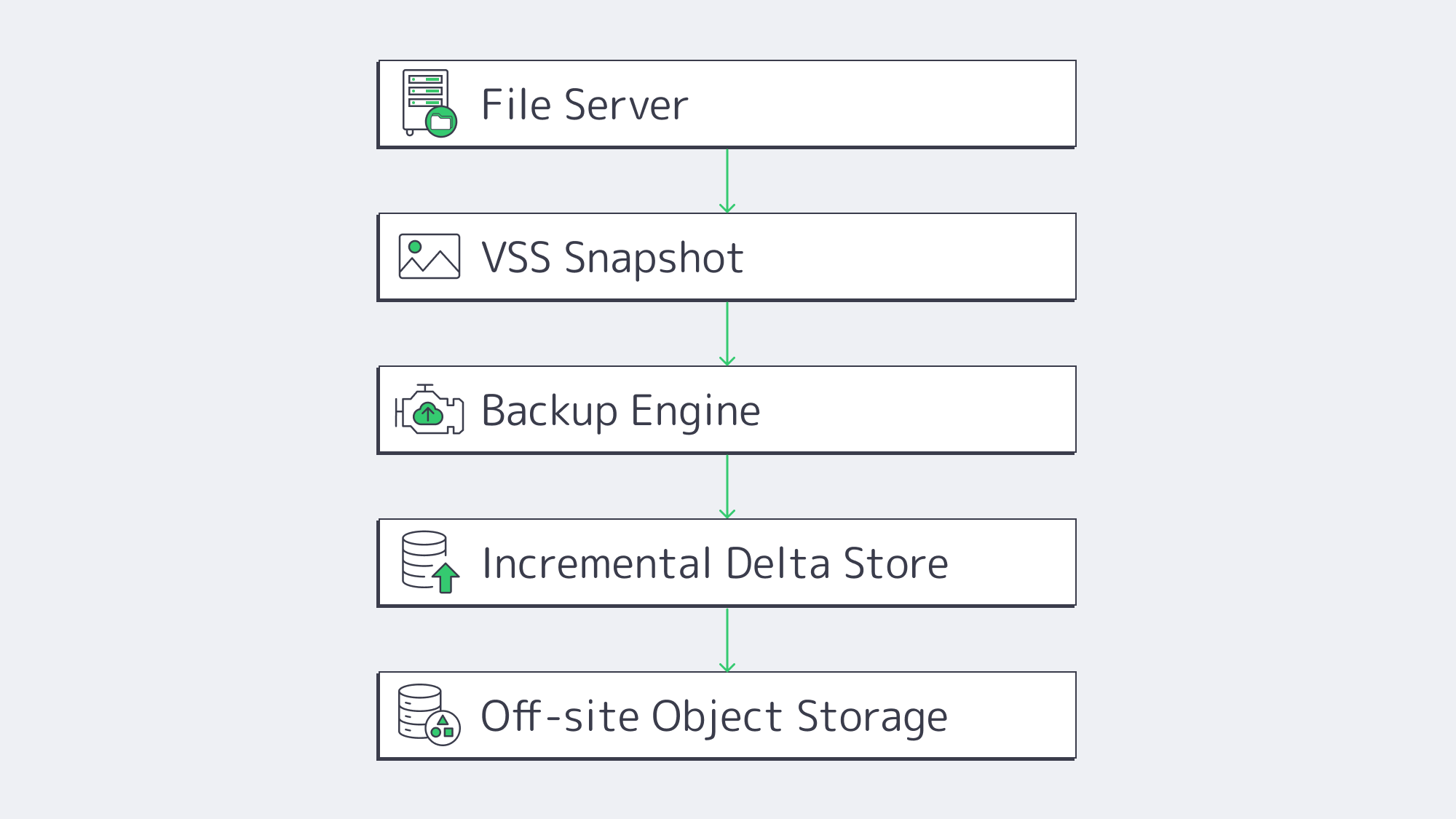

Modern backup solutions have moved away from copying full multi-terabyte volumes all weekend in favor of synthetic incremental snapshots. After an initial full seed, only deltas move. The smaller payloads can cut backup traffic and runtime by as much as 80–95%[2], allowing continuous protection without crushing the network.

Once configured, the process needs no human intervention:

- Job schedulers fire according to tier classification (hourly, nightly, etc.).
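To make the "only deltas move" idea concrete, here is a simplified file-level sketch that compares two manifests mapping paths to content hashes. Real backup engines usually track changed blocks rather than whole files, so treat this as an illustration of the principle; the function names and manifest shape are assumptions.

```python
def changed_paths(previous: dict[str, str], current: dict[str, str]) -> set[str]:
    """Paths that are new, or whose content hash differs, since the last run."""
    return {path for path, digest in current.items() if previous.get(path) != digest}


def deleted_paths(previous: dict[str, str], current: dict[str, str]) -> set[str]:
    """Paths present in the last run that no longer exist."""
    return set(previous) - set(current)


last_run = {"ledger.xlsx": "h1", "plan.docx": "h2", "old.txt": "h3"}
this_run = {"ledger.xlsx": "h1", "plan.docx": "h2-edited", "new.pdf": "h4"}

print(sorted(changed_paths(last_run, this_run)))   # only these need to be shipped
print(sorted(deleted_paths(last_run, this_run)))   # recorded so restores stay accurate
```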

- Post-process hooks immediately replicate backups to off-site targets.

- Report APIs automatically push status to Slack or PagerDuty.
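The three pieces above (scheduled job, post-process hook, status report) can be chained in a few lines. This is a sketch under stated assumptions: `backup`, `replicate`, and `report` are placeholder callables standing in for whatever your backup tool and chat or paging integration actually expose.

```python
from typing import Callable


def run_job(
    share: str,
    backup: Callable[[str], str],
    replicate: Callable[[str], None],
    report: Callable[[dict], None],
) -> bool:
    """Back up one share, replicate the result off-site, and report the outcome."""
    try:
        snapshot_id = backup(share)   # take the incremental snapshot
        replicate(snapshot_id)        # post-process hook: ship it off-site
    except Exception as exc:
        report({"share": share, "status": "failed", "error": str(exc)})
        return False
    report({"share": share, "status": "ok", "snapshot": snapshot_id})
    return True


# Stand-in callables for demonstration only.
events: list[dict] = []
ok = run_job("finance", lambda s: f"{s}-snap-001", lambda snap: None, events.append)
print(ok, events)
```

A scheduler (cron, Task Scheduler, or the backup suite's own) would call `run_job` at the cadence each tier requires.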

Automated filesystem snapshots capture a consistent point-in-time view without taking shares offline, and they eliminate “file locked” errors. On Windows, the Volume Shadow Copy Service (VSS) briefly pauses writes while the shadow copy is created; the backup software then reads point-in-time blocks from the snapshot, so even open files such as PSTs or SQLite databases are captured without downtime. On Linux, LVM snapshots or ZFS snapshots with send/receive play the same role.
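For the Linux side, a scheduler typically just shells out to the snapshot tooling. The sketch below only builds the command lines (it does not run them); the volume group, dataset, and snapshot names and the 5G copy-on-write size are placeholders you would replace. Windows VSS is normally driven by the backup agent itself, so it is not shown.

```python
def lvm_snapshot_cmd(vg: str, lv: str, snap_name: str, size: str = "5G") -> list[str]:
    """Build an lvcreate call that snapshots /dev/<vg>/<lv>."""
    return [
        "lvcreate", "--snapshot",
        "--size", size,          # space reserved for copy-on-write changes
        "--name", snap_name,
        f"/dev/{vg}/{lv}",
    ]


def zfs_snapshot_cmd(dataset: str, snap_name: str) -> list[str]:
    """Build a zfs call that snapshots <dataset>@<snap_name>."""
    return ["zfs", "snapshot", f"{dataset}@{snap_name}"]


print(" ".join(lvm_snapshot_cmd("vg0", "shares", "shares-hourly")))
print(" ".join(zfs_snapshot_cmd("tank/shares", "hourly")))
# To execute for real (requires root): subprocess.run(cmd, check=True)
```

Note that LVM and ZFS snapshots are crash-consistent by default; applications such as databases still need to be quiesced or flushed first if you want an application-consistent copy.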

Testing Restores for Real-World Recovery Before the Fact

Almost 60% of real-world recoveries fail, typically because of corrupt media, missing credentials, or mis-scoped jobs.[3] Each of these risks can be reduced by baking restore tests into the run-book and making them part of regular operations. We suggest the following:

- Weekly random spot checks: Pick three files from a variety of tiers, restore them to an isolated sandbox, and validate their hashes.

- Quarterly full volume recovery drills: Using a new VM or dedicated server host, perform a full Tier 1 recovery. Be sure to time the process and log any gaps identified.

- Verification after any changes: Run ad-hoc restore tests after changes such as new shares being added, tweaks to ACLs, or backup agent upgrades.

Remember, while the auto-mount VM features included in many modern suites are useful for verifying boot or running block-level checksums after a backup, hands-on drills are still needed to validate run-books and credentials. Manual rehearsal also builds muscle memory for when teams are under stress.

Anomaly Monitoring and Wiring Alerts into Operational Fabric

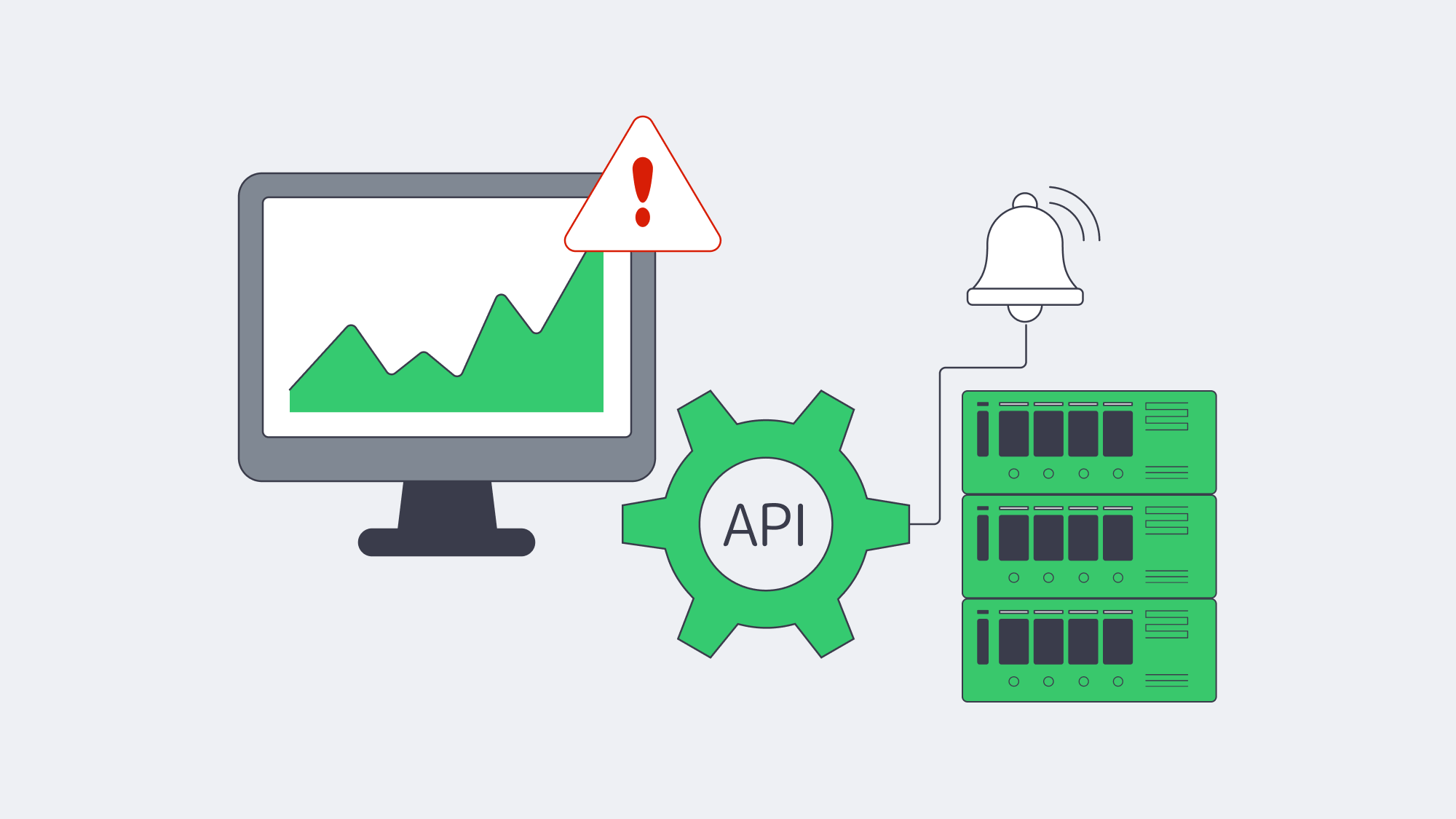

Automation has its perks, but monitoring remains essential. Ransomware encrypts at machine speed, so a backup job that finishes quietly may be masking a disaster that goes unnoticed until the next scheduled run. Anomaly engines observe backup activity, watching for spikes or shifts in compression ratios and file counts so that mass deletions and ballooning deltas are caught early. If your nightly capture is usually around 800 MB and last night’s job hit 25 GB, time is of the essence; next week’s review will be too late.

Back-end metrics also need monitoring for red flags such as low repository disk capacity, rising replication lag, and misconfigured immutable locks. API endpoints or webhooks feeding a SIEM, Prometheus, or similar help with vigilance, and failures can be reported to teams with a one-line cURL call, for example a JSON payload that triggers automatic ticket creation. Restrict triggers to actionable events (failed jobs, anomalies, capacity thresholds) to prevent alert fatigue, and make sure teams are trained to respond to them.

By integrating anomaly-driven monitoring into daily ops, you turn your backups into a built-in early-warning radar. Given that 97% of ransomware strains seek out backup repositories first,[4] this puts you in a good position to catch attacks within minutes, stop encryption in its tracks, and isolate infected shares before they cause downtime.
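A very simple version of that size check compares the latest job against the median of recent runs. This is a sketch of the idea rather than how any particular anomaly engine works; the factor of 5 is an arbitrary assumption you would tune per share.

```python
from statistics import median


def is_anomalous(history_mb: list[float], latest_mb: float, factor: float = 5.0) -> bool:
    """Flag a job whose size exceeds `factor` times the median of recent runs."""
    if not history_mb:
        return False   # no baseline yet, nothing to compare against
    return latest_mb > factor * median(history_mb)


recent_nights = [790, 810, 805, 795, 800]      # roughly 800 MB per night
print(is_anomalous(recent_nights, 25_000))     # the 25 GB night -> True
print(is_anomalous(recent_nights, 820))        # normal variation -> False
```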
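The webhook call mentioned above might look like the following in Python's standard library. The URL, the payload fields, and the `ACTIONABLE` set are placeholders: each receiving system (Slack, PagerDuty, a ticketing tool) defines its own payload schema, so check its documentation before wiring this in.

```python
import json
import urllib.request

# Only these statuses should page someone (assumed list, mirrors the text above).
ACTIONABLE = {"failed", "anomaly", "capacity"}


def should_alert(status: str) -> bool:
    return status in ACTIONABLE


def build_alert(webhook_url: str, job: str, status: str, detail: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST describing a backup event."""
    payload = json.dumps({"job": job, "status": status, "detail": detail}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if should_alert("failed"):
    req = build_alert("https://alerts.example.invalid/hook", "tier1-nightly",
                      "failed", "repository unreachable")
    print(req.get_method(), req.full_url, req.data.decode())
    # To send: urllib.request.urlopen(req, timeout=10)
```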

Modern Data Protection: A Continuous Process, Not a Product

File-server protection today demands a disciplined process. It starts with classifying shares so that resources go where they matter, continues with granular, immutable retention, and relies on VSS or similar technology to automate snapshots. Rehearsing restores builds muscle memory, and wiring anomaly alerts into everyday operations reduces the panic of a real crisis.

Each step is modest on its own, but together they form a last line of defense that hardens backups enough to survive ransomware, hardware fires, and accidental deletes. The results are quantifiable, too: the industry-average downtime following a ransomware attack is 21 days,[4] but with tested restores and immutable backups waiting on clean infrastructure, that recovery window can shrink to hours.

Deploy Your Backup Node Now

Get a dedicated server configured as a backup node within 2 hours. Immutable storage, high-bandwidth links, and 24/7 support.