Ensuring Low Latency with LA‐Based Dedicated Servers

Live experiences rely on more than bandwidth, and real‑time experiences rely on low latency. A couple of milliseconds can determine the difference between a smooth user session and an irritating one in competitive iGaming, live streaming, and time‑sensitive analytics. This article traces the network strategies that deliver ultra‑low latency with Los Angeles dedicated servers, explains why Los Angeles’s transpacific position matters, and shows how to evaluate providers that can turn geography into quantifiable performance for West Coast and Asia‑Pacific users.

Choose Melbicom: 50+ ready-to-go servers, a Tier III-certified LA facility, and a 50+ PoP CDN across 6 continents.

What Can Latency (Not Bandwidth) Tell Us About User Experience?

Latency is the round‑trip time between user and server. In real‑time applications, greater delay worsens interactivity and performance (e.g., gameplay responsiveness, live video quality, voice clarity, and trade execution). Reduced latency enhances interaction; organizations that optimized routing policies recorded clear response‑time improvements. The implication for infrastructure leaders is that, to compete on experience, you must design for the fewest, fastest network paths.

What Makes Los Angeles a Prime Low‑Latency Hub?

Geography and build‑out converge in LA

Roughly one‑third of Internet traffic between the U.S. and Asia passes through Los Angeles, with One Wilshire as the hub. It is one of the most interconnected buildings on the West Coast, supporting 300+ networks and tens of thousands of cross‑connects, including nearly every major Asian carrier. Such a density condenses hop count and makes traffic local.

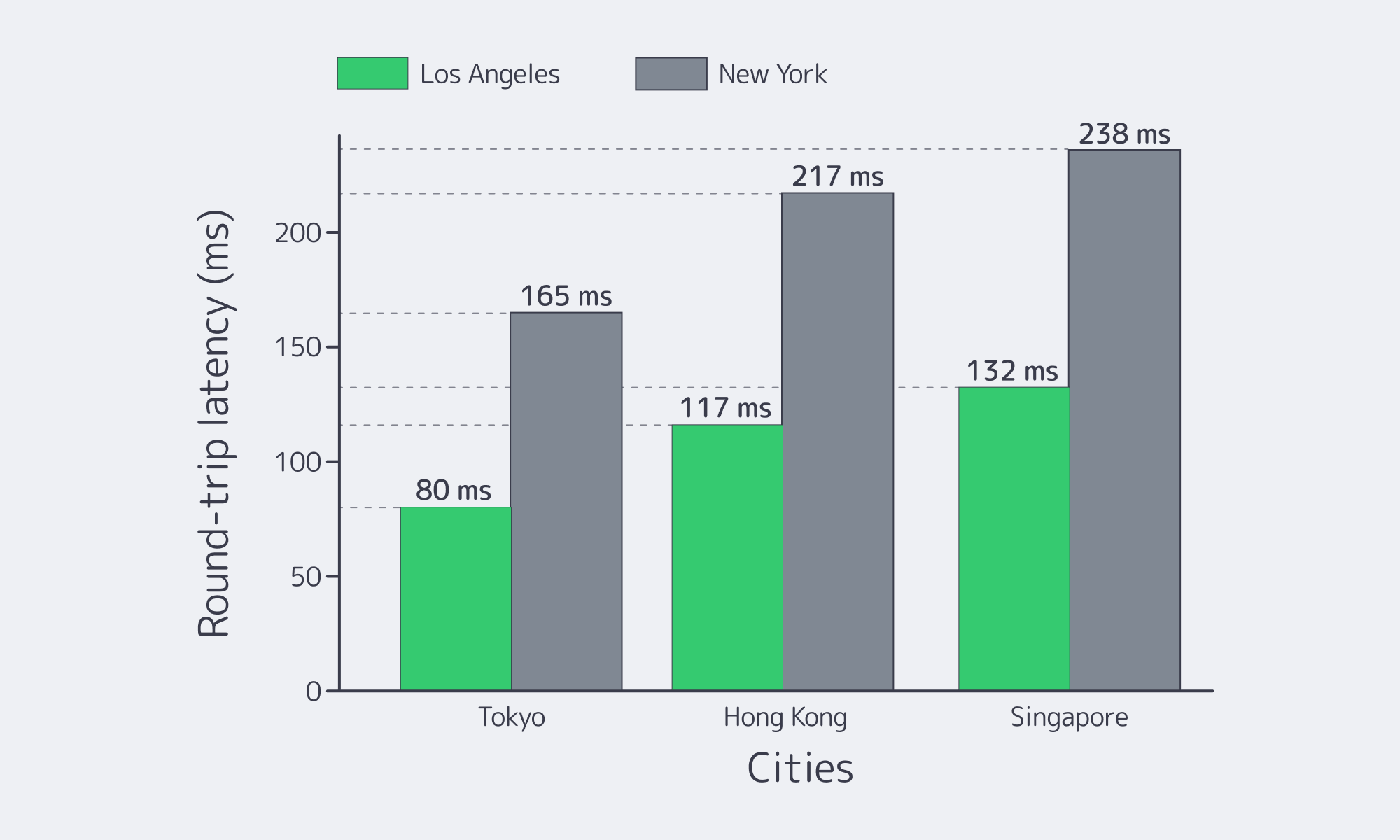

Distance still matters: each additional 1,000 km of fiber adds roughly 5 ms of one-way delay, or about 10 ms to the round trip. Shorter great-circle routes from LA to APAC, enabled by direct subsea landings, visibly reduce ping. Benchmark examples:

| Destination | Approx. latency from LA (ms) | Approx. latency from NYC (ms) |
|---|---|---|
| Tokyo, Japan | 80 | 165 |
| Singapore | 132 | 238 |
| Hong Kong, China | 117 | 217 |

The chart below shows how consistently LA outperforms New York on APAC round trips.

Latency from Los Angeles versus New York to APAC hubs (smaller is better). The west coast position of LA provides significantly shorter transpacific routes.
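
As a rough sanity check on the distance rule of thumb, the sketch below computes propagation delay only. It assumes a refractive index of about 1.468 (a typical single-mode fiber figure; real cables vary) and ignores routing, queuing, and equipment delay. The function names are hypothetical. Under these assumptions, 1,000 km of fiber costs a little under 5 ms in each direction, so the round trip pays that twice.

```python
# Rule-of-thumb propagation delay in optical fiber (illustrative assumptions only).
SPEED_OF_LIGHT_KM_PER_MS = 299_792.458 / 1000  # about 299.79 km per millisecond in vacuum
FIBER_REFRACTIVE_INDEX = 1.468  # assumed typical value for single-mode fiber


def one_way_delay_ms(fiber_km: float) -> float:
    """Propagation delay in one direction over `fiber_km` of fiber."""
    speed_in_fiber = SPEED_OF_LIGHT_KM_PER_MS / FIBER_REFRACTIVE_INDEX
    return fiber_km / speed_in_fiber


def round_trip_delay_ms(fiber_km: float) -> float:
    """Propagation delay there and back over the same fiber length."""
    return 2 * one_way_delay_ms(fiber_km)


if __name__ == "__main__":
    for km in (1_000, 5_000, 9_000):
        print(
            f"{km:>6} km: one-way {one_way_delay_ms(km):.1f} ms, "
            f"round trip {round_trip_delay_ms(km):.1f} ms"
        )
```

Measured pings differ from this floor because cable routes are longer than great-circle distance and every device on the path adds its own delay.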

The infrastructure story is also conclusive

Legacy and next-gen cables land in or backhaul to LA. For example, the Unity Japan–LA 8-fiber-pair system terminates at One Wilshire. Newer builds add capacity and operational resilience: JUNO connects Japan and California with up to 350 Tbps and latency-minded engineering, and Asia Connect Cable-1 will offer 256 Tbps over 16 pairs on a Singapore–LA route. Carrier hotels continue to upgrade (e.g., power to ~20 MVA and cooling enhancements) to maintain high-density interconnection.

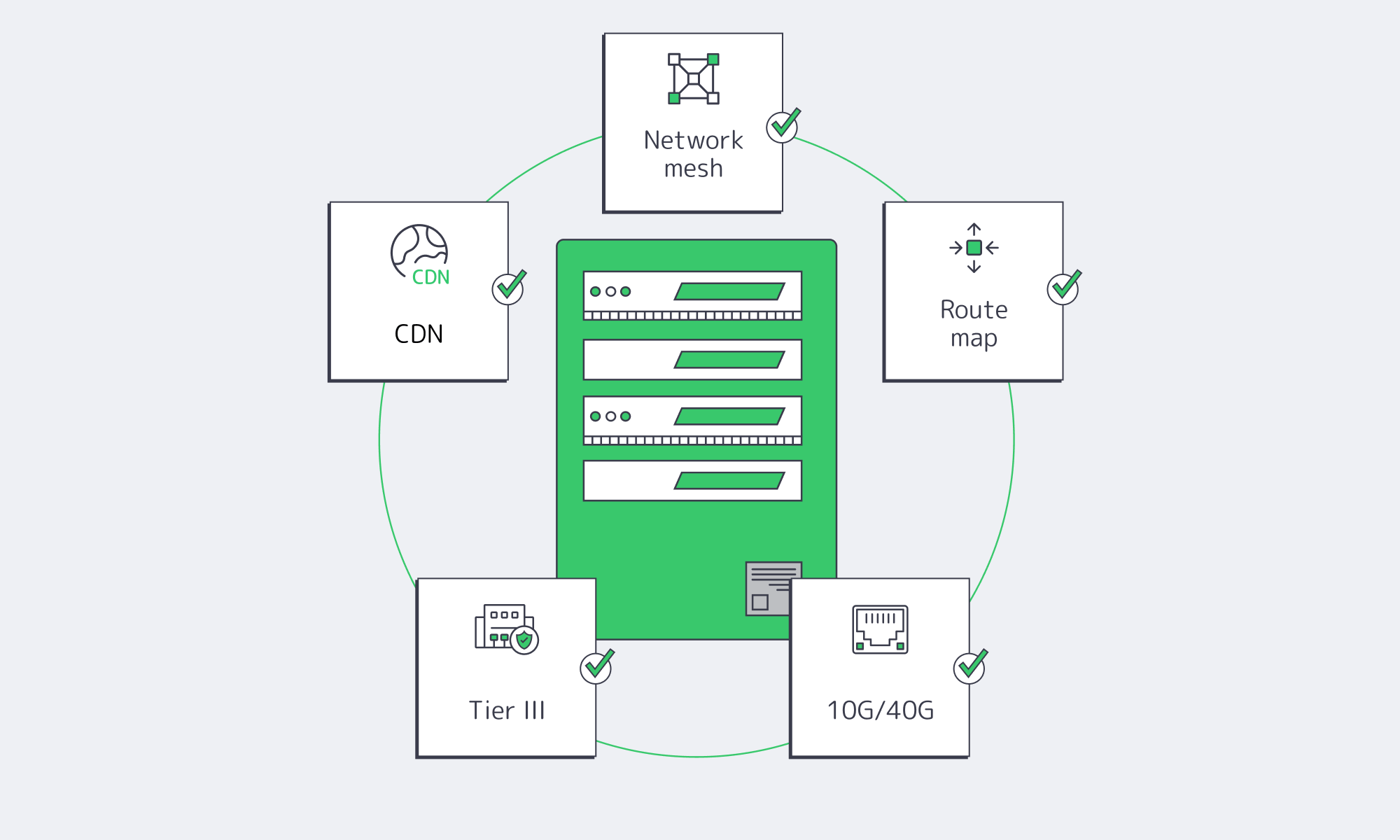

Melbicom’s presence in Los Angeles is in a Tier III‑certified site with substantial power and space, ideal for terminating high‑capacity links and hosting core routers. Practically, this means fast, direct handoffs to last‑mile ISPs and international carriers, reducing detours that introduce jitter.

Why Are LA Dedicated Servers Ideal for Achieving the Lowest Latency?

Direct peering and local interconnectivity

Each additional network hop adds delay. Direct peering shortens the path by interconnecting with target ISPs and content networks within LA's exchanges, which can enable one-hop delivery to end users. This avoids long-haul detours, congestion, and extra points of failure. Melbicom is a member of numerous Internet exchanges (25+ worldwide) and peers with access and content networks in Los Angeles. When a Los Angeles dedicated server serves a cable-broadband user, packets leave our edge and hand off to the user's ISP within the metro.

Public IX peering is augmented by private interconnects (direct cross‑connects), providing deterministic microsecond‑level handoff on high‑volume routes. This approach is especially effective for dedicated servers in Los Angeles serving streaming applications and multiplayer lobbies, where the first hop sets the tone for the entire session.

Optimized BGP routing (intelligent path selection)

Default BGP prefers the fewest AS hops, not the lowest latency. BGP route-optimization platforms close that gap by continuously probing multiple upstreams and steering traffic to the path with the least delay and loss. When a better path becomes available (or the current path fails), the network shifts to it within seconds. Tuning BGP policies has reduced latency for 65% of organizations.

Melbicom maintains multi‑homed BGP with numerous Tier‑1/Tier‑2 carriers and can announce customer prefixes and use communities to control traffic flow. In practice, when a Los Angeles dedicated server can egress via a San Jose route or a Seattle route to reach Tokyo, we at Melbicom prefer the better‑measured route—particularly during peak times—so players or viewers see steady pings rather than oscillations.
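
The difference between hop-count selection and measurement-driven selection can be sketched in a few lines. This is a simplified illustration, not how any particular route optimizer or Melbicom's network is implemented: real BGP best-path selection evaluates local preference and other attributes before AS-path length, and the `PathProbe` type, the 1% loss threshold, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PathProbe:
    upstream: str      # hypothetical label for an upstream route
    as_path_len: int   # number of AS hops advertised for the route
    rtt_ms: float      # round-trip time measured by active probes
    loss_pct: float    # packet loss measured by active probes


def default_bgp_choice(paths):
    """Simplified stand-in for default BGP: the shortest AS path wins."""
    return min(paths, key=lambda p: p.as_path_len)


def performance_choice(paths, max_loss_pct=1.0):
    """Prefer the lowest-RTT path among those under the loss threshold."""
    healthy = [p for p in paths if p.loss_pct <= max_loss_pct]
    candidates = healthy or list(paths)  # fall back if every path is lossy
    return min(candidates, key=lambda p: (p.rtt_ms, p.loss_pct))


if __name__ == "__main__":
    # Made-up probe results for illustration, not real measurements.
    probes = [
        PathProbe("via-san-jose", as_path_len=3, rtt_ms=98.0, loss_pct=0.0),
        PathProbe("via-seattle", as_path_len=2, rtt_ms=121.0, loss_pct=0.2),
    ]
    print("hop-count pick:  ", default_bgp_choice(probes).upstream)
    print("performance pick:", performance_choice(probes).upstream)
```

With these sample values the two policies disagree: the shorter AS path is the slower one, which is the case performance routing is meant to catch.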

Low-contention, high-bandwidth connectivity

Bandwidth is not the same as latency, but bandwidth headroom prevents queues. When a link saturates, packets queue and latency rises. The fix is large, dedicated ports backed by significant backbone capacity. Melbicom provides 1–200 Gbps per server on a global network of more than 14 Tbps. Guaranteed bandwidth (no ratios) reduces contention as traffic rises. For teams that need a 10G dedicated server in Los Angeles, the practical advantage is that bursts do not cause bufferbloat; 99th-percentile latency stays close to its idle level.

Modern data-center fabrics (including cut-through switching) and tuned TCP stacks further reduce per-hop and per-flow delay. In short, wide pipes and strict control of oversubscription keep latency consistently low.
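
To see why headroom matters, the sketch below uses the textbook M/M/1 queue as a stand-in for a single port. That is an assumption made for illustration: real traffic is burstier than the Poisson arrivals this model expects, so treat the numbers as a lower bound on the effect. The function names and the 1,500-byte packet size are likewise illustrative.

```python
import math


def service_time_us(packet_bytes: int, link_gbps: float) -> float:
    """Time to serialize one packet onto the link, in microseconds."""
    bits_per_us = link_gbps * 1_000  # 1 Gbps moves 1,000 bits per microsecond
    return packet_bytes * 8 / bits_per_us


def mm1_delay_us(packet_bytes, link_gbps, utilization, percentile=None):
    """Per-packet delay (queueing plus transmission) in an M/M/1 model.

    Returns the mean when `percentile` is None; otherwise the requested
    percentile (e.g. 0.99), using the exponential sojourn time of M/M/1.
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    mean = service_time_us(packet_bytes, link_gbps) / (1 - utilization)
    if percentile is None:
        return mean
    return -math.log(1 - percentile) * mean


if __name__ == "__main__":
    for rho in (0.30, 0.70, 0.95, 0.99):
        mean = mm1_delay_us(1500, 10, rho)
        p99 = mm1_delay_us(1500, 10, rho, percentile=0.99)
        print(f"utilization {rho:.0%}: mean {mean:7.1f} us, p99 {p99:7.1f} us")
```

The delay grows as 1 / (1 - utilization), so a port that is comfortable at 30% load becomes sharply slower near saturation. That is the argument for buying capacity margin.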

Edge caching and CDN as a latency complement

A CDN will not replace your origin, but it shortens the distance to users for cacheable content. With 50+ CDN locations, Melbicom serves cached assets close to users, leaving only dynamic calls to reach your LA origin over a short, fast path. For Los Angeles dedicated server deployments such as video streaming platforms or iGaming apps with frequent patch downloads, caching heavy objects at the edge while keeping stateful gameplay or session control in Los Angeles offers the best of both worlds.

How to Choose a Low-Latency LA Dedicated Server Provider

Reduce the checklist to what actually moves the latency needle:

- Leverage LA’s peering ecosystem. Prefer providers with carrier‑dense coverage (One Wilshire adjacency, strong presence at Any2/Equinix IX). Melbicom peers extensively in Los Angeles to keep routes short.

- Demand route intelligence. Ask about multi-homed BGP and on-net performance routing; route control is associated with lower real-world pings. Melbicom supports BGP communities and BYOIP so you can tune flows.

- Buy bandwidth as latency insurance. When in doubt, choose 10G+ ports; the capacity margin prevents queues. With Melbicom, this sits on a guaranteed-throughput network engineered for up to 200 Gbps of per-server bandwidth.

- Confirm facility caliber. Power/cooling capacity and Tier III design minimize risk. Melbicom's LA facility meets that bar and supports high-density interconnects.

- Localize heavy objects with a CDN. Offload content to 50+ PoPs, leaving stateful traffic at your LA origin.
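
When comparing providers against this checklist, it helps to measure rather than trust a spec sheet. The sketch below times TCP handshakes as a rough proxy for round-trip time and summarizes the samples; the helper names and the placeholder hostname in the comment are hypothetical. Handshake timing includes some server-side overhead, so it is an approximation, not a substitute for a proper latency test from your users' networks.

```python
import math
import socket
import statistics
import time


def tcp_connect_rtt_ms(host: str, port: int = 443, timeout: float = 2.0) -> float:
    """Time one TCP handshake, a rough proxy for network round-trip time."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # connection established; close immediately
    return (time.perf_counter() - start) * 1000


def summarize(samples_ms):
    """Return median, nearest-rank 99th percentile, and jitter (std dev)."""
    ordered = sorted(samples_ms)
    if len(ordered) < 2:
        raise ValueError("need at least two samples")
    p99_index = math.ceil(0.99 * len(ordered)) - 1
    return {
        "median": statistics.median(ordered),
        "p99": ordered[p99_index],
        "jitter": statistics.pstdev(ordered),
    }


# Example usage (placeholder hostname; replace with a provider's test endpoint):
#   samples = [tcp_connect_rtt_ms("test-server.example") for _ in range(50)]
#   print(summarize(samples))
```

Comparing the median against the 99th percentile during peak hours shows whether a route is merely fast on average or consistently fast, which is what real-time sessions depend on.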

LA Low-Latency Networking: What's Next?

Transpacific routes are gaining capacity and speed. JUNO brings up to 350 Tbps of Japan–California capacity with a latency-minded architecture; Asia Connect Cable-1 will add 256 Tbps on a Singapore–LA arc. Industry-wide investment in subsea systems is also rising quickly, at about $13 billion across 2025–2027, and global bandwidth has tripled since 2020, driven by cloud and AI. At the routing layer, SDN and segment-routing technologies are coming of age, enabling latency-aware path control at large scale. Within the data center, faster NICs and kernel-bypass stacks shave microseconds that add up across millions of packets.

The practical takeaway: for APAC-facing workloads and West Coast user bases, LA will remain a first-choice hub, with more direct routes, better failover, and less jitter each year.

Where LA Servers Make Latency a Competitive Advantage

If milliseconds determine your success, Los Angeles turns geography into an SLA-grade asset, without routing detours. The playbook is settled: co-locate near Pacific cables, peer aggressively within the metro, deploy smart BGP to keep paths optimal, and provision ports and backbone capacity to avoid queues. Add a CDN to localize heavy objects, and you can deliver real-time experiences that feel local on the West Coast and responsive in East Asia. For teams planning to deploy a dedicated server in Los Angeles (or expand an existing West Coast presence), the low-latency benefit is measurable in engagement, retention, and revenue.

That is the result we at Melbicom are designing for: the physical benefits of LA plus network engineering that squeezes out wasted milliseconds. Hosting a well-peered, high-bandwidth dedicated server in Los Angeles remains among the most defensible infrastructure moves you can make.

Deploy in Los Angeles today

Launch low‑latency dedicated servers in our LA facility with multi‑homed BGP, rich peering, 50+ CDN PoPs, and up to 200 Gbps per server.