Blueprint For High-Performance Backup Servers

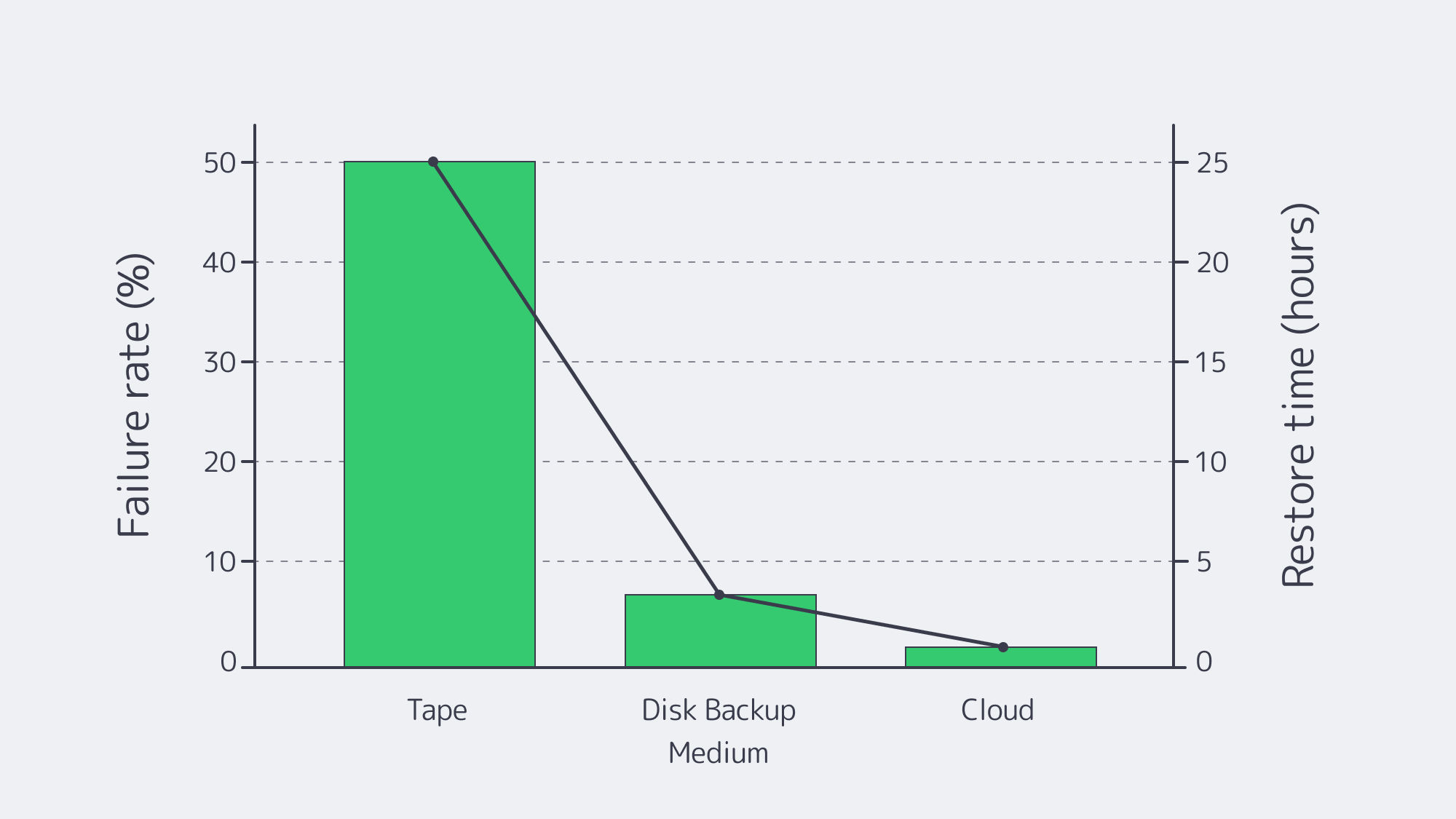

Data volumes are exploding: industry trackers expect global data to reach 175 zettabytes (Forbes) within a few years, and that growth is colliding with relentless uptime targets. Yet far too many teams still lean on tape libraries, where restore failure rates in some audits exceed 50 percent. To meet real-world recovery windows, enterprises are pivoting to purpose-built backup servers that combine high-core CPUs, large RAM caches, 25–40 GbE links, resilient RAID-Z or erasure-coded pools, and cloud object storage that can stay immutable for years. The sections below map the essential design decisions.

Right-Sizing the Compute Engine

High-Core CPUs for Parallel Compression

Modern backups stream dozens of concurrent jobs, each compressed, encrypted, or deduplicated in flight. Tests show that Zstandard (Zstd) compression spread across eight cores can deliver 40 percent more effective throughput than sending the data uncompressed, and throughput keeps scaling almost linearly up to 32–64 cores. For a dedicated backup server, aim for 40–60 logical cores (dual-socket x86 or high-core ARM both work) so the CPU never bottlenecks nightly deltas or multi-terabyte restores.
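The parallelism behind that scaling comes from cutting a backup stream into independent chunks and compressing each one on its own core (the `zstd -T0` command-line option, for example, uses all available cores). The sketch below shows the chunk-and-fan-out pattern, with the standard library's `zlib` standing in for Zstd; the 4 MiB chunk size and worker count are illustrative assumptions. In CPython, `zlib` releases the GIL while compressing, so a thread pool can keep several cores busy.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 4 * 1024 * 1024  # hypothetical 4 MiB chunk size


def split_chunks(data: bytes, chunk_size: int = CHUNK_SIZE) -> list[bytes]:
    """Cut a backup stream into fixed-size chunks that compress independently."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def compress_parallel(data: bytes, workers: int = 8, level: int = 3,
                      chunk_size: int = CHUNK_SIZE) -> list[bytes]:
    """Compress every chunk on a worker thread; output order matches input order."""
    chunks = split_chunks(data, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves ordering, so frames can be decompressed in sequence
        return list(pool.map(lambda c: zlib.compress(c, level), chunks))


def decompress_frames(frames: list[bytes]) -> bytes:
    """Reverse of compress_parallel: decompress each frame and concatenate."""
    return b"".join(zlib.decompress(f) for f in frames)


if __name__ == "__main__":
    payload = b"backup block " * 1_000_000  # ~13 MB of highly compressible data
    frames = compress_parallel(payload, workers=8)
    assert decompress_frames(frames) == payload
    ratio = len(payload) / sum(len(f) for f in frames)
    print(f"{len(frames)} frames, compression ratio {ratio:.1f}x")
```

Compressing chunks independently costs a little ratio, because matches cannot span chunk boundaries, in exchange for near-linear use of extra cores.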

RAM as the Deduplication Fuel

Catalogs, block hash tables, and disk caches all live in memory. A practical rule: 1 GB of ECC RAM per terabyte of protected data under heavy deduplication, or roughly 4 GB per CPU core in compression-heavy environments. In practice, 128 GB is a baseline; petabyte-class repositories often scale to 512 GB–1 TB.
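Those rules of thumb translate into a quick sizing helper. Taking the largest of the applicable requirements is an assumption of this sketch; the text presents the per-TB and per-core rules as guides for different workload mixes, with 128 GB as the floor.

```python
import math


def recommended_ram_gb(protected_tb: float, cores: int, dedup_heavy: bool = True,
                       compression_heavy: bool = True, floor_gb: int = 128) -> int:
    """Return the largest applicable rule-of-thumb requirement, rounded up to whole GB."""
    candidates = [floor_gb]                    # practical 128 GB baseline
    if dedup_heavy:
        candidates.append(protected_tb * 1.0)  # ~1 GB of ECC RAM per TB protected
    if compression_heavy:
        candidates.append(cores * 4.0)         # ~4 GB per CPU core
    return math.ceil(max(candidates))


if __name__ == "__main__":
    for tb, cores in ((10, 16), (100, 48), (1000, 60)):
        print(f"{tb:>5} TB, {cores} cores -> {recommended_ram_gb(tb, cores)} GB")
```

A petabyte-class repository lands near 1 TB of RAM under these rules, in line with the 512 GB–1 TB range above.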

25–40 GbE Networking: The Non-Negotiable Modern Backbone

Why Gigabit No Longer Cuts It

At 1 Gbps, a 10 TB restore takes almost a day. A properly bonded 40 GbE link cuts that to well under an hour, and even 25 GbE can sustain close to 3 GB/sec, enough to stream multiple VM restores while new backups run.

| Link speed | Approx. time to restore 10 TB |
|---|---|
| 1 Gbps | 22 h 45 m |
| 10 Gbps | 2 h 15 m |
| 25 Gbps | 54 m |
| 40 Gbps | 34 m |
| 100 Gbps | 14 m |
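The figures above follow from simple line-rate arithmetic: 10 TB is 8 × 10^13 bits, so a 10 Gbps link needs 8,000 seconds (about 2 h 13 m) at full line rate. The table's values correspond to roughly 95–99 percent of line rate. The helper below reproduces that math; the `efficiency` factor, standing in for protocol overhead and storage stalls, is an assumption you should calibrate against your own measurements.

```python
def restore_time_seconds(data_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Seconds to move data_tb decimal terabytes over a link_gbps link.

    efficiency is the fraction of line rate actually achieved (assumed, not measured).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_tb * 1e12 * 8
    return bits / (link_gbps * 1e9 * efficiency)


def fmt(seconds: float) -> str:
    """Format a duration as 'H h MM m', or 'M m' when under an hour."""
    minutes = round(seconds / 60)
    hours, mins = divmod(minutes, 60)
    return f"{hours} h {mins:02d} m" if hours else f"{mins} m"


if __name__ == "__main__":
    for gbps in (1, 10, 25, 40, 100):
        print(f"{gbps:>3} Gbps: {fmt(restore_time_seconds(10, gbps, efficiency=0.97))}")
```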

Dual uplinks ensure that performance never hinges on a single cable, switch, or NIC firmware quirk; a single 100 GbE port, if budgets allow, adds headroom but not that redundancy. Melbicom provisions up to 200 Gbps per server, letting architects burst for a first full backup or a massive restore, then dial back to a steady-state committed rate.

Disk Pools Built to Survive

RAID-Z: Modern Parity Without the Drawbacks

Disk rebuild times balloon as drive sizes hit 18 TB+. RAID-Z2 or Z3 (double or triple parity under OpenZFS) tolerates two or three simultaneous failures and adds block-level checksums to scrub silent corruption. Typical layouts use 8+2 or 8+3 vdevs; parity overhead lands near 20–30%, a small premium for long-haul durability.
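The 20–30% figure comes straight from the vdev layout: parity disks divided by total disks, so 2/10 = 20% for 8+2 and 3/11 ≈ 27% for 8+3. The sketch below computes that overhead and a rough usable capacity; the four-vdev, 18 TB example pool is hypothetical, and real ZFS capacity comes in somewhat lower because of allocation padding, metadata, and reserved slop space. Failure tolerance applies per vdev.

```python
def parity_overhead(data_disks: int, parity_disks: int) -> float:
    """Fraction of a vdev's raw capacity spent on parity."""
    if data_disks < 1 or parity_disks < 0:
        raise ValueError("need at least one data disk and non-negative parity")
    return parity_disks / (data_disks + parity_disks)


def usable_tb(vdevs: int, data_disks: int, disk_tb: float) -> float:
    """Rough usable capacity: data disks only, before ZFS padding, metadata and slop."""
    return vdevs * data_disks * disk_tb


if __name__ == "__main__":
    for label, d, p in (("RAID-Z2 8+2", 8, 2), ("RAID-Z3 8+3", 8, 3)):
        print(f"{label}: {parity_overhead(d, p):.0%} parity, "
              f"~{usable_tb(4, d, 18):.0f} TB usable from four vdevs of 18 TB drives")
```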

Note: RAID-Z on dedicated servers requires direct disk access (HBA/JBOD or a controller in IT/pass-through mode) and is not supported behind a hardware RAID virtual drive. Expect to provision it via the server’s KVM/IPMI console; it’s best suited for administrators already comfortable with ZFS.

Erasure Coding for Dense, Distributed Repositories

Where hundreds of drives or multiple chassis are in play, 10+6 erasure codes can survive up to six disk losses with roughly 1.6× storage overhead—less wasteful than mirroring, far safer than RAID6. The CPU cost is real, but with 40-plus cores already in the design, parity math rarely throttles throughput.
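One way to compare "how safe" these layouts are is the probability that more disks fail within one rebuild window than the layout tolerates. The sketch below uses a binomial model under a strong simplifying assumption: every disk fails independently with the same probability `p_fail`. Real failures are often correlated (same batch, same enclosure), so treat the output as a relative ranking, not an absolute risk figure. The 1% per-window probability is hypothetical.

```python
from math import comb


def loss_probability(n_disks: int, tolerated: int, p_fail: float) -> float:
    """P(more than `tolerated` of n_disks fail in the same window), assuming independence."""
    return sum(comb(n_disks, k) * p_fail**k * (1 - p_fail) ** (n_disks - k)
               for k in range(tolerated + 1, n_disks + 1))


LAYOUTS = {
    # name: (disks in the group, failures tolerated, raw-to-usable storage overhead)
    "Mirror (2-way)": (2, 1, 2 / 1),
    "RAID6 8+2": (10, 2, 10 / 8),
    "RAID-Z3 8+3": (11, 3, 11 / 8),
    "EC 10+6": (16, 6, 16 / 10),
}

if __name__ == "__main__":
    p = 0.01  # hypothetical per-disk failure probability during one rebuild window
    for name, (n, t, overhead) in LAYOUTS.items():
        print(f"{name:15} overhead {overhead:.2f}x  P(loss) {loss_probability(n, t, p):.1e}")
```

Under this model the 10+6 code comes out orders of magnitude safer than RAID6 while using less raw capacity than a mirror, which is the trade-off described above.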

Tiered Pools for Hot, Warm, and Cold Data

Fast NVMe mirrors or RAID 10 capture the most recent snapshots, then policy engines migrate blocks to large HDD RAID-Z sets for 30- to 60-day retention. Older increments age into the cloud. The result: restores of last night’s data scream, while decade-old compliance archives consume pennies per terabyte per month.
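A policy engine of this kind boils down to mapping each backup's age to a tier and emitting the moves needed to reconcile current placement with policy. The sketch below is a hypothetical illustration: the tier names and the 7-day and 60-day thresholds are assumptions chosen to match the ranges in this article, not the behavior of any particular backup product.

```python
from datetime import date
from typing import Dict, List, Optional, Tuple

# Hypothetical thresholds: NVMe for ~7 days, HDD RAID-Z until day 60, then cloud
HOT_DAYS = 7
WARM_DAYS = 60


def tier_for(created: date, today: date,
             hot_days: int = HOT_DAYS, warm_days: int = WARM_DAYS) -> str:
    """Pick the tier a backup should live on, based purely on its age in days."""
    age = (today - created).days
    if age < 0:
        raise ValueError("backup date lies in the future")
    if age <= hot_days:
        return "nvme"
    if age <= warm_days:
        return "hdd"
    return "cloud"


def plan_moves(created: Dict[str, date], current_tier: Dict[str, str],
               today: date) -> List[Tuple[str, Optional[str], str]]:
    """List (backup_id, from_tier, to_tier) for every backup whose tier should change."""
    moves = []
    for backup_id, day in sorted(created.items()):
        target = tier_for(day, today)
        if current_tier.get(backup_id) != target:
            moves.append((backup_id, current_tier.get(backup_id), target))
    return moves
```

Keeping the decision (`tier_for`) separate from the data movement makes the policy easy to test and to rerun idempotently on a schedule.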

Cloud Object Storage: The New Off-Site Tape

Immutability by Design

S3-compatible object storage can enforce WORM (write once, read many) locks: in compliance mode, once a backup is written not even an administrator can alter or delete it until the lock period expires. That single feature has displaced many tape vaults and courier schedules, and in recent surveys 60 percent of enterprises already send backup copy jobs to S3-class endpoints.
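With the AWS SDK for Python (boto3), a retention lock is set per object through the `ObjectLockMode` and `ObjectLockRetainUntilDate` arguments of `put_object`; the bucket itself must have been created with Object Lock enabled (`ObjectLockEnabledForBucket=True` on `create_bucket`). The sketch below builds those arguments and uploads through any client object that exposes `put_object`. The endpoint URL, bucket, and key names are hypothetical, and whether a given S3-compatible provider implements Object Lock with the same semantics as AWS is an assumption to verify with that provider.

```python
from datetime import datetime, timedelta, timezone

VALID_MODES = ("GOVERNANCE", "COMPLIANCE")


def object_lock_put_args(bucket, key, body, retain_days, mode="COMPLIANCE", now=None):
    """Build keyword arguments for an S3 PutObject call with an Object Lock retention period."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {VALID_MODES}")
    if retain_days <= 0:
        raise ValueError("retain_days must be positive")
    now = now or datetime.now(timezone.utc)
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ObjectLockMode": mode,
        "ObjectLockRetainUntilDate": now + timedelta(days=retain_days),
        # Object Lock uploads must carry an integrity checksum (Content-MD5 or an SDK checksum)
        "ChecksumAlgorithm": "SHA256",
    }


def upload_immutable(s3_client, bucket, key, body, retain_days, mode="COMPLIANCE"):
    """Upload one backup object whose version stays locked until the retain-until date."""
    return s3_client.put_object(**object_lock_put_args(bucket, key, body, retain_days, mode))


# Usage with boto3 (endpoint URL, bucket, and file names are hypothetical):
#   import boto3
#   s3 = boto3.client("s3", endpoint_url="https://s3.example.com")
#   with open("job-2024-06-30.bak", "rb") as f:
#       upload_immutable(s3, "backup-archive", "daily/job-2024-06-30.bak", f, retain_days=365)
```

Governance mode lets principals holding a bypass permission lift the lock, which is useful while testing; compliance mode is the setting that matches the "not even an administrator" guarantee.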

Bandwidth & Budget Considerations

Seeding multi-terabyte histories over a WAN can be painful, but after the first full backup, forever-incremental jobs plus deduplicated synthetic fulls shrink daily transfers by 90 percent or more. Data pulled back from Melbicom's S3 stays within the provider's network, avoiding the hefty egress fees typical of hyperscalers.
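The daily savings come from block-level deduplication: each block is identified by a hash, only unseen blocks cross the wire, and a synthetic full is just an ordered list of hashes pointing at blocks already stored. The toy store below illustrates that idea with fixed-size blocks keyed by SHA-256; the block size is an assumption, and many real products use variable-size, content-defined chunking instead so that inserting data does not shift every later block boundary.

```python
import hashlib

BLOCK_SIZE = 4096  # hypothetical fixed block size


class DedupStore:
    """Toy block store: keeps one copy of each unique block, keyed by its SHA-256."""

    def __init__(self, block_size: int = BLOCK_SIZE):
        self.block_size = block_size
        self.blocks: dict[str, bytes] = {}

    def backup(self, data: bytes) -> tuple[list[str], int]:
        """Store a backup; return its recipe (ordered hashes) and the bytes actually sent."""
        recipe, sent = [], 0
        for i in range(0, len(data), self.block_size):
            block = data[i:i + self.block_size]
            digest = hashlib.sha256(block).hexdigest()
            if digest not in self.blocks:  # only new blocks cost bandwidth and disk
                self.blocks[digest] = block
                sent += len(block)
            recipe.append(digest)
        return recipe, sent

    def restore(self, recipe: list[str]) -> bytes:
        """Rebuild a full backup image from its recipe."""
        return b"".join(self.blocks[d] for d in recipe)


if __name__ == "__main__":
    import os
    store = DedupStore()
    day1 = os.urandom(BLOCK_SIZE * 1000)  # ~4 MB "full" backup
    day2 = bytearray(day1)
    day2[0:100] = os.urandom(100)         # change bytes inside a single block
    _, sent1 = store.backup(day1)
    recipe2, sent2 = store.backup(bytes(day2))
    assert store.restore(recipe2) == bytes(day2)
    print(f"full: {sent1} bytes sent, next day: {sent2} bytes sent")
```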

End-to-End Architecture Checklist

- Compute: 40–60 cores, 128 GB+ ECC RAM.

- Network: Two 25/40 GbE ports or one 100 GbE; redundant switches.

- Disk Landing Tier: NVMe RAID 10 or RAID-Z2, sized for 7–30 days of hot backups.

- Capacity Tier: HDD RAID-Z3 or erasure-coded pool sized for 6–12 months.

- Cloud Tier: Immutable S3 bucket for long-term, off-site retention.

- Automation: Policy-based aging, checksum scrubbing, quarterly restore tests.
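The restore-test item on that checklist can be automated with a checksum manifest: record a SHA-256 for every file at backup time, restore into a scratch directory, and compare. The sketch below is a hypothetical, filesystem-level illustration of that workflow; it complements, rather than replaces, storage-level scrubbing such as ZFS's `zpool scrub`.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large backup files don't fill RAM."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root, POSIX style) to its SHA-256."""
    return {p.relative_to(root).as_posix(): file_digest(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def verify_restore(expected: dict[str, str], restored_root: Path) -> dict[str, list[str]]:
    """Compare a restored tree against the manifest captured at backup time."""
    actual = build_manifest(restored_root)
    return {
        "missing": sorted(set(expected) - set(actual)),
        "corrupt": sorted(k for k in expected.keys() & actual.keys()
                          if expected[k] != actual[k]),
        "unexpected": sorted(set(actual) - set(expected)),
    }
```

A restore test passes only when all three lists come back empty; storing the manifest alongside the immutable cloud copy keeps it out of reach of the same failure that might damage the backup.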

With that foundation, a backup server can meet aggressive recovery time objectives (RTOs) without the lottery odds of legacy tape.

Why Dedicated Hardware Still Matters

General-purpose hyperconverged rigs juggle virtualization, analytics, and backup, but inevitably compromise one workload for another. Purpose-built backup hardware locks in known-good firmware revisions, keeps its management network isolated, and lets architects tune BIOS and OS settings strictly for streaming I/O.

Melbicom maintains 1,000+ preconfigured servers across 20 Tier III and Tier IV facilities, each cabled to high-capacity spines and worldwide CDN PoPs. We spin up storage-dense nodes with 12, 24, or 36 drive bays within two hours and back them with around-the-clock support. That combination of hardware agility and location diversity lets enterprises place a backup node as little as 2 ms from production.

Choosing Windows, Linux, or Both

- Linux (ZFS, Btrfs, Ceph): Favored for open-source tooling and RAID-Z through OpenZFS. Recent kernel releases can push per-core I/O to about 4 GB/sec, more than a 25 GbE link carries (roughly 3.1 GB/sec).

- Windows Server (ReFS + Storage Spaces): Provides ReFS block cloning (fast clone) and built-in deduplication; best when deep Active Directory integration trumps everything else.

- Mixed estates often deploy dual backup proxies: Linux for raw throughput, Windows for application-consistent snapshots of SQL, Exchange, and VSS-aware workloads. Networking, storage, and cloud tiers stay identical; only the proxy role changes.

Modernizing from Tape: A Brief Reality Check

Tape once ruled because spinning disk was expensive. Yet LTO-8 media rarely writes at its rated 360 MB/sec in the real world, and restore verification has uncovered failure rates as high as 77 percent in some audits (Unitrends). Transport delays, worn drive mechanics, and degraded media compound the risk. By contrast, a RAID-Z3 vdev can lose up to three disks and still serve reads, while a cloud object store replicates fragments across metro or continental regions. Cost per terabyte remains competitive with tape libraries once you factor in robotic arms, vault fees, and logistics.

Putting It All Together

Purpose-built backup servers now start with raw network speed, pile on compute for compression, fortify storage with modern parity schemes, and finish with immutable cloud tiers. Adopt those pillars and the nightly backup window shrinks, full-site recovery takes hours instead of days, and compliance auditors stop reaching for red pens.

Conclusion: Blueprint for Next-Gen Backup

High-core processors, capacious RAM, 25–40 GbE lanes, RAID-Z or erasure-coded pools, and an immutable cloud tier—this blueprint elevates backup from an insurance policy to a competitive advantage. Architects who embed these elements achieve predictable backup windows, verifiable restores, and long-term retention without the fragility of tape.
