
Dedicated Servers for Buffer-Free Streaming at Scale

Video now dominates the internet—industry estimates put it at more than 80% of all traffic. Audiences expect instant starts, crisp 4K playback, and zero buffering.

That expectation collides with physics and infrastructure. A single marquee live event has already reached 65 million concurrent streams, and a mere three seconds of buffering can drive a majority of viewers to abandon a session. Meanwhile, a 4K stream typically requires a ~25 Mbps connection and consumes 7–10 GB per hour. Multiply by thousands, or millions, of viewers and the arithmetic becomes unforgiving.

Choose Melbicom: 1,300+ ready-to-go servers, 21 global Tier IV & III data centers, and a 55+ PoP CDN across 6 continents.

Choosing the right dedicated server is no longer about a generic CPU and a 1 Gbps port. It’s about massive bandwidth headroom, predictable performance under concurrency, CPU/GPU horsepower for real-time encoding, and NVMe storage that feeds the network at line rate. This piece lays out the modern requirements for video streaming hosting, mentions legacy considerations only for context, and shows where dedicated infrastructure, paired with a global CDN, gives you both smooth playback and room to scale.

What “Modern” Means for Video Streaming Server Hosting

Four pillars define streaming-ready servers today:

- Network throughput and quality. Think 10/40/100+ Gbps per server. Low-latency routing, strong peering, and non-oversubscribed ports matter as much as raw speed. We at Melbicom operate a global backbone measured in double-digit terabits per second and can provision up to 200 Gbps per server where required.

- Compute for encoding/transcoding. HD and especially 4K/AV1 workloads punish CPUs. You’ll want high-core, current-generation processors, plus GPU offload (NVENC/AMF/Quick Sync) when density or latency demands it.

- Fast storage and I/O. NVMe SSDs eliminate seek bottlenecks and sustain multi-gigabyte-per-second reads. Hybrid tiers (NVMe + HDD) can work for large libraries, but hot content belongs on flash.

- Distributed reach via CDN. Even perfect origin servers won’t save a stream if the path to users is long. A CDN with dozens of global POPs puts segments close to the last mile and offloads origin bandwidth. Melbicom’s CDN spans 55+ locations across 36 countries; origins plug in with standard headers, origin-shielding, and tokenization.

Everything else—kernel tuning, congestion control variants, packaging tweaks—adds incremental value only if these pillars are sized correctly.

Dedicated Servers vs. Cloud

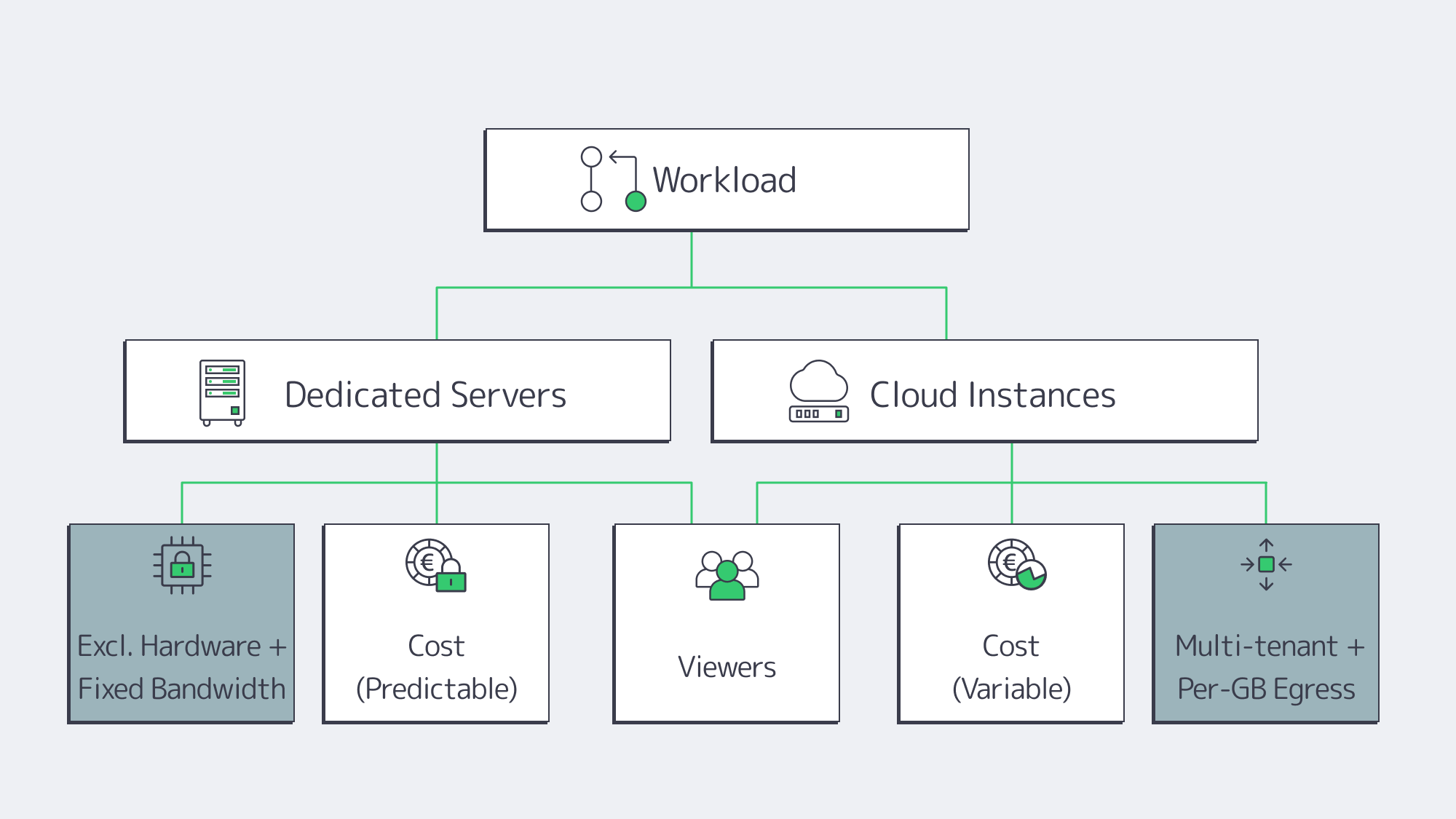

Cloud advantages. Fast time-to-first-instance and elasticity are real benefits. If you’re validating a format or riding unpredictable bursts, cloud can bridge the gap.

Cloud trade-offs for streaming. Video is egress-heavy. At $0.05–$0.09/GB in typical cloud egress, 1 PB/month can add $50k–$90k—for bandwidth alone. Multi-tenant “noisy neighbor” effects and hypervisor overhead also introduce variability exactly when you least want it: during peak concurrency.
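
The egress figure is simple multiplication, and it is worth rerunning with your own numbers. A minimal sketch, assuming a flat per-GB price (real cloud price lists are usually tiered) and a decimal petabyte; the function name is illustrative only:

```python
def egress_cost_usd(transfer_gb: float, price_per_gb: float) -> float:
    """Monthly egress bill for a transfer volume at a flat per-GB price.

    Assumption: one flat rate. Tiered pricing would need a lookup table.
    """
    return transfer_gb * price_per_gb


PB_IN_GB = 1_000_000  # decimal petabyte expressed in gigabytes

low = egress_cost_usd(PB_IN_GB, 0.05)
high = egress_cost_usd(PB_IN_GB, 0.09)
print(f"1 PB/month: ${low:,.0f} to ${high:,.0f}")
# 1 PB/month: $50,000 to $90,000
```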

Dedicated advantages. You get exclusive hardware (full CPU cores, RAM, and NICs) plus fixed, often unmetered bandwidth. That predictability keeps latency steady as concurrency climbs, and the cost per delivered gigabyte drops as your audience grows. Dedicated servers also give you full-stack control: CPU model, GPU option, NICs, NVMe layout, kernel version, and I/O schedulers are all tunable for media workloads.

A pragmatic strategy. Many teams run steady state on dedicated (for performance and cost efficiency) and keep the cloud for edge cases. Melbicom supports this path with 1,300+ ready-to-go configurations across 21 Tier IV & III data centers for rapid provisioning of the right shape at the right place.

Bandwidth First: the Constraint that Makes or Breaks Smooth Playback

Bandwidth is the streaming bottleneck most likely to surface first—and the one that is hardest to paper over with “optimization.” The math is stark:

- 10,000 × 1080p at ~5 Mbps ≈ 50 Gbps sustained.

- 10,000 × 4K at ~20 Mbps ≈ 200 Gbps sustained.
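
The arithmetic behind these figures fits in a few lines. The sketch below uses decimal units (1 Gbps = 1,000 Mbps) and a 30-day month; the helper names are illustrative. It also shows how much data one stream moves per hour and the most a port can transfer in a month if it runs saturated:

```python
def aggregate_gbps(viewers: int, bitrate_mbps: float) -> float:
    """Sustained egress needed to serve every viewer at the given bitrate."""
    return viewers * bitrate_mbps / 1000


def gb_per_hour(bitrate_mbps: float) -> float:
    """Data moved by one stream in an hour: Mbps -> MB/s -> MB/h -> GB/h."""
    return bitrate_mbps / 8 * 3600 / 1000


def monthly_port_cap_tb(port_gbps: float, days: int = 30) -> float:
    """Upper bound on monthly transfer for a port running flat-out."""
    gbytes_per_second = port_gbps / 8
    return gbytes_per_second * 86_400 * days / 1000


print(aggregate_gbps(10_000, 5))    # 50.0 Gbps for 1080p
print(aggregate_gbps(10_000, 20))   # 200.0 Gbps for 4K
print(gb_per_hour(20))              # 9.0 GB per viewer-hour at 20 Mbps
print(monthly_port_cap_tb(1))       # 324.0 TB on a saturated 1 Gbps port
```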

A once-standard 1 Gbps port saturates quickly; it also caps monthly transfer at roughly 324 TB if run flat-out. For modern services, 10 Gbps should be the minimum, with 40/100/200 Gbps used where concurrency or bitrate warrants. The interface is only part of the story; you also need:

- Non-oversubscribed ports. If a “10 Gbps” port is shared, you don’t really have 10 Gbps.

- Good routes and peering. Closer, cleaner paths reduce retransmits and startup delay.

- Redundancy. Dual uplinks and diverse routing keep live streams alive during incidents.

Melbicom’s network pairs high-capacity ports with a multi-Tbps backbone and wide peering to keep loss and jitter low. The result is practical: a streaming origin that can actually push the advertised line-rate—hour after hour.

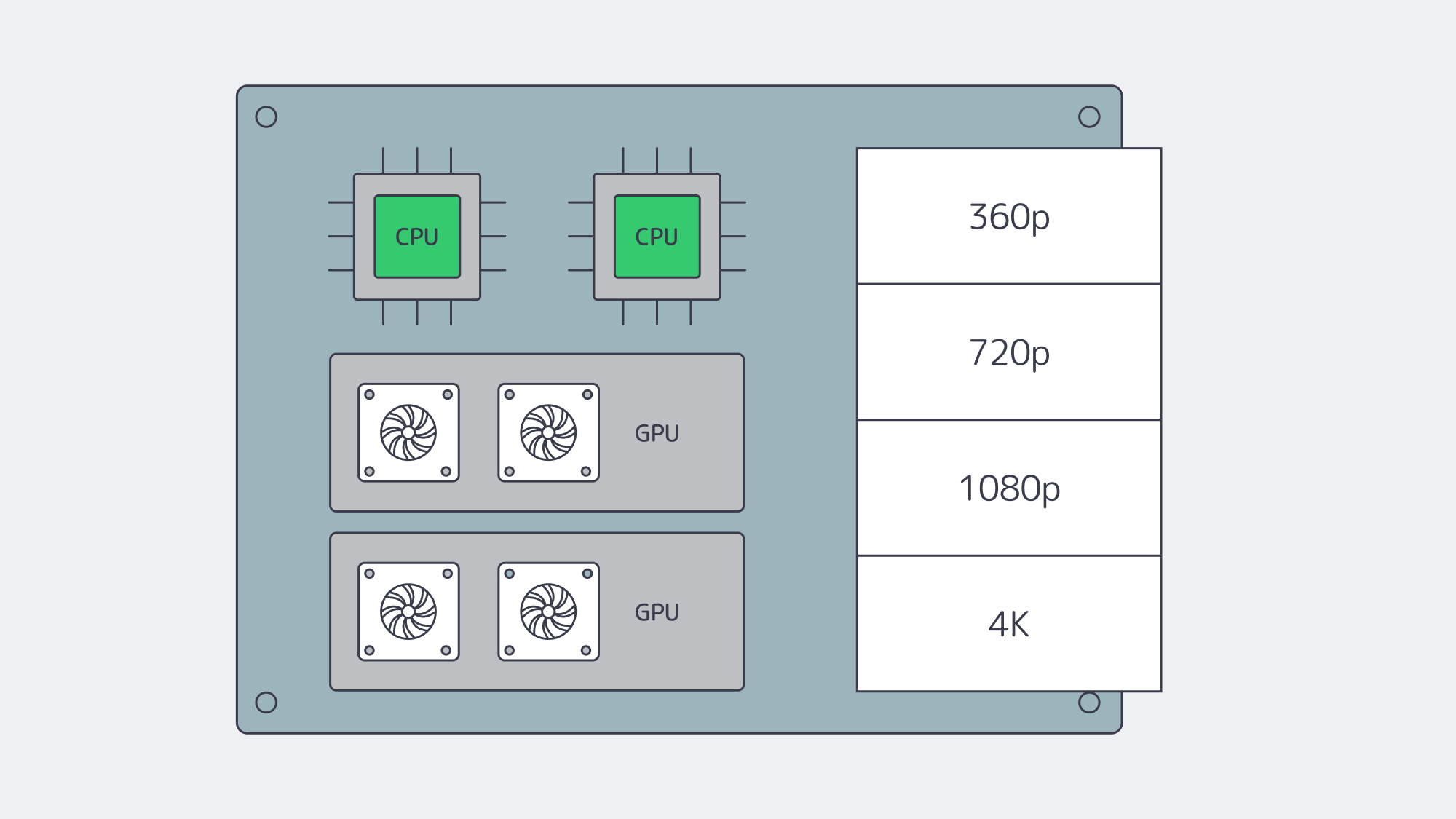

Compute: CPU and GPU for Real-Time Encoding and Packaging

Software encoders for H.264/H.265 chew through cores; AV1 is even more demanding. Plan for high-core, current-gen CPUs—32, 64, or more physical cores per server for dense live transcode farms—and keep clock speed in mind when single-stream latency matters. As workflows scale, GPU acceleration becomes the difference between “possible” and “operationally efficient.” A single modern NVIDIA card can handle dozens of 1080p or multiple 4K encodes in real time, freeing CPUs for packaging, DRM, timers, and I/O.

A few practical notes when sizing:

- Match encoders to business logic. If each live channel needs a full ladder of renditions (360p→4K), compute grows with every rendition you add and scales linearly with channel count. Hardware encoders flatten that curve.

- Use the right instruction set. AVX2/AVX-512 helps software encoders; stay on current CPU generations.

- Right-size RAM. 32–64 GB is a baseline for origins and packagers; live transcoders often need more for buffers and queues.
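
To get a feel for how a ladder multiplies encode work, one crude proxy is pixel throughput (width × height × frame rate) per rendition. The sketch below rests on that assumption and nothing more: real encoder cost also depends on codec, preset, and content, and the ladder shown is a hypothetical example, not a recommendation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    width: int
    height: int
    fps: int

    @property
    def pixels_per_second(self) -> int:
        return self.width * self.height * self.fps


# Hypothetical four-rung ladder, all at 30 fps.
LADDER = [
    Rendition("360p", 640, 360, 30),
    Rendition("720p", 1280, 720, 30),
    Rendition("1080p", 1920, 1080, 30),
    Rendition("2160p", 3840, 2160, 30),
]


def relative_load(ladder: list[Rendition], reference: Rendition) -> float:
    """Ladder cost in units of one reference encode (pixel-rate proxy only)."""
    total = sum(r.pixels_per_second for r in ladder)
    return total / reference.pixels_per_second


def farm_load(channels: int, ladder: list[Rendition], reference: Rendition) -> float:
    """Total load for identical channels; linear in channel count."""
    return channels * relative_load(ladder, reference)


ref_1080p = LADDER[2]
print(round(relative_load(LADDER, ref_1080p), 2))   # 5.56 "1080p-equivalents" per channel
print(round(farm_load(20, LADDER, ref_1080p), 1))   # 111.1 for 20 channels
```

Under this proxy the single 2160p rung accounts for most of the ladder's cost, which is one reason to benchmark your own encoder settings before committing to a CPU-only or GPU-assisted design.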

Melbicom offers dedicated systems with CPU-dense footprints and GPU options ready for media workloads. We’ll help you align codec plans, density targets, and cost per channel.

Storage and I/O: NVMe as the Default, Hybrid for Scale

Legacy wisdom held that “video is sequential, HDDs are fine.” That’s only true in the lab. In production, hundreds or thousands of viewers request different segments, skip around, and collide at popular timestamps—turning your disk profile into a random I/O problem that spinning disks handle poorly. Modern origins should treat NVMe SSDs as the default for hot content:

- Throughput. Single NVMe drives sustain 3–7 GB/s reads; arrays scale close to linearly as long as PCIe lanes and CPU keep up.

- Latency. Sub-millisecond access dramatically improves time-to-first-byte and reduces startup delay.

- Concurrency. High IOPS keeps per-stream fetches snappy even under heavy fan-out.

For large libraries, pair NVMe (hot tier) with HDD capacity (cold tier). Software caching (filesystem or application-level) keeps the working set on flash, while HDD arrays hold the long tail. A critical sanity check: ensure storage throughput ≥ NIC throughput. A 40 Gbps port needs about 5 GB/s of reads, so a single HDD will never reach line rate; a few NVMe drives or a wide HDD RAID can. Melbicom offers dedicated servers with NVMe-first storage so your disks never become the limiter for an otherwise capable 10/40/100/200 Gbps network path.
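
The storage-versus-NIC check can be sketched as a drive count. The per-drive figures are assumptions: 3,000 MB/s is the low end of the NVMe range quoted above, and 200 MB/s is an optimistic sequential figure for one HDD (random I/O under real concurrency will be far lower). RAID, filesystem, and cache effects are ignored.

```python
import math


def nic_mbytes_per_second(nic_gbps: float) -> float:
    """Read rate storage must sustain to fill the port (decimal units)."""
    return nic_gbps * 1000 / 8


def drives_needed(nic_gbps: float, per_drive_mbytes_per_second: float) -> int:
    """Minimum drives whose combined reads match NIC line rate."""
    return math.ceil(nic_mbytes_per_second(nic_gbps) / per_drive_mbytes_per_second)


NVME_MBPS = 3000  # assumed conservative NVMe sequential read
HDD_MBPS = 200    # assumed best-case HDD sequential read

for port in (10, 40, 100, 200):
    print(port, "Gbps:", drives_needed(port, NVME_MBPS), "NVMe or",
          drives_needed(port, HDD_MBPS), "HDDs")
```

For a 40 Gbps port this gives 2 NVMe drives or 25 HDDs in the best case, which is why flash is the practical choice for hot content.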

Global Reach, Low Latency: Origins in the Right Places, CDN at the Edge

You cannot fight the speed of light. The farther a user is from your origin, the higher the startup delay and the larger the buffer required to hide jitter. Best practice is twofold:

- Multi-region origins. Place dedicated servers near major exchanges in your key audience regions—e.g., Amsterdam/Frankfurt, Ashburn/New York, Singapore/Tokyo—so CDN origin fetches are short and reliable. Melbicom operates 21 locations worldwide.

- CDN everywhere your users are. A 55+ POP footprint (like Melbicom’s CDN) minimizes last-mile latency. It also shifts the load: the CDN serves most viewer requests, while your origins handle cache fill, authorization, and long tail content.

Routing features—peering with local ISPs, tuned congestion control, and judicious use of anycast or GeoDNS—trim further milliseconds. For live formats (LL-HLS/DASH, WebRTC), these shave enough delay to keep “glass-to-glass” comfortable and consistent.
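
The speed-of-light floor is easy to quantify. Light in optical fiber travels at roughly two-thirds of its vacuum speed, about 200 km per millisecond, so distance alone sets a minimum round-trip time. In this sketch the path lengths and the round-trip count are hypothetical inputs; real paths are longer than the straight-line distance and add queuing and processing delay.

```python
FIBER_KM_PER_MS = 200.0  # approx. propagation speed in fiber (~2/3 of c)


def min_rtt_ms(path_km: float) -> float:
    """Propagation-only round-trip time over a fiber path of this length."""
    return 2 * path_km / FIBER_KM_PER_MS


def startup_floor_ms(path_km: float, round_trips: int) -> float:
    """Lower bound on startup delay when several round trips precede the
    first video bytes (connection setup, manifest fetch, first segment)."""
    return round_trips * min_rtt_ms(path_km)


print(min_rtt_ms(1_000))           # 10.0 ms for a nearby edge
print(min_rtt_ms(6_000))           # 60.0 ms across an ocean
print(startup_floor_ms(6_000, 4))  # 240.0 ms before any playback work starts
```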

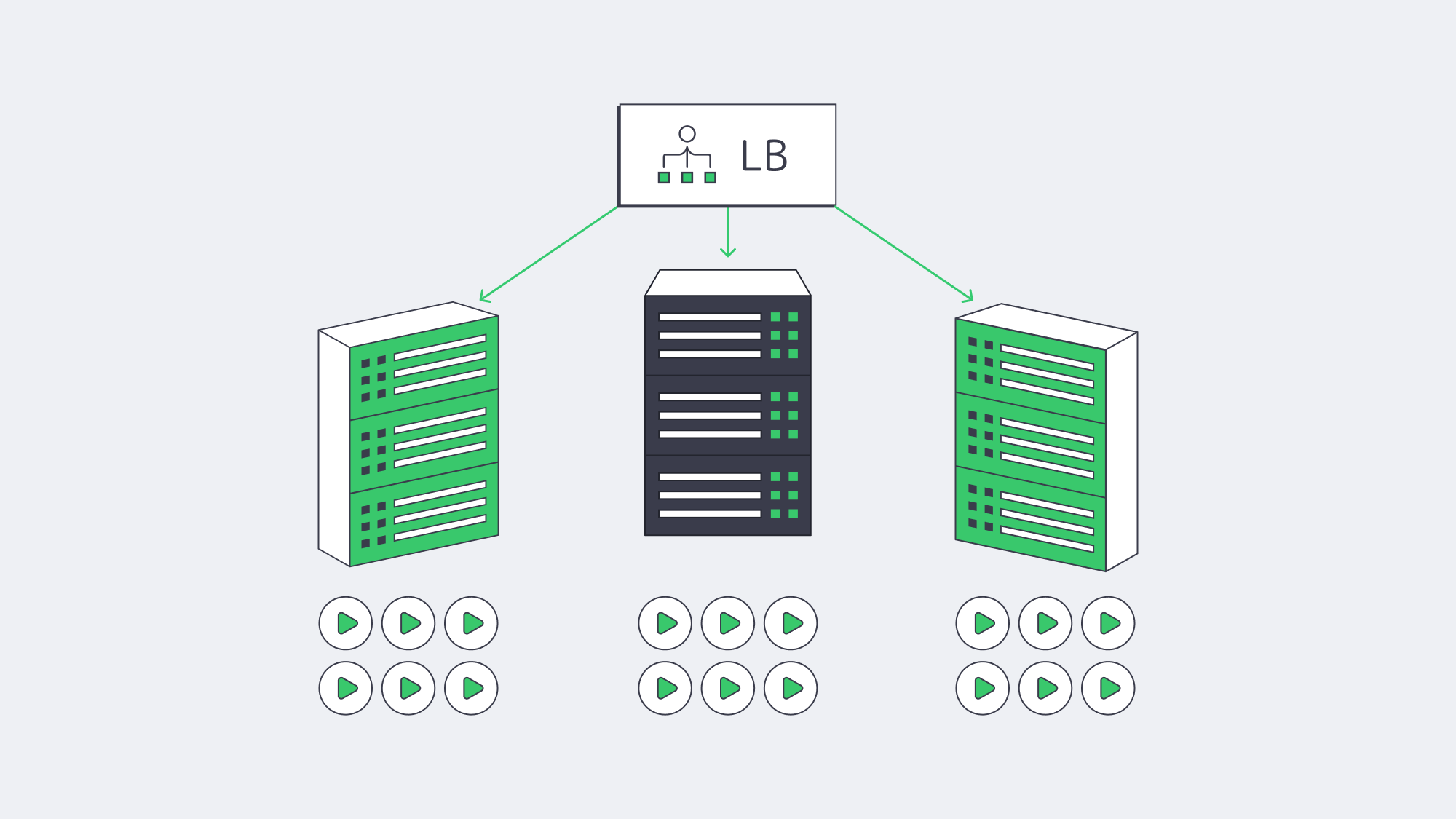

Concurrency at Scale: Predictable Performance when it Counts

Smooth playback under high concurrency is where dedicated servers repay the decision. Without hypervisor overhead or multi-tenant contention, you get stable CPU budgets per stream, deterministic packet pacing, and no surprise throttles on ports. That stability is the difference between riding out a surge and watching buffer wheels spin. It also simplifies capacity planning:

- Estimate your peak simultaneous viewers and average ladder bitrate.

- Convert that to a Gbps target per origin, including headroom.

- Add elasticity with either more origins (horizontal scale) or fatter ports (vertical scale).

Because costs are fixed, you can afford to over-provision a bit—buying insurance against viral spikes without incurring runaway per-GB fees.
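
Those planning steps translate into a small calculator. This is a sketch under stated assumptions: the 25% headroom default is a placeholder rather than a recommendation, every origin is assumed able to fill its port, and CDN offload is ignored, so the result is a worst case in which origins serve all viewers directly.

```python
import math
from typing import NamedTuple


class OriginPlan(NamedTuple):
    demand_gbps: float   # peak viewers x average ladder bitrate
    target_gbps: float   # demand plus headroom
    origins: int         # servers needed at the chosen port size


def plan_origins(peak_viewers: int, avg_bitrate_mbps: float,
                 port_gbps: float, headroom: float = 0.25) -> OriginPlan:
    """Size an origin tier from peak concurrency and average bitrate."""
    demand = peak_viewers * avg_bitrate_mbps / 1000
    target = demand * (1 + headroom)
    return OriginPlan(demand, target, math.ceil(target / port_gbps))


# Horizontal scale (more origins) versus vertical scale (fatter ports).
for port in (10, 40, 100):
    plan = plan_origins(10_000, 5, port_gbps=port)
    print(f"{port} Gbps ports: {plan.origins} origin(s) for {plan.target_gbps} Gbps")
```

With 10,000 viewers at 5 Mbps, the 62.5 Gbps target needs seven origins on 10 Gbps ports, two on 40 Gbps, or one on 100 Gbps.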

Future-Proofing: Codecs, Bitrates, Growth

Codec transitions (AV1 today, more to come) and richer formats (HDR, high-FPS, eventually 8K for select use cases) all push bitrate and compute up. Build with headroom:

- Network. Start at 10 Gbps, plan for 40/100/200 Gbps upgrades.

- Compute. Favor CPU families with strong SIMD, and keep a path to GPU-accelerated encoding.

- Storage. Assume NVMe for hot sets; scale horizontally with sharding or caching as libraries grow.

- Placement. Add regions as your audience map changes; test performance regularly.

Melbicom’s model—configurable dedicated servers in 21 locations, an integrated 55+ POP CDN, and high-bandwidth per-server options—lets you extend capacity in step with viewership without re-architecting from scratch.

Practical Checklist for Video Streaming Server Hosting

- Bandwidth & routing. 10 Gbps minimum; verify non-oversubscription and peering quality. Keep an upgrade path to 40/100/200 Gbps.

- CPU/GPU. High-core CPUs for software encodes; GPUs to multiply channels per node and lower per-stream latency.

- Storage. NVMe for hot read paths; hybrid tiers for cost control. Ensure disk can saturate NICs under concurrency.

- CDN. Use it. Pick a footprint that maps to your audience; configure origin shield and sane cache keys for segments.

- Regions. Place origins near user clusters and IXPs; plan failover across regions.

- Costs. Model egress carefully. Dedicated servers with fixed or unmetered bandwidth usually beat per-GB cloud billing at scale.

- Operations. Monitor CPU, NIC, and disk saturation; tune kernel and socket buffers; automate capacity adds before events.
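
For the socket-buffer item, a common starting point is the bandwidth-delay product (BDP): the amount of data a connection must keep in flight to sustain a given rate over a given round-trip time. The sketch below only does that arithmetic; the sample rates and RTTs are assumptions, and actual buffer limits should be validated under load.

```python
def bdp_bytes(rate_mbps: float, rtt_ms: float) -> int:
    """Bandwidth-delay product in bytes.

    rate_mbps * 1e6 / 8 gives bytes per second; multiplying by rtt_ms / 1000
    gives bytes in flight, which simplifies to rate_mbps * rtt_ms * 125.
    """
    return round(rate_mbps * rtt_ms * 125)


# One 4K viewer at 20 Mbps with a 50 ms round trip.
print(bdp_bytes(20, 50))       # 125000 bytes

# A single 10 Gbps cache-fill flow over the same 50 ms path.
print(bdp_bytes(10_000, 50))   # 62500000 bytes
```

The gap between the two results is the point: per-viewer connections need modest buffers, while a few fat origin-to-CDN flows over long paths need far larger ones.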

Build for Smooth Playback, Scale for Spikes

The core job of a streaming platform is simple to say and hard to do: start instantly and never stutter. That demands big, clean network pipes, compute engineered for codecs, and NVMe-class I/O—backed by a CDN that puts content within a short RTT of every viewer. Dedicated servers excel here because they deliver predictable performance under concurrency and cost discipline at high egress. When you can guarantee 10/40/100/200 Gbps per origin, encode in real time without jitter, and serve hot segments from flash, viewers feel the difference—especially during peak moments.

If you’re mapping next steps—migrating off the cloud for economics, preparing for a rights-holder’s audience surge, or adopting AV1 at scale—the blueprint is consistent: place origins where they make sense, pair them with a broad CDN, and size network/compute/storage for tomorrow’s ladders, not yesterday’s.

Stream-ready dedicated servers

Deploy high-bandwidth servers in 21 global data centers and start streaming without limits.